-

Posts

27 -

Joined

-

Last visited

Content Type

Forums

Store

Crowdfunding

Applications

Events

Raffles

Community Map

Everything posted by antsu

-

This time it survived a little longer, but sure enough, today I woke up to my H64 unreachable on the 2.5G port again: [255036.090052] xhci-hcd xhci-hcd.0.auto: xHCI host not responding to stop endpoint command. [255036.090061] xhci-hcd xhci-hcd.0.auto: USBSTS: [255036.103643] xhci-hcd xhci-hcd.0.auto: xHCI host controller not responding, assume dead [255036.103681] xhci-hcd xhci-hcd.0.auto: HC died; cleaning up [255036.103734] r8152 4-1.4:1.0 eth1: Stop submitting intr, status -108 [255036.103774] r8152 4-1.4:1.0 eth1: get_registers -110 [255036.103822] r8152 4-1.4:1.0 eth1: Tx status -108 [255036.103831] r8152 4-1.4:1.0 eth1: Tx status -108 [255036.103839] r8152 4-1.4:1.0 eth1: Tx status -108 [255036.103850] r8152 4-1.4:1.0 eth1: Tx status -108 [255036.103868] usb 3-1: USB disconnect, device number 2 [255036.109516] usb 4-1: USB disconnect, device number 2 [255036.109526] usb 4-1.1: USB disconnect, device number 3 [255036.131518] usb 4-1.4: USB disconnect, device number 4 [255429.047792] zio pool=backups vdev=/dev/disk/by-id/usb-WD_Elements_XXXX_XXXXXXXXXXXXXXX-0:0-part1 error=5 type=1 offset=3186896842752 size=4096 flags=180880 [255429.047897] zio pool=backups vdev=/dev/disk/by-id/usb-WD_Elements_XXXX_XXXXXXXXXXXXXXX-0:0-part1 error=5 type=1 offset=270336 size=8192 flags=b08c1 [255429.047937] zio pool=backups vdev=/dev/disk/by-id/usb-WD_Elements_XXXX_XXXXXXXXXXXXXXX-0:0-part1 error=5 type=1 offset=14000475086848 size=8192 flags=b08c1 [255429.047966] zio pool=backups vdev=/dev/disk/by-id/usb-WD_Elements_XXXX_XXXXXXXXXXXXXXX-0:0-part1 error=5 type=1 offset=14000475348992 size=8192 flags=b08c1 [255429.048323] WARNING: Pool 'backups' has encountered an uncorrectable I/O failure and has been suspended. [255433.136397] WARNING: Pool 'backups' has encountered an uncorrectable I/O failure and has been suspended. I'm going back to using just the 1G port for now, since my H64 has been much more stable after the IO scheduler changes suggested by @ShadowDance in another thread, but happy to do more tests if anyone wants to try to figure out what's happening.

-

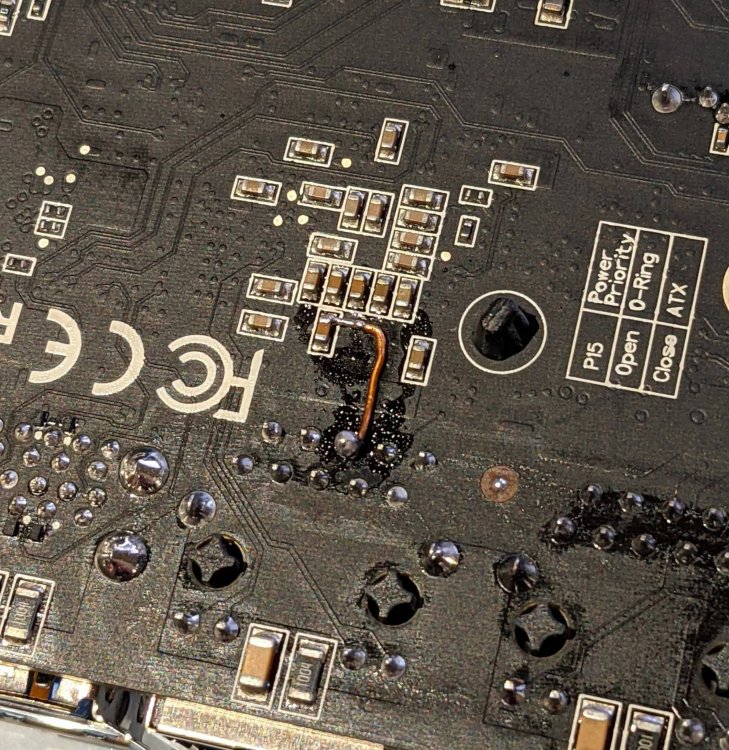

I was trying to avoid opening a new instability topic, but this one seems different enough from the others to deserve it's own discussion. A few days ago I applied the 1G fix (pic attached) to the 2.5G port - which was not in use until then, since all my hardware is 1G max. I did this with the intention of using both ports: the 1G port goes to an isolated VLAN that serves VM images to my Proxmox hosts, and the 2.5G interface (running at 1G speed) serves files to the rest of my network. While the fix appears to work and the port is able to communicate at 1G speeds seemingly fine, after changing to this setup I'm experiencing frequent drops in the USB bus, which causes both the 2.5G port and my USB HDD (which has its own PSU) to disconnect, requiring a reboot to regain connectivity. When this happens, I get these messages in dmesg: [48657.415910] xhci-hcd xhci-hcd.0.auto: xHCI host not responding to stop endpoint command. [48657.415930] xhci-hcd xhci-hcd.0.auto: USBSTS: [48657.429522] xhci-hcd xhci-hcd.0.auto: xHCI host controller not responding, assume dead [48657.429604] xhci-hcd xhci-hcd.0.auto: HC died; cleaning up [48657.429952] r8152 4-1.4:1.0 eth1: Stop submitting intr, status -108 [48657.430068] r8152 4-1.4:1.0 eth1: get_registers -110 [48657.430193] r8152 4-1.4:1.0 eth1: Tx status -108 [48657.430223] r8152 4-1.4:1.0 eth1: Tx status -108 [48657.430241] r8152 4-1.4:1.0 eth1: Tx status -108 [48657.430262] r8152 4-1.4:1.0 eth1: Tx status -108 [48657.430327] usb 3-1: USB disconnect, device number 2 [48657.431551] usb 4-1: USB disconnect, device number 2 [48657.431572] usb 4-1.1: USB disconnect, device number 3 [48657.467516] usb 4-1.4: USB disconnect, device number 4 The problem seems to manifest more quickly if I push more data through that interface. I'm running a clean (new) install of Armbian 21.02.3, with OMV and ZFS. Full dmesg and boot log below.

-

@ShadowDance It's a regular swap partition on sda1, not on a zvol. But thanks for the quick reply.

-

New crash, but different behaviour and conditions this time. It had a kernel oops at around 5am this morning, resulting in a graceful-ish reboot. From my limited understanding, the error seems to be related with swap (the line "Comm: swapper"), so I have disabled the swap partition for now and will continue to monitor. The major difference this time is that there was nothing happening at that time, it was just sitting idle.

-

@ShadowDance I think you're definitely on to something here! I just ran the Rsync jobs after setting the scheduler to none for all disks and it completed successfully without crashing. I'll keep an eye on it and report any other crashes, but for now thank you very much! Update: It's now a little over 3 hours into a ZFS scrub, and I restarted all my VMs simultaneously *while* doing the scrub and it has not rebooted nor complained about anything on dmesg. This is very promising! Update 2: Scrub finished without problems!

-

@aprayogaAs I mentioned, the crash happens when some Rsync jobs are run. I'll try to describe my setup as best as possible. My Helios64 is running LK 5.10.21, OMV 5.6.2-1, and has a RAID-Z2 array + a simple EXT4 partition. The drives are: - Bay 1: 500GB Crucial MX500. - partition 1: 16GB swap partition, created while troubleshooting the crashes (to make sure it was not due to lack of memory). The crashes happen both with and without it. - partition 2: 400GB EXT4 partition, used to serve VM/container images via NFS. - partition 3: 50GB write cache for the ZFS pool. - Bays 2 through 5: 4x 4TB Seagate IronWolf (ST4000VN008-2DR1) in RAID-Z2, ashift=12, ZSTD compression (although most of the data is compressed with LZ4). My remote NAS is a Helios4, also running OMV, with the Rsync plugin in server mode. On the Helios64 I have 4 Rsync jobs for 4 different shared folders, 3 sending and 1 receiving. They all are scheduled to run on Sundays at 3 AM. The reason for starting all 4 jobs at the same time is to maximise the bandwidth usage, since the backup happens over the internet (Wireguard running on the router, not on the H64), and the latency from where I am to where the Helios4 is (Brazil) is quite high. The transfers are incremental, so not a lot of data gets sent with each run, but I guess Rsync still needs to read all the files on the source to see what has changed, causing the high IO load. The data on these synced folders is very diverse in size and number of files. In total it's about 1.7TB. Another way I can reproduce the crash is by running a ZFS scrub. And also as I mentioned in the previous post, in one occasion, the NAS crashed when generating simultaneous IO from multiple VMs, which sit on an EXT4 partition on the SSD, so it doesn't seem limited to the ZFS pool.

-

Sorry if I'm hijacking this thread, but the title fits the problem, and I didn't want to create yet another thread about stability issues. I'm also having similar problems with my Helios64. It will consistently crash and reboot when I/O is stressed. The two most common scenarios where this happens is when running an Rsync job that I use to sync my H64 with my remote backup NAS (a Helios4 that still works like a champ), and when performing a ZFS scrub. In one isolated occasion, I was also able to trigger a crash by executing an Ansible playbook that made several VMs generate I/O activity simultaneously (the VMs disks are served by the H64). Since the Rsync crash is consistent and predictable, I managed to capture the crash output from the serial console in two occasions and under different settings, listed below: Kernel 5.10.16, performance governor, frequency locked to 1.2GHz Kernel 5.10.21, ondemand governor, dynamic stock frequencies (settings recommended in this and other stability threads): Happy to help with more information or tests.

-

So, I went hunting for the PR where this got merged, found the reference to kernel module "ledtrig-netdev", noticed the module wasn't loaded on my Helios 64 (no idea why), loaded it via modprobe, restarted the service unit I mentioned in the post above, and now it works. I've added ledtrig-netdev to /etc/modules-load.d/modules.conf, so now it should hopefully work automagically on every boot.

-

Hi all. Some time ago, in the release notes of a past Armbian version, I remember reading that the network LED should now be enabled by default and associated with eth0. Despite always keeping my Helios64 as up-to-date as possible, mine never lit up, but I didn't think much of it. Today I decided to investigate why, and I'm not sure where to go from here. First things first, the LED works, it's not a hardware issue. I can echo 1 and 0 to the LED path (/sys/class/leds/helios64:blue:net/brightness) and it lights on and off as expected. From what I can tell, this LED, along with the heartbeat one, is enabled by the Systemd unit helios64-heartbeat-led.service. When I check for the status of the unit, I get the following output: ● helios64-heartbeat-led.service - Enable heartbeat & network activity led on Helios64 Loaded: loaded (/etc/systemd/system/helios64-heartbeat-led.service; enabled; vendor preset: enabled) Active: failed (Result: exit-code) since Sat 2021-03-13 00:30:52 GMT; 8min ago Process: 3740 ExecStart=/usr/bin/bash -c echo heartbeat | tee /sys/class/leds/helios64\:\:status/trigger (code=exited, status=0/SUCCESS) Process: 3744 ExecStart=/usr/bin/bash -c echo netdev | tee /sys/class/leds/helios64\:blue\:net/trigger (code=exited, status=1/FAILURE) Main PID: 3744 (code=exited, status=1/FAILURE) Mar 13 00:30:52 nas.lan systemd[1]: Starting Enable heartbeat & network activity led on Helios64... Mar 13 00:30:52 nas.lan bash[3740]: heartbeat Mar 13 00:30:52 nas.lan bash[3744]: netdev Mar 13 00:30:52 nas.lan bash[3744]: tee: '/sys/class/leds/helios64:blue:net/trigger': Invalid argument Mar 13 00:30:52 nas.lan systemd[1]: helios64-heartbeat-led.service: Main process exited, code=exited, status=1/FAILURE Mar 13 00:30:52 nas.lan systemd[1]: helios64-heartbeat-led.service: Failed with result 'exit-code'. Mar 13 00:30:52 nas.lan systemd[1]: Failed to start Enable heartbeat & network activity led on Helios64. Looking into the unit's source, I can see the line where this fails: ExecStart=bash -c 'echo netdev | tee /sys/class/leds/helios64\\:blue\\:net/trigger' And sure enough, there is no "netdev" inside /sys/class/leds/helios64:blue:net/trigger: $ cat /sys/class/leds/helios64:blue:net/trigger [none] usb-gadget usb-host kbd-scrolllock kbd-numlock kbd-capslock kbd-kanalock kbd-shiftlock kbd-altgrlock kbd-ctrllock kbd-altlock kbd-shiftllock kbd-shiftrlock kbd-ctrlllock kbd-ctrlrlock usbport mmc1 mmc2 disk-activity disk-read disk-write ide-disk mtd nand-disk heartbeat cpu cpu0 cpu1 cpu2 cpu3 cpu4 cpu5 activity default-on panic stmmac-0:00:link stmmac-0:00:1Gbps stmmac-0:00:100Mbps stmmac-0:00:10Mbps rc-feedback gpio-charger-online tcpm-source-psy-4-0022-online I'm not sure why it's not there or how does that get populated. I'm running Armbian Buster 21.02.3 with LK 5.10.21-rockchip64. Any help will be much appreciated.

-

Use kernel 5.8 and follow the instructions on the thread above.

-

@jberglerThank you very much for that. The steps just needed a small tweak, but it works perfectly. Running on kernel 5.8.16 right now! The small tweak is just to also install flex and bison before installing the kernel headers package (or run apt-get install -f if already installed).

-

@jberglerThanks again for the ZFS module you shared on the Helios64 support thread. It's been running for days without any problems. If it's not too much trouble, would you mind sharing the process you used to build it?

-

A little advice for anyone planning to use nand-sata-install to install on the eMMC and has already installed and configured OMV: nand-sata-install will break OMV, but it's easy to fix if you know what's happening. It will skip /srv when copying the root to avoid copying stuff from other mounted filesystems, but OMV 5 stores part of its config in there (salt and pillar folders) and will throw a fit if they're not there when you boot from the eMMC. Simply copy these folders back from the microSD using your preferred method. If you have NFS shares set in OMV, make sure to add the entry /export/* to the file /usr/lib/nand-sata-install/exclude.txt BEFORE running nand-sata-install, or it will try to copy the content of your NFS shares to the eMMC. Lastly, if you're using ZFS, which by default mounts to /<pool_name>, make sure to add its mountpoint to /usr/lib/nand-sata-install/exclude.txt before nand-sata-install as well.

-

@RaSca Are your disks powered from the USB port itself, or do they have their own power supplies? Is your USB hub powered externally? To me it sounds like the disks are trying to draw more power than the board can provide, and thus failing. For the record, I have a 14TB WD Elements (powered by a 12V external PSU) connected to my H64 and running flawlessly, reaching the max speeds the disk can provide (~200MB/s reads).

-

Idk if these questions are within the scope of this thread, but I'm curious about a few things regarding thermals on the Helios64, if someone from Kobol could clarify: - What is the expected temperature range for this SoC? - How does the heatsink interface with the SoC? Pads, paste? - Does the heatsink also make contact with any other chips around the CPU? - Would it benefit in any way from a "re-paste" with high performance thermal paste? I could, of course, just disassemble mine and find out for myself, but I figured I would ask first.

-

@raoulh The correct package name is linux-headers-current-rockchip64.

-

@KiSMI had the same kind of issue with the legacy image. It seems anything that puts a constant load on the system eventually causes a kernel crash, and sometimes a reboot. The current image (kernel 5.8) however is rock solid. I've been using it now for 2 days and really stressing both the CPU and disks on my Helios64 without a single problem.

-

Legend! Thank you very much, I'll give this a try asap.

-

I agree. Apologies for sort of derailing the thread and my sincere thanks to those who tried to help. I'll keep an eye on the other thread I mentioned in my previous posts, since it is already essentially the same topic.

-

Nothing wrong as far as I can tell: sudo smartctl --all /dev/sde smartctl 6.6 2017-11-05 r4594 [aarch64-linux-4.4.213-rk3399] (local build) Copyright (C) 2002-17, Bruce Allen, Christian Franke, www.smartmontools.org === START OF INFORMATION SECTION === Model Family: Seagate IronWolf Device Model: ST4000VN008-2DR166 Serial Number: xxxxxx LU WWN Device Id: xxxxxx Firmware Version: SC60 User Capacity: 4,000,787,030,016 bytes [4.00 TB] Sector Sizes: 512 bytes logical, 4096 bytes physical Rotation Rate: 5980 rpm Form Factor: 3.5 inches Device is: In smartctl database [for details use: -P show] ATA Version is: ACS-3 T13/2161-D revision 5 SATA Version is: SATA 3.1, 6.0 Gb/s (current: 6.0 Gb/s) Local Time is: Wed Oct 7 18:33:40 2020 BST SMART support is: Available - device has SMART capability. SMART support is: Enabled === START OF READ SMART DATA SECTION === SMART overall-health self-assessment test result: PASSED General SMART Values: Offline data collection status: (0x82) Offline data collection activity was completed without error. Auto Offline Data Collection: Enabled. Self-test execution status: ( 0) The previous self-test routine completed without error or no self-test has ever been run. Total time to complete Offline data collection: ( 591) seconds. Offline data collection capabilities: (0x7b) SMART execute Offline immediate. Auto Offline data collection on/off support. Suspend Offline collection upon new command. Offline surface scan supported. Self-test supported. Conveyance Self-test supported. Selective Self-test supported. SMART capabilities: (0x0003) Saves SMART data before entering power-saving mode. Supports SMART auto save timer. Error logging capability: (0x01) Error logging supported. General Purpose Logging supported. Short self-test routine recommended polling time: ( 1) minutes. Extended self-test routine recommended polling time: ( 664) minutes. Conveyance self-test routine recommended polling time: ( 2) minutes. SCT capabilities: (0x50bd) SCT Status supported. SCT Error Recovery Control supported. SCT Feature Control supported. SCT Data Table supported. SMART Attributes Data Structure revision number: 10 Vendor Specific SMART Attributes with Thresholds: ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE 1 Raw_Read_Error_Rate 0x000f 080 064 044 Pre-fail Always - 97064976 3 Spin_Up_Time 0x0003 094 093 000 Pre-fail Always - 0 4 Start_Stop_Count 0x0032 100 100 020 Old_age Always - 132 5 Reallocated_Sector_Ct 0x0033 100 100 010 Pre-fail Always - 0 7 Seek_Error_Rate 0x000f 093 060 045 Pre-fail Always - 2082420238 9 Power_On_Hours 0x0032 083 083 000 Old_age Always - 14904 (177 35 0) 10 Spin_Retry_Count 0x0013 100 100 097 Pre-fail Always - 0 12 Power_Cycle_Count 0x0032 100 100 020 Old_age Always - 132 184 End-to-End_Error 0x0032 100 100 099 Old_age Always - 0 187 Reported_Uncorrect 0x0032 100 100 000 Old_age Always - 0 188 Command_Timeout 0x0032 100 099 000 Old_age Always - 2 189 High_Fly_Writes 0x003a 100 100 000 Old_age Always - 0 190 Airflow_Temperature_Cel 0x0022 071 053 040 Old_age Always - 29 (Min/Max 28/29) 191 G-Sense_Error_Rate 0x0032 100 100 000 Old_age Always - 0 192 Power-Off_Retract_Count 0x0032 100 100 000 Old_age Always - 47 193 Load_Cycle_Count 0x0032 088 088 000 Old_age Always - 24785 194 Temperature_Celsius 0x0022 029 047 000 Old_age Always - 29 (0 16 0 0 0) 197 Current_Pending_Sector 0x0012 100 100 000 Old_age Always - 0 198 Offline_Uncorrectable 0x0010 100 100 000 Old_age Offline - 0 199 UDMA_CRC_Error_Count 0x003e 200 200 000 Old_age Always - 0 240 Head_Flying_Hours 0x0000 100 253 000 Old_age Offline - 14694 (50 14 0) 241 Total_LBAs_Written 0x0000 100 253 000 Old_age Offline - 50906738288 242 Total_LBAs_Read 0x0000 100 253 000 Old_age Offline - 157149639538 SMART Error Log Version: 1 No Errors Logged SMART Self-test log structure revision number 1 Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error # 1 Short offline Completed without error 00% 14904 - SMART Selective self-test log data structure revision number 1 SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS 1 0 0 Not_testing 2 0 0 Not_testing 3 0 0 Not_testing 4 0 0 Not_testing 5 0 0 Not_testing Selective self-test flags (0x0): After scanning selected spans, do NOT read-scan remainder of disk. If Selective self-test is pending on power-up, resume after 0 minute delay.

-

Just tried building 0.8.5 on the Buster current image. Unfortunately is still fails with the "__stack_chk_guard" errors. This topic and this GitHub issue (by the same author) have more info about the possible cause for this. ---------------------- @aprayoga I will try to reproduce the crash on legacy again and post the results.

-

This one works "even less" (if that makes sense) since it's missing this patch and fails at an earlier stage in the compilation process. (With the patch applied it fails at the same point I mentioned in my previous post). Unrelated, but I should also note that today, after configuring my Helios64 with the legacy Armbian image and letting it run for a few hours with a moderate load, it crashed again with a kernel panic. Unfortunately I wasn't able to capture the panic messages as I had iftop open on the serial console at the time and it all mixed together beautifully.

-

@IgorI had the same error with the package you posted as I had when manually compiling from source (which again, seems to be related to the GCC version): MODPOST /var/lib/dkms/zfs/0.8.4/build/module/Module.symvers ERROR: modpost: "__stack_chk_guard" [/var/lib/dkms/zfs/0.8.4/build/module/zfs/zfs.ko] undefined! ERROR: modpost: "__stack_chk_guard" [/var/lib/dkms/zfs/0.8.4/build/module/zcommon/zcommon.ko] undefined! ERROR: modpost: "__stack_chk_guard" [/var/lib/dkms/zfs/0.8.4/build/module/unicode/zunicode.ko] undefined! ERROR: modpost: "__stack_chk_guard" [/var/lib/dkms/zfs/0.8.4/build/module/spl/spl.ko] undefined! ERROR: modpost: "__stack_chk_guard" [/var/lib/dkms/zfs/0.8.4/build/module/nvpair/znvpair.ko] undefined! ERROR: modpost: "__stack_chk_guard" [/var/lib/dkms/zfs/0.8.4/build/module/lua/zlua.ko] undefined! ERROR: modpost: "__stack_chk_guard" [/var/lib/dkms/zfs/0.8.4/build/module/icp/icp.ko] undefined! ERROR: modpost: "__stack_chk_guard" [/var/lib/dkms/zfs/0.8.4/build/module/avl/zavl.ko] undefined! make[4]: *** [scripts/Makefile.modpost:111: /var/lib/dkms/zfs/0.8.4/build/module/Module.symvers] Error 1 make[4]: *** Deleting file '/var/lib/dkms/zfs/0.8.4/build/module/Module.symvers' make[3]: *** [Makefile:1665: modules] Error 2 make[3]: Leaving directory '/usr/src/linux-headers-5.8.13-rockchip64' make[2]: *** [Makefile:30: modules] Error 2 make[2]: Leaving directory '/var/lib/dkms/zfs/0.8.4/build/module' make[1]: *** [Makefile:808: all-recursive] Error 1 make[1]: Leaving directory '/var/lib/dkms/zfs/0.8.4/build' make: *** [Makefile:677: all] Error 2 Just to test it, I've added the Debian testing repo to the Buster image and installed build-essential from there, which contains GCC 10. With GCC10 your package installs successfully, but then the testing repo breaks OMV compatibility. Guess I'll just stay in legacy for the time being.

-

Thank you. To be clear, I did try to compile the latest ZFS from source, but ran into some errors that my internet searches suggest have to do with the GCC version in Debian Buster. I was able to get it to build successfully with the Debian Bullseye image (which as far as I can tell uses GCC 10), but then there's no OMV... I'll give that package a shot, thanks again.