AxelFoley


Posts posted by AxelFoley

-

-

Thanks Igor ... should I install lightdm as the default from armbian-config again?

What's going to be the new default?

-

Another reproducible issue is that I can get exactly the same screen lockup on boot.

When I reinstalled the desktop from armbian-config, it installed lightdm and removed nodm.

I backed out this change: I uninstalled lightdm and reinstalled nodm in case that was what was causing the instability.

Unfortunately, doing so changed the default nodm auto login to root instead of my default user (pico).

If I edit /etc/default/nodm back to pico instead of root, it locks up in the same manner every time.

To fix the issue I have to mount the eMMC module externally and edit the file back to the default login as root to be able to access the desktop.
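For anyone needing to make the same edit, here is a minimal sketch as a shell function. It assumes the login user is set by the NODM_USER line in the nodm defaults file; the function name set_nodm_user and the /mnt/emmc mount point in the note below are made up for illustration.

```shell
# Set the auto-login user in a nodm defaults file, keeping a backup copy.
# Assumption: the file contains a line of the form NODM_USER=<name>.
set_nodm_user() {
    file=$1
    user=$2
    [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
    # Keep the previous version next to the original.
    cp -p "$file" "$file.bak"
    # Rewrite only the NODM_USER line; every other setting is left untouched.
    sed "s/^NODM_USER=.*/NODM_USER=$user/" "$file.bak" > "$file"
    # Show the resulting line so the change can be checked by eye.
    grep '^NODM_USER=' "$file"
}
```

With the eMMC root filesystem mounted on a laptop at, say, /mnt/emmc, this would be called as `set_nodm_user /mnt/emmc/etc/default/nodm root`.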

-

Right ... I think I can recreate the problem 100% by simply loading the Armbian Forum in chromium.

I have tried for 30 min to create the same OS lockup using Firefox with no problems whatsoever. Indeed, video on sites like BBC News plays flawlessly.

So it looks like the issue is specific to the default Chromium install of Armbian.

I had overlooked Chromium as the cause simply because it looked like a HW video driver glitch: the entire desktop would lock up and the mouse cursor would typically not even move.

But bingo, 30 seconds after I loaded Chromium I went straight into the Armbian Forum and it locked up on the default forum page.

It did not used to be this reproducible ... I avoided media sites and generally I could get on with coding before a crash/lock would occur.

I am not sure what has made the environment so much more unstable and the crash so easy to reproduce, as I apt update && apt upgrade several times a day.

Is there a way I can run Chromium in debug mode and get it not to delete the log files when I have to hard reboot the device?
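One possible approach, sketched under assumptions: wrap the browser in a small function that appends every output line to a file on disk and flushes after each line, so that most of the log is still there after a hard reset. The function name run_logged and the log path are hypothetical; --enable-logging=stderr and --v=1 are Chromium's own logging switches, and the binary is assumed to be called chromium-browser.

```shell
# Run a command and append its stdout and stderr to a log file line by line,
# flushing filesystem buffers after every line so the tail of the log has a
# chance of surviving a hard reset. Slow, but only meant for debugging.
run_logged() {
    log=$1
    shift
    "$@" 2>&1 | while IFS= read -r line || [ -n "$line" ]; do
        printf '%s\n' "$line" >> "$log"
        sync
    done
}
```

Usage would look like `run_logged "$HOME/chromium-debug.log" chromium-browser --enable-logging=stderr --v=1`. The log file should live on real storage rather than a RAM-backed directory, otherwise it is lost on reset anyway.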

-

So far so good with firefox .... I'll start loading media sites and see if I can replicate the crash.

If I can't in Firefox, I will do the same with Chromium and see if it's a Chromium-specific issue.

-

I have had these lockup issues ever since I built the cluster in Dec 2018.

I initially thought it was a power issue (I was seeing some behaviour that suggested power was a problem).

However since then I have resolved / upgraded my power source and eliminated that instability.

When I installed the base Armbian image I did follow some instructions to install some lib-mali drivers.

I suspected that may have been the issue, and I associated the crashes with trying to run video in a browser, so I avoided that.

However, now more than ever I am seeing lockups just loading a browser and going to the Armbian forum.

I will try to install firefox and see if that has similar problems.

-

I have a video but it's 320 MB in size so it won't upload.

-

Nope .... I rebooted ... went straight into the Armbian forum using the default Chromium browser and the OS/HW locked up and froze :-(

-

I had to transfer the script to a USB stick on a laptop then transfer it to the RockPro64 because the browser kept locking up when trying to point Chromium to the armbian media script site. I selected the defaults of System, MPV and Gstreamer from the install CLI. I then opted for the Armsoc fullscreen vsync.

Tonight's development is a write-off so I will start again tomorrow.

Thanks for all your help ... fingers crossed I can stop the desktop from locking up.

It's only been this bad recently, since the distro upgrades (I upgrade daily).

Normally the forums are fine and I avoid sites with media content (video always locks up), but recently even the Armbian forum causes the RockPro64 OS/HW to lock up.

The install script threw errors (see attached)

I will do an apt autoremove, reboot

and report back if stability improves

-

I am using the web browser default (chromium) that came with Armbian Bionic Desktop 18.04.2 LTS kernel 4.4.174 Armbian 7.53

I am trying to install the media script but the browser keeps hanging the HW\OS.

I will give wget\curl a try

-

Is it just me? Every time I start to use the Armbian web browser, including simply loading this forum, the whole RockPro64 OS locks up and I have to reset.

Typically it's when I load media web sites like the BBC ... but it even occurs when I load this forum!!

I am trying to develop a C GPIO library to help the RockPro64 community, but it's hopeless. I have to work on the console and web browse on a laptop.

I have ordered a KVM to help but this is really frustrating.

Because the HW locks up I cannot detect any issues in the logs and I don't know how to set up a HW trace in the graphics drivers.

I have eliminated any power issues. I have a Pico cluster case and a decent heatsink ... I have ordered some heatsink fans, but the CPU is in the 40s deg C.

Any ideas how I find out why the HW just freezes?

It must have something to do with the Mali drivers?

Any ideas welcome

-

Right, PicoCluster kindly sent me a free replacement power supply rated at 12 V.

I checked the power circuitry doc [pfry] posted and it does say 12 V input, but all that happens is that it has 2 x buck converters to step the voltage down to 2 x 5.1 V and 1 x 5 V rails.

The buck converters are SY8113-B and can operate from 4.5-18 V delivering 3 A, which explains why the 5 V PSU was working.

It also appeared that the one cable from PicoCluster I did not re-crimp had a loose connection that was causing power loss if the wiring loom was moved.

So I do not know whether it was the wiring loom failing to deliver enough current or the PSU not being able to drive the buck converters to deliver enough current to the USB 5.1 V rail.

@v5 those buck converters are 96% efficient so it's hard to think that the difference between 5.1 V and 5.0 V would be significant enough to stop the unit booting reliably with a USB keyboard and mouse in place. So my hunch was that the root cause was a dodgy wiring loom causing current drop-off under boot load.

I rewired the entire loom again, this time with heat shrink crimp connectors to firmly hold the cables in place, and soldered the wires before crimping (I know it's bad practice). Now everything boots perfectly!

The 10 x RockPro64 cluster case + 2 x switches + 2 fans draws a peak of 60 watts on boot and 30 watts running unloaded.

It also helped me find the real cause of why the master node did not boot into the desktop ........

... it looks like the library libEGL.so.1 was missing, preventing nodm from starting!!!!

I had to force reinstall the libegl1 package to get the libs back and it now boots into the desktop fine ... so somehow an apt-get upgrade must have crashed!!

I still have two nodes power cycling ... but at least I can check them out now knowing that it's not the power supply/cabling, and I can also focus on getting the NVMe drive working :-)

Happy Days!

-

Thanks for your help .... Good news ... I sorted out the issues with PicoCluster, they are sending me a 100w 12v Unit with a StepDown converter (to feed the fans) free of charge.

At least then I can get the PCIe NVMe working ... I was thinking it was the image all the time, when in fact it was the power.

100 W is still on the low side as it also feeds the 2 switches ... so you are looking at roughly 0.8 A each, shared across all devices, at peak :-0
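The arithmetic behind that figure, as a small sketch (the function name amps_per_device is made up for illustration; it just divides the supply's wattage by the rail voltage and the number of devices sharing it):

```shell
# Current available per device from a shared supply:
#   amps = watts / volts / number_of_devices
amps_per_device() {
    awk -v w="$1" -v v="$2" -v n="$3" 'BEGIN { printf "%.2f\n", w / v / n }'
}
```

`amps_per_device 100 12 10` prints 0.83 for the ten boards alone, and `amps_per_device 100 12 12` prints 0.69 once the two switches are counted as well.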

But it will at least get me developing / testing and I can keep an eye out when I start to load the platform.

I will also get my scope & multimeters out and monitor the current draw on each 12v rail on the old and new PSU

-

It turned out that armbian-config, when installing the BETA version 4.20.0-rockchip64, seems to change the naming convention for where (what I assume is) the firmware lives

from /boot/dtb-(version)-rockchip64

to /boot/rockchip64

and the old mainline kernel was deleted, so although the kernel image was there and symlinked, there was nothing under

/boot/dtb (symlink to)

/boot/dtb-4.4.167-rockchip64

I populated the missing directories from a working node and the eMMC was able to bootstrap the device.
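A rough sketch of how this state could be spotted on a mounted boot directory: list every symlink that either points at nothing or points at an empty directory. The function name check_boot_links is hypothetical, and this only checks the links themselves, not whether the files behind them are the right ones for the kernel.

```shell
# Report symlinks in a boot directory that are dangling or that point at an
# empty directory. Returns non-zero if any such link is found.
check_boot_links() {
    boot=${1:-/boot}
    status=0
    for link in "$boot"/*; do
        [ -L "$link" ] || continue
        if [ ! -e "$link" ]; then
            # Target does not exist at all.
            echo "dangling: $link -> $(readlink "$link")"
            status=1
        elif [ -d "$link" ] && [ -z "$(ls -A "$link/")" ]; then
            # Target directory exists but has nothing in it.
            echo "empty: $link -> $(readlink "$link")"
            status=1
        fi
    done
    return $status
}
```

On a disk mounted on another machine it would be pointed at the mounted copy, for example `check_boot_links /mnt/emmc/boot` (mount point assumed).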

It would be good to know why the deb package touched /boot/.next, however.

-

debian postinst code;

#!/bin/bash

# Pass maintainer script parameters to hook scripts
export DEB_MAINT_PARAMS="$*"

# Tell initramfs builder whether it's wanted
export INITRD=Yes

test -d /etc/kernel/postinst.d && run-parts --arg="4.20.0-rockchip64" --arg="/boot/vmlinuz-4.20.0-rockchip64" /etc/kernel/postinst.d

if [ "$(grep nand /proc/partitions)" != "" ] && [ "$(grep mmc /proc/partitions)" = "" ]; then
    mkimage -A arm -O linux -T kernel -C none -a "0x40008000" -e "0x40008000" -n "Linux kernel" -d /boot/vmlinuz-4.20.0-rockchip64 /boot/uImage > /dev/null 2>&1
    cp /boot/uImage /tmp/uImage
    sync
    mountpoint -q /boot || mount /boot
    cp /tmp/uImage /boot/uImage
    rm -f /boot/vmlinuz-4.20.0-rockchip64
else
    ln -sf vmlinuz-4.20.0-rockchip64 /boot/Image || mv /boot/vmlinuz-4.20.0-rockchip64 /boot/Image
fi

touch /boot/.next

exit 0

-

debian preinst code;

#!/bin/bash

# Pass maintainer script parameters to hook scripts
export DEB_MAINT_PARAMS="$*"

# Tell initramfs builder whether it's wanted
export INITRD=Yes

test -d /etc/kernel/preinst.d && run-parts --arg="4.20.0-rockchip64" --arg="/boot/vmlinuz-4.20.0-rockchip64" /etc/kernel/preinst.d

# exit if we are running chroot
if [ "$(stat -c %d:%i /)" != "$(stat -c %d:%i /proc/1/root/.)" ]; then exit 0; fi

check_and_unmount (){
    boot_device=$(mountpoint -d /boot)

    for file in /dev/* ; do
        CURRENT_DEVICE=$(printf "%d:%d" $(stat --printf="0x%t 0x%T" $file))
        if [[ "$CURRENT_DEVICE" = "$boot_device" ]]; then
            boot_partition=$file
            break
        fi
    done

    bootfstype=$(blkid -s TYPE -o value $boot_partition)
    if [ "$bootfstype" = "vfat" ]; then
        umount /boot
        rm -f /boot/System.map* /boot/config* /boot/vmlinuz* /boot/Image /boot/uImage
    fi
}

mountpoint -q /boot && check_and_unmount

exit 0

-

I decided to go through armbian-config for clues, to see what it does during a kernel change

... unfortunately, in function other_kernel_version () it seems to rely on Debian packages to trigger the commands I need, probably on a post-install event.

So it's back to the u-boot website.

-

Apologies, the links on how to change the Armbian boot kernel don't lead to any documentation.

I am wading through the u-boot documentation tree now, but somebody may have an answer to a simple question.

I managed to make my device unbootable by using armbian-config and selecting a development kernel, vmlinuz-4.20.0-rockchip64.

I have mounted the disk on an Ubuntu laptop, and I take it u-boot is similar to grub2 in that it's not just a case of changing the symlinks

Image -> vmlinuz-4.4.167-rockchip64

uInitrd -> uInitrd-4.4.167-rockchip64

and that you have to run something like grub2-mkconfig / update-grub in order to bootstrap the device?

If I find the answer from the u-boot site ... I will post the answer.

-

Hi All,

My sincere apologies ... after several late, stressful nights, the more I looked into this the more suspicious I became that the issue was power.

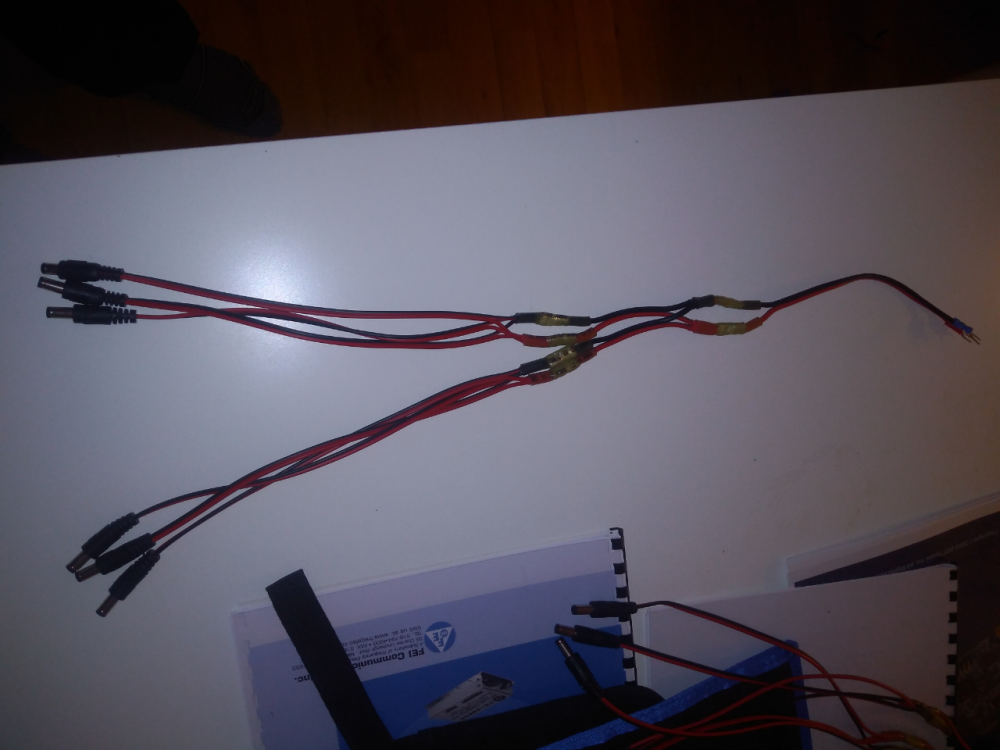

I bought the cluster from PICO and their cabling loom was appalling ... as I last posted, I have completely re-cabled the wiring loom.

(see attached ... I will redo it again when my order of heat shrink crimp connectors turns up, as the normal heat shrink will not be that reliable)

PICO had tried to force up to 4 x AWG 22 cables into an AWG 14-16 fork crimp connector.

They then tried to force 2 x forks onto a power supply screw output terminal ... not great when you are already forcing 4 wires into a crimp terminal designed for 1 !!!

It's at this point I noticed the power supply they sent with a "High Powered" RockPro64 10H Cluster unit ... 5V 18A !!!

The specs of the RockPro64 state 12 V 3 A minimum.

I think that they sent me a power supply for a 10 x Raspberry Pi cluster, not a RockPro64 !!

I have logged a support ticket with PICO ... but until I have reliable power, all these issues can be explained by PICO Cluster not doing their job.

This may also explain why I cannot get the NVMe drive working.

I will update accordingly.

-

Right,

New update ... I cannot even get the RockPro64 cluster master to boot to console with the original eMMC device on the master,

whereas previously I could at least boot to console. It could just be a dodgy eMMC chip ... but my replacement eMMC has not turned up, and that still does not explain why I could recreate the issue on SSD on Saturday or why it barfed a lot of my 10 nodes!

This is looking more and more like a HW power issue down to Pico being incompetent. The next best explanation is a fucked up board firmware issue from Pine.

It has to be related to power draw on GPU (Mali) initialization or a barfed eMMC unit ... but then why did all my 10 units choke ???

It has to be power ... the Pine board designers cannot be complete morons!

-

Well, I am stumped ... I cannot recreate the problem even after an "apt update" & "apt upgrade", but I did this twice on Saturday and it knocked out all my 10 devices! I am not a moron ... I do my due diligence ... I was able to recreate it (admittedly on an SD card, not an eMMC, on just my master).

This means that either the upgrade packages have been changed since Saturday or it's a spurious temperature/power related issue.

I have not been happy with these RockPro64 devices' inconsistent behaviour on power cycle, and I bought them all in one batch.

I have had issues before with vendors not testing kit in hotter / colder climates (Mellanox being one), so I'd suspect power before a device defect.

I still have one unit constantly power cycling, though.

I will swap out the power supply next: if the Pico cluster guys cannot even get their cabling correct, how can I trust their power supply choice!

Thanks for your help, and I apologise if I have wasted your valuable time.

-

interesting ... init 6 ... the device stopped pinging but did not reboot. I had to do a physical reset.

Then it booted successfully into the desktop.

I did the "apt update" & "apt upgrade" to install the remaining 4 packages

again the device stops pinging but does not reboot. I have to do a physical reset to get the device to boot.

but the device now boots into desktop OK.... I now cannot recreate the problem I could at the weekend!

So this time I repeat the process above again from scratch ... but this time I do an "apt update" & "apt upgrade" on the successful 1st boot of a newly flashed image instead of an "apt-get update" and "apt-get upgrade"

However the device will not boot the new flashed SD Card until I physically pull the power cable out and put it in again. i.e. the power off and reset buttons do not cause the device to restart and boot from the newly flashed SD Card.

This is not a bodged cluster project ... its a Pico cluster 10!

Having said that, I have been suspicious of their power supply; its quality is abysmal ... I had to re-crimp and re-cable their shoddy work. One theory was that the power draw when the GPU kicks in was causing instability. However, I thought I had ruled out a HW power issue by testing the master RockPro64 with a dedicated external power supply, and I was still able to recreate the problem. But this could have been "after" I broke the installation with armbian-config, which replaced nodm with lightdm and totally screwed the boot image.

Anyhow I'm now upgrading with "apt update" & "apt upgrade" in one go including those 4 held back packages....lets see how this goes.

If it still works after this, maybe this has been a power issue all along ... I only replaced 50% of Pico Cluster's shoddy cabling work (they are using automotive crimp fork connectors and trying to shove up to 3-4 AWG 22 cables into an AWG 14-16 fork connector (morons)).

I have noticed one of the devices constantly rebooting and the inconsistency of the masters behaviour ....

-

Original Image: Armbian_5.67_Rockpro64_Ubuntu_bionic_default_4.4.166_desktop on 64GB EMMC Disk.

The OS was "apt updated" on 28th Jan. When "apt updated" on the 2nd March the OS would not boot to desktop as the screen went blank.

How I recreated the problem

Same Image: Armbian_5.67 on 64GB Sandisk Ultra SD XC 1 using Pine Installer to flash.

Boot RockPro64 on SSD: Screen and Monitor working as expected ... see boot messages and Armbian file system resize (6min)

Create New User and Pass

Boots to Armbian desktop OK.

console as root: apt-get update, apt-get upgrade

reboot

server comes up, available to ssh into. However, while the display manager tries to boot into the desktop it hangs on a black screen with "_" in the top left corner.

Now I am trying to recreate the problem again ... but this time I notice that the following packages are now "held back" when they were previously installed.

This means that I probably did an "apt update" & "apt upgrade" the 1st time around.

libgl1-mesa-dri

libgtk-3-0

libwayland-egl1-mesa

netplan.io

I am waiting for the upgrade to complete and see if I can recreate the problem a second time.... will post when the upgrade is completed and attach relevant log files
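For comparing runs, a small sketch that pulls the kept-back package names out of apt's output. "apt-get upgrade" never installs new packages, so an upgrade that needs a new dependency is reported as kept back, whereas "apt upgrade" is allowed to install new packages, which fits the difference seen here. The function name kept_back is made up, and the parser assumes apt's English "The following packages have been kept back:" heading followed by indented package names.

```shell
# Read apt/apt-get upgrade output on stdin and print one kept-back package
# name per line. Assumes English messages (run apt with LC_ALL=C).
kept_back() {
    awk '
        /^The following packages have been kept back:/ { in_list = 1; next }
        in_list && /^[[:space:]]/ { for (i = 1; i <= NF; i++) print $i; next }
        { in_list = 0 }
    '
}
```

A dry run such as `LC_ALL=C apt-get -s upgrade | kept_back` lists the names without changing anything, since -s only simulates the upgrade.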

-

Ok I'll post detailed steps to recreate when I get home tonight.

It knocked out the desktops on all 10 of my RockPro64 cluster nodes when I did a salt apt update & upgrade. However, I only use the desktop on the master.

I recreated the issue on the master from a rebuild on a fresh SSD, not the original eMMC (I have a new one on order so I can test 100% like for like).

I'll post the precise images I was using.

It could just be a compatibility issue with the HP monitor; I don't have a spare to test.

Also note ... there was a red herring about lightdm ... The display manager was nodm ... lightdm was installed after the issue, by me, using armbian-config to try to fix the issue by reinstalling the desktop. In my fresh install the issue can be fixed by disconnecting the monitor after the upgrade and reconnecting it again, with nodm in place like I originally had.

FYI I tried some other distros and they were a lot slower than Armbian on the desktop. My main reason for moving away from Armbian would be my need to get the PCIe NVMe drive working on the master.

Out of all the Dists I prefer Armbian.

-

This is me done with Armbian ... my cluster has been down for months due to stability issues.

I am reformatting all my nodes with a reliable distro

sick and tired of my Armbian desktop locking and crashing

in Pine RockPro64


armbian-config does not do a good job of installing lightdm, it failed to launch lightdm on boot with errors around trying to restart the service too quickly after it initially failed to start.

I don't have time to troubleshoot somebody else's sloppy mess.

I have reverted back to nodm so I can get back to writing some code.

The display lockup when nodm boots and logs in as any other user apart from root ... is not going to be nodm's problem I suspect.

more likely an Xorg config issue \ graphics driver.

Good to know I can avoid 90% of my stability issues just by not using the default Armbian Chromium browser.

I am soon going to have to face facts and accept that I need to reformat my entire cluster with a different distro; I just cannot get Armbian stable on the RockPro64.

I have been trying for 4 months. I have these lockup issues with my master node and I have 20% of my nodes constantly restarting.

I have eliminated power issues.

I have ordered a load of heatsink fans to eliminate the last thing I can think of ... heat being the problem (despite them all having heatsinks and a Pico cluster case).

The fans are the last thing I will try before giving up on Armbian, starting from scratch, and losing all my Salt/Cassandra/Prometheus/Grafana cluster configuration.