usual user


Everything posted by usual user

-

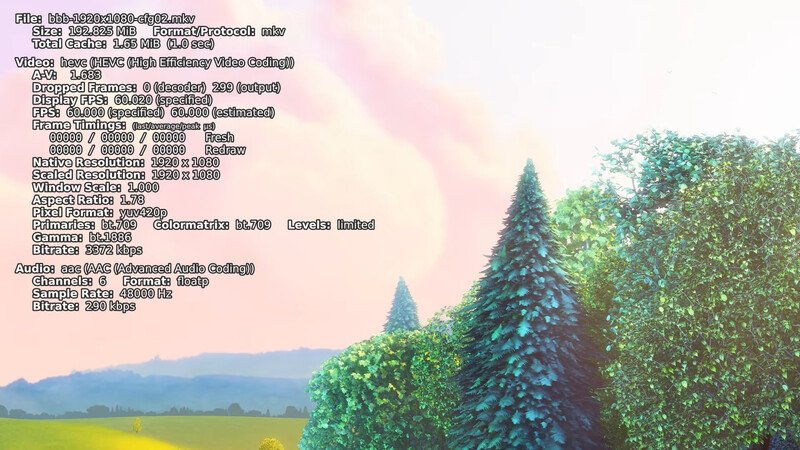

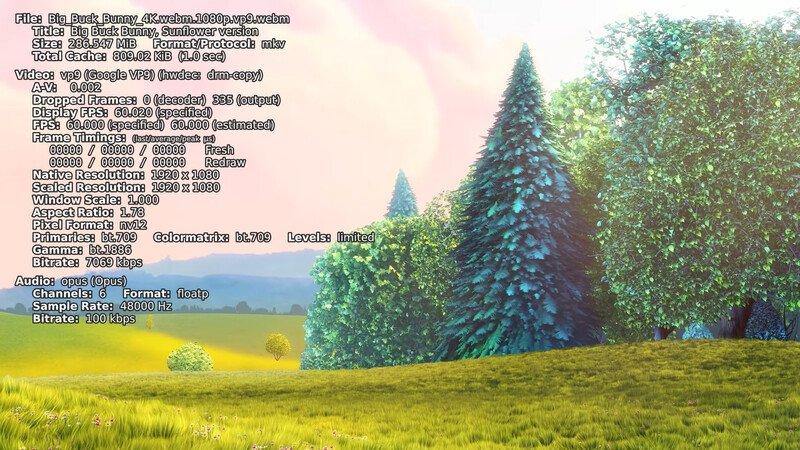

Damn, I missed the rebase of the "Draft: v4l2codecs: Implement VP9 v4l2 decoder" patches, and the source branch has been deleted. With the GStreamer main branch as of 11.12.2021 I get this video-pipeline-vp9.pdf and everything works as expected. I just discovered how to gather some video playback statistics while playing online videos. I still don't know which backend is used, but since I can play e.g. three videos in parallel, I'm pretty sure the VPU is used. The eagle has landed. \o/

-

VOP is the Video Output Processor, the term Rockchip uses for the display subsystem. Its resources are exposed through the KMS/DRM framework. The RK3399 even has two of them (vopl and vopb in the DT). On a virtual console you use it e.g. via GBM; no 3D accelerator is involved in standard video playback.

Under X Window/Wayland, the display subsystem is owned by the server, so an application must hand its video content over to the server and cannot access KMS/DRM directly. Xorg uses the modesetting driver, which emulates all 2D operations via the 3D accelerator (glamor) and passes all results via a dumb buffer to KMS/DRM. This prevents the use of the display subsystem's specific acceleration features. In the Wayland world this is a completely different story. The DRM backend in Weston and the KWin Wayland compositor at least implement the KMS/DRM API properly and make use of the available display subsystem acceleration features. Thus, video content can be played without a detour through the 3D accelerator, just as on a virtual console. Of course, the compositor still uses the 3D accelerator, if it is suitable, for all the graphical eye candy.

In the IBM PC architecture world the modesetting way is the "right thing to do" (TM), as the GPU card also provides the scan-out engine. Content uploaded to the GPU never returns to the CPU for display; scan-out is done directly in hardware on the GPU card.

I get similar, relatively rising values when XvImageSink playback starts, but since the overall value fluctuates a lot, it's difficult to tell which CPU cycles are really associated with video playback and which are attributable to other desktop activities.

-

But I still don't understand why you insist on involving the 3D accelerator in the video pipeline when the VOP can scan out video formats directly.
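Whether the VOP can scan out decoded frames directly comes down to whether one of its KMS planes lists the decoder's output pixel format. The Python sketch below illustrates that check. The fourcc encoding follows the `fourcc_code()` macro from the kernel's drm_fourcc.h; the plane names and format lists are hypothetical placeholders (the real lists come from the kernel, e.g. as printed by libdrm's modetest).

```python
"""Check whether a decoder output format can be scanned out directly by a plane.

Sketch only: the plane format lists below are hypothetical examples, not
values read from a real RK3399 VOP.
"""


def fourcc(code: str) -> int:
    """Encode a 4-character DRM format code like the fourcc_code() macro."""
    if len(code) != 4:
        raise ValueError("fourcc codes have exactly four characters")
    a, b, c, d = (ord(ch) for ch in code)
    return a | (b << 8) | (c << 16) | (d << 24)


def planes_for_direct_scanout(decoder_format: str, planes: dict[str, list[str]]) -> list[str]:
    """Return the names of planes whose format list contains decoder_format."""
    wanted = fourcc(decoder_format)
    return [
        name
        for name, formats in planes.items()
        if wanted in {fourcc(f) for f in formats}
    ]


if __name__ == "__main__":
    # Hypothetical plane capabilities; query the real ones on the target board.
    planes = {
        "plane-0": ["XR24", "AR24", "NV12"],
        "plane-1": ["XR24", "AR24"],
    }
    print(hex(fourcc("NV12")))
    print(planes_for_direct_scanout("NV12", planes))
```

If no plane advertises the decoder's format, something has to convert the frames before scan-out, and that conversion step is typically where a 3D accelerator gets pulled into the pipeline.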

-

Plays for me: video-pipeline-glimagesink.pdf. But auto-negotiation selects a better default: video-pipeline-autovideosink.pdf. What Mesa version are you running?

-

Yes, but I prefer the video pipeline that gst-play auto-negotiates, because it gives me a resizable window with window decorations that becomes semi-transparent while it is moved. If the video pipeline is identical, a misconfigured display pipeline in the compositor is probably to blame. Maybe attach the video pipeline that is negotiated with this command on GNOME:

    GST_DEBUG_DUMP_DOT_DIR=. gst-play-1.0 demo320x240.mp4.149ba2bb88584b89814a1c41b5feef77.mp4

For visualisation, convert the dot file with:

    dot -Tpdf foo.dot > foo.pdf
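When GST_DEBUG_DUMP_DOT_DIR is set, the directory can collect several .dot files, for example one per dumped state change. The Python sketch below picks the most recently modified one and converts it with Graphviz's `dot -Tpdf <file> -o <file>.pdf`. Treating the newest dump as the fully negotiated pipeline is a simplifying assumption, and the helper names are hypothetical.

```python
"""Convert the newest GStreamer pipeline dump in a directory to PDF.

Sketch: assumes Graphviz's `dot` is installed and that the newest .dot file
is the one of interest.
"""
import subprocess
from pathlib import Path


def newest_dot_file(directory: Path) -> Path:
    """Return the most recently modified *.dot file in directory."""
    dumps = sorted(directory.glob("*.dot"), key=lambda p: p.stat().st_mtime)
    if not dumps:
        raise FileNotFoundError(f"no .dot files in {directory}")
    return dumps[-1]


def dot_to_pdf_command(dot_file: Path) -> list[str]:
    """Build the Graphviz command that writes <name>.pdf next to the dot file."""
    return ["dot", "-Tpdf", str(dot_file), "-o", str(dot_file.with_suffix(".pdf"))]


def convert_newest(directory: Path) -> Path:
    """Run dot on the newest dump and return the path of the written PDF."""
    cmd = dot_to_pdf_command(newest_dot_file(directory))
    subprocess.run(cmd, check=True)  # raises CalledProcessError if dot fails
    return Path(cmd[-1])
```

For instance, after running the gst-play-1.0 command in the current directory, `convert_newest(Path("."))` writes the PDF next to the newest dump.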

-

This one is playing: video-pipeline.pdf

-

I don't know whether you run GNOME with the X Window or the Wayland backend. With the X Window backend I run LXQt, and with the Wayland backend I use Plasma. These are the video resources my current kernel exposes: videoX-infos.txt. Of course, HEVC support is missing because the LE patch cannot be applied.
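A list like videoX-infos.txt can be gathered from sysfs: every V4L2 video node appears under /sys/class/video4linux/videoN with a `name` attribute holding the device name reported by the driver. The Python sketch below reads those names; the sysfs root is a parameter so it can be pointed at a test directory, and which devices show up depends entirely on the kernel, its patches and the devicetree.

```python
"""List V4L2 video nodes and their names as exposed in sysfs."""
from pathlib import Path


def v4l2_devices(sysfs_root: Path = Path("/sys/class/video4linux")) -> dict[str, str]:
    """Map node names (video0, video1, ...) to their 'name' attribute."""
    devices: dict[str, str] = {}
    if not sysfs_root.is_dir():
        return devices  # no V4L2 devices (or not running on Linux)
    # Sort video2 before video10 by comparing name length first.
    for entry in sorted(sysfs_root.iterdir(), key=lambda p: (len(p.name), p.name)):
        name_file = entry / "name"
        if entry.name.startswith("video") and name_file.is_file():
            devices[entry.name] = name_file.read_text().strip()
    return devices


if __name__ == "__main__":
    for node, name in v4l2_devices().items():
        print(f"/dev/{node}: {name}")
```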

-

As expected, no valid video-pipeline.pdf is created:

    [plasma@trial-01 video]$ gst-play-1.0 --use-playbin3 --videosink="glimagesink" demo320x240.mp4.149ba2bb88584b89814a1c41b5feef77.mp4
    Press 'k' to see a list of keyboard shortcuts.
    Now playing /home/plasma/workbench/video/demo320x240.mp4.149ba2bb88584b89814a1c41b5feef77.mp4
    ERROR Driver did not report framing and start code method. for file:///home/plasma/workbench/video/demo320x240.mp4.149ba2bb88584b89814a1c41b5feef77.mp4
    ERROR debug information: ../sys/v4l2codecs/gstv4l2codech264dec.c(212): gst_v4l2_codec_h264_dec_open (): /GstPlayBin3:playbin/GstURIDecodeBin3:uridecodebin3-0/GstDecodebin3:decodebin3-0/v4l2slh264dec:v4l2slh264dec0:
    gst_v4l2_decoder_get_controls() failed: Invalid argument
    Reached end of play list.

In the Arm world, with a Wayland environment, using a mem-to-mem 3D accelerator in the video pipeline is a bad idea.

-

Plays flawlessly for me with this video-pipeline.pdf.

-

hevc-ctrl.h does not exist, but the two hevc-ctrls.h files are identical apart from whitespace on one empty line:

    --- hevc-ctrls.h-kernel 2021-09-08 20:51:20.802970171 +0200
    +++ hevc-ctrls.h-ffmpeg 2021-10-11 23:43:54.894127584 +0200
    @@ -210,7 +210,7 @@ struct v4l2_ctrl_hevc_slice_params {
     __u16 short_term_ref_pic_set_size;
     __u16 long_term_ref_pic_set_size;
    -
    +
     __u32 num_entry_point_offsets;
     __u32 entry_point_offset_minus1[256];
     __u8 padding[8];
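The only hunk in that diff removes and re-adds a line that looks empty in both files, so the difference is whitespace only. A small Python sketch can confirm this mechanically by comparing the files with trailing whitespace stripped from every line; the script name in the comment is hypothetical.

```python
"""Check whether two text files differ only in trailing whitespace."""
import sys
from pathlib import Path


def normalized_lines(text: str) -> list[str]:
    """Split text into lines with trailing spaces and tabs removed."""
    return [line.rstrip() for line in text.splitlines()]


def same_except_trailing_whitespace(a: str, b: str) -> bool:
    """True if both texts match line by line once trailing whitespace is ignored."""
    return normalized_lines(a) == normalized_lines(b)


if __name__ == "__main__" and len(sys.argv) == 3:
    # e.g. python3 wscompare.py hevc-ctrls.h-kernel hevc-ctrls.h-ffmpeg
    left, right = (Path(p).read_text() for p in sys.argv[1:])
    if same_except_trailing_whitespace(left, right):
        print("identical apart from trailing whitespace")
    else:
        print("files differ")
```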

-

I have been using these patches since I built 5.15.0. I switched to the 5.17.0 preview to see what will still be missing when it comes out. AFAICS I only have to wait for a rebase of "Draft: v4l2codecs: Implement VP9 v4l2 decoder" for GStreamer and of LE 0000-linux-2001-v4l-wip-rkvdec-hevc.patch for the kernel to have all the basics in place for further experiments. For now only mpv works to some extent.

-

VP9 support has just landed in media staging, i.e. it is in flight for 5.17.0. I moved to 5.16.0-rc2 with the media staging patches applied. The LE patches 0000-linux-0011-v4l2-from-list.patch and 0000-linux-1001-v4l2-rockchip.patch were also applied after resolving small merge conflicts or dropping already applied commits. 0000-linux-2001-v4l-wip-rkvdec-hevc.patch can no longer be applied; I will wait patiently until LE rebases. I'm not even sure whether HEVC works at all, because with 5.15.0 and the patch, mpv does not pick drm-copy as it does for VP9.

-

If I read the specification of the MFC-L2710DW correctly, it supports IPP (AirPrint). Therefore, IPP Everywhere is what to look for.

-

https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/tree/Documentation/admin-guide/kernel-parameters.txt

-

If I read the specification of the HL-L3230CDW correctly, it supports IPP (AirPrint). Therefore, IPP Everywhere is what to look for.

-

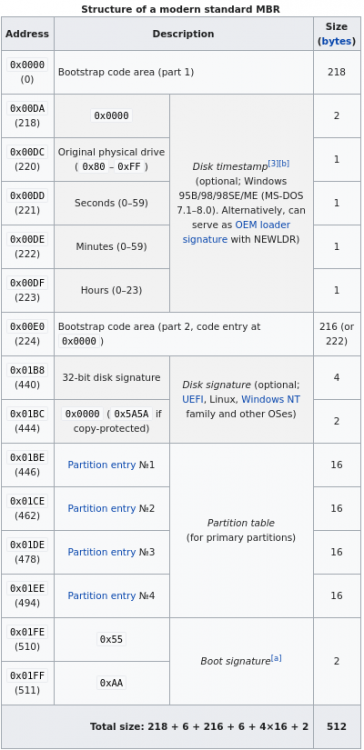

It looks like the existing boot firmware on the eMMC, for whatever reason, does not tolerate a valid MSDOS MBR on the eMMC. If that is the case, you can of course replace the existing firmware with a suitable one, but since a working solution has already been found, it is certainly easier to use that.

-

AFAICS you have inserted a populated partition table. Maybe the boot firmware now changes the boot flow according to these values. To confirm whether the mere presence of an MSDOS MBR already triggers this, just add the boot signature "55AA" to the last two bytes of the sector. If booting still does not work with an otherwise empty partition table, no MSDOS MBR can be used unless the existing boot firmware is also adapted.
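Adding only the boot signature takes a few lines of Python: the sketch below writes the bytes 0x55 and 0xAA at offsets 510 and 511 of the first 512-byte sector and leaves every other byte untouched. The image file name is a hypothetical placeholder; it is safer to try this on a copy of an eMMC image than on the live device.

```python
"""Stamp the MBR boot signature (0x55, 0xAA) into sector 0 of a disk image."""
from pathlib import Path

SECTOR_SIZE = 512
SIGNATURE_OFFSET = 510          # last two bytes of the first sector
BOOT_SIGNATURE = b"\x55\xAA"


def add_boot_signature(image: Path) -> None:
    """Write the signature in place; all other bytes of the image are kept."""
    with image.open("r+b") as f:
        sector = f.read(SECTOR_SIZE)
        if len(sector) < SECTOR_SIZE:
            raise ValueError(f"{image} is smaller than one sector")
        f.seek(SIGNATURE_OFFSET)
        f.write(BOOT_SIGNATURE)


def has_boot_signature(image: Path) -> bool:
    """True if bytes 510-511 of the image hold 0x55 0xAA."""
    with image.open("rb") as f:
        f.seek(SIGNATURE_OFFSET)
        return f.read(2) == BOOT_SIGNATURE


# Usage (hypothetical file name, work on a copy of the eMMC image):
# add_boot_signature(Path("emmc-copy.img"))
```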

-

The first 440 bytes of the MBR are used for bootstrap executable code. The data relevant for partition management starts at 0x01B8 and extends to 0x01FF. Tools that create MSDOS master boot records usually insert code into the bootstrap code area that is suitable for PC BIOS use, i.e. useless for Arm systems. Regardless of what you put in the first 440 bytes of the MBR, you still have a valid MBR with partition table entries. Therefore, only the last 72 bytes of the first sector have to be preserved.
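The layout described above can be illustrated in Python. Following the classic MBR layout, 0x1B8 to 0x1BD hold the disk signature and two normally zero bytes, 0x1BE to 0x1FD hold four 16-byte partition entries, and 0x1FE to 0x1FF hold the boot signature. The sketch overwrites the 440-byte bootstrap area with arbitrary bytes and parses the partition entries, which come out unchanged; the function names and the example partition in the test are hypothetical.

```python
"""Replace MBR bootstrap code while keeping the partition data intact."""
import struct

SECTOR_SIZE = 512
BOOTSTRAP_SIZE = 440             # 0x000-0x1B7: bootstrap code
PARTITION_TABLE_OFFSET = 0x1BE   # four 16-byte entries
SIGNATURE = b"\x55\xAA"          # bytes 0x1FE-0x1FF
ENTRY_FORMAT = "<B3sB3sII"       # status, CHS first, type, CHS last, first LBA, count


def replace_bootstrap(sector: bytes, code: bytes) -> bytes:
    """Return a copy of sector with new bootstrap code; bytes 0x1B8-0x1FF are kept."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("expected exactly one 512-byte sector")
    if len(code) > BOOTSTRAP_SIZE:
        raise ValueError("bootstrap code must fit into 440 bytes")
    return code.ljust(BOOTSTRAP_SIZE, b"\x00") + sector[BOOTSTRAP_SIZE:]


def partition_entries(sector: bytes) -> list[tuple[int, int, int, int]]:
    """Parse non-empty entries as (status, type, first LBA, sector count)."""
    if sector[510:512] != SIGNATURE:
        raise ValueError("no MBR boot signature")
    entries = []
    for i in range(4):
        start = PARTITION_TABLE_OFFSET + 16 * i
        status, _chs_first, ptype, _chs_last, lba, count = struct.unpack(
            ENTRY_FORMAT, sector[start:start + 16]
        )
        if ptype != 0:  # type 0x00 marks an unused entry
            entries.append((status, ptype, lba, count))
    return entries
```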

-

I am running an entirely different user space, but that doesn't matter. The exact kernel version doesn't matter either. The only thing that is really important is that the components needed by the hardware are also built. dmesg tells you which components are used. Looking at the kernel config only tells you which modules are built, not whether they are really used. For example, my kernel is built with a large number of modules; with the help of an initramfs it is suitable for all devices with the aarch64 architecture. When the kernel boots, it either probes the hardware or knows via the devicetree which modules are required. The only customization I apply is to build display support and everything needed to mount the rootfs as built-in. This way I have early display support and can run without an initramfs. Everything else is loaded later as a module. To learn which components are needed, it is very helpful to have a dmesg from a running system.
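To see the difference between what the kernel config builds and what is actually in use, the Python sketch below splits a .config into built-in (=y) and modular (=m) options and separately lists the modules currently loaded according to /proc/modules. It is an illustration only: config symbols and module names do not map one-to-one, so the two lists still have to be compared by hand or against dmesg, and the sample entries are made up.

```python
"""Split a kernel .config into built-in and modular options; list loaded modules."""
import re

CONFIG_LINE = re.compile(r"^(CONFIG_\w+)=([ym])$")


def config_options(config_text: str) -> tuple[set[str], set[str]]:
    """Return (built_in, modules) option names from .config text."""
    built_in: set[str] = set()
    modules: set[str] = set()
    for line in config_text.splitlines():
        match = CONFIG_LINE.match(line.strip())
        if match:
            (built_in if match.group(2) == "y" else modules).add(match.group(1))
    return built_in, modules


def loaded_modules(proc_modules_text: str) -> set[str]:
    """Module names are the first field of every /proc/modules line."""
    return {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}


if __name__ == "__main__":
    # Illustrative sample data; on a real system read the kernel config
    # (e.g. /boot/config-<version> or /proc/config.gz, depending on the
    # distribution) and /proc/modules instead.
    sample_config = """
CONFIG_DRM_ROCKCHIP=y
CONFIG_ROCKCHIP_DW_HDMI=y
CONFIG_VIDEO_HANTRO=m
# CONFIG_DRM_LIMA is not set
"""
    sample_modules = "hantro_vpu 172032 0 - Live 0x0000000000000000\n"
    built_in, modular = config_options(sample_config)
    print("built in:", sorted(built_in))
    print("modules:", sorted(modular))
    print("loaded:", sorted(loaded_modules(sample_modules)))
```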

-

I run my NanoPC-T4 as a full-fledged desktop with all the bells and whistles. I have uploaded my dmesg so you have a reference for which messages to expect. Display support requires more components than just those with HDMI in their name. Those messages come from user space trying to load kernel modules; they are expected when the modules are built in or have already been loaded by the kernel.

dmesg

-

Trying to learn more about u-boot for amlogic devices.

usual user replied to Sameer9793's topic in General Chat

https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/tree/Documentation/admin-guide/kernel-parameters.txt
https://source.denx.de/u-boot/u-boot/-/blob/master/doc/README.distro

-

CSC Armbian for RK3318/RK3328 TV box boards

usual user replied to jock's topic in Rockchip CPU Boxes

Why not start then? @jock has announced new versions and the links on the first page are already up.

-


I don't know what requirements Kivy places on the graphics stack, but since Panfrost achieves OpenGL ES 3.1 conformance on Mali-G52, it certainly makes sense to read this to understand what to expect from a lima-driven GPU. I still remember very well the times when my GPU only reached almost 2.0 and the performance was quite moderate. Now, with 3.1, it makes a big difference for daily desktop use, even though my GPU isn't yet declared fully conformant. But since this is bleeding-edge development, at least the current Mesa mainline release is required in any case, if not even the main branch to use just-implemented extensions. Kernel-driver-wise, everything necessary should already be available, so Mesa is where to look for GPU support improvements.

-

Thank you for sharing the schedule. But no hurry, my daily use cases are already working anyway. I'm only doing this for my own education and as a preview of features that may land in mainline. For me, it's the other way around: hwdec=drm yields high CPU utilisation for bbb_sunflower_2160p_30fps_normal.mp4, and hwdec=drm-copy yields low CPU utilisation. As the values fluctuate heavily, it is difficult to provide absolute numbers, but here are some rough values.

Plasma desktop with Wayland backend:

| Player / command | bbb_sunflower_1080p_30fps_normal.mp4 | bbb_sunflower_2160p_30fps_normal.mp4 |
|---|---|---|
| gst-play-1.0 | ~35% | ~35% |
| mpv --hwdec=none --hwdec-codecs=all | ~40% | ~98%, playing slow, sound out of sync |
| mpv --hwdec=drm --hwdec-codecs=all | ~38% | ~98%, playing jerky, sound out of sync |
| mpv --hwdec=drm-copy --hwdec-codecs=all | ~28% | ~34%, playing slow, sound out of sync |

Desktop CPU usage fluctuates around 8 to 15% without playing a video, so it's uncertain which CPU cycles are really associated with video playback. The utilisation is always distributed almost evenly over all cores. Frequency scaling is not considered here.

For reference, here are the values for the LXQt desktop with the native Xorg backend:

| Player / command | bbb_sunflower_1080p_30fps_normal.mp4 | bbb_sunflower_2160p_30fps_normal.mp4 |
|---|---|---|
| gst-play-1.0 | ~25% | ~18%, playing as a slideshow |
| mpv --hwdec=none --hwdec-codecs=all | ~30% | ~98%, playing slow, sound out of sync |
| mpv --hwdec=drm --hwdec-codecs=all | ~28% | ~98%, playing jerky, sound out of sync |
| mpv --hwdec=drm-copy --hwdec-codecs=all | ~18% | ~22%, playing slow, sound out of sync |

Conclusion: The VPU decoder is not the bottleneck; the challenge is setting up an efficient video pipeline with proper interaction between the several hardware accelerators involved. For X Window with the modesetting driver this does not seem to be really possible.
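Rough utilisation figures like the ones above can be derived from two samples of /proc/stat taken a few seconds apart: for each `cpu` line, the busy share is the change in non-idle jiffies divided by the change in total jiffies. The Python sketch below implements that arithmetic. Counting iowait as idle and leaving out the guest columns (they are already contained in user/nice) are choices of this sketch, and it is not necessarily how the figures in this post were measured.

```python
"""Per-CPU utilisation from two /proc/stat samples."""
import time


def parse_cpu_times(stat_text: str) -> dict[str, tuple[int, int]]:
    """Map 'cpu', 'cpu0', ... to (idle, total) jiffies."""
    times = {}
    for line in stat_text.splitlines():
        fields = line.split()
        if not fields or not fields[0].startswith("cpu"):
            continue
        # user nice system idle iowait irq softirq steal [guest guest_nice]
        values = [int(v) for v in fields[1:9]]
        idle = values[3] + values[4]              # idle + iowait
        times[fields[0]] = (idle, sum(values))    # guest time is already in user/nice
    return times


def utilisation(before: str, after: str) -> dict[str, float]:
    """Busy percentage per CPU line between two samples."""
    first, second = parse_cpu_times(before), parse_cpu_times(after)
    result = {}
    for cpu, (idle1, total1) in second.items():
        idle0, total0 = first[cpu]
        elapsed = total1 - total0
        result[cpu] = 0.0 if elapsed == 0 else 100.0 * (1 - (idle1 - idle0) / elapsed)
    return result


def sample(interval: float = 2.0) -> dict[str, float]:
    """Read /proc/stat twice, interval seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        before = f.read()
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = f.read()
    return utilisation(before, after)
```

Sampling once with no video playing and once during playback gives a rough idea of the desktop baseline, although, as noted above, the fluctuation makes any attribution to video playback uncertain.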

-

Thank you for the offer @jock. But for 5.14.0 I had all VPU-relevant LE patches and even the DRM ones applied. With the GStreamer framework it works, even for bbb_sunflower_2160p_30fps_normal.mp4, without any problem. It is mpv that does not seem to be able to cope with the high data rate. Using --hwdec=drm-copy makes the CPU utilisation ramp-up go away and the video plays smoothly, but too slowly, and the sound gets out of sync. I don't think I am missing anything kernel-wise. I just tried to drop 2000-v4l-wip-rkvdec-vp9 in favour of this more recent patchset. Resolving a merge conflict to apply the LE patches on top was easy: But the kernel build drops out with this: So I will stay with the status quo of LE patches on 5.15.0-rc1 for now and wait until they rebase. VPU-wise everything from LE is in place and seems to work.