Posts posted by tkaiser

-

-

On 9/30/2018 at 9:46 AM, hjc said:

I remember that once in a YouTube interview video it is said that one of these Ethernet ports are for out of band management. Don't know if that's accurate, though. It is mentioned that the LTE (mPCIe) port is also for the management system, so remote access of the serial console is possible

Yeah, if I understood correctly the M.2 slot is there to fit the 'Baseboard Management Controller' (BMC): an MCU that then controls power and has serial console access to RK3399 while also being interconnected to a WWAN modem in the mPCIe slot. Well, we'll see once documentation becomes publicly available.

In the meantime I had a start with vendor provided Ubuntu 16.04.3 'server' OS image (download link, logon credentials are rock:rock, the archive also contains a BLOB called rk3399_loader_v1.12.112.bin). I just checked some tunables (e.g. /sys/module/pcie_aspm/parameters/policy defaulting to powersave) and let sbc-bench run for the following two reasons:

- getting a 'baseline' so when switching over to Armbian being able to spot areas where Armbian might perform worse than vendor OS image (especially tinymembench numbers interesting here)

- getting an early idea about heat dissipation. The board does not come with a heatsink and there are also no mounting holes. On the other hand the board size is huge and the whole board acts as a heatsink itself
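Checking the ASPM tunable mentioned above can be sketched as follows. The kernel lists all available policies in that sysfs file and puts the active one in square brackets; the helper name `aspm_active_policy` is made up for this example.

```shell
#!/bin/sh
# Print the active PCIe ASPM policy. The kernel marks the active entry with
# square brackets, e.g. "default performance [powersave] powersupersave".
aspm_active_policy() {
    # $1: contents of the policy file
    printf '%s\n' "$1" | sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

policy_file=/sys/module/pcie_aspm/parameters/policy
if [ -r "$policy_file" ]; then
    aspm_active_policy "$(cat "$policy_file")"
fi
```

On the vendor image described here this should print powersave. Writing another policy name into the same file as root switches the policy at runtime, provided the kernel allows it.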

Quick look at the results (full output):

    sbc-bench v0.6.1

    Installing needed tools. This may take some time... Done.
    Checking cpufreq OPP... Done.
    Executing tinymembench. This will take a long time... Done.
    Executing OpenSSL benchmark. This will take 3 minutes... Done.
    Executing 7-zip benchmark. This will take a long time... Done.
    Executing cpuminer. This will take 5 minutes... Done.
    Checking cpufreq OPP... Done.

    ATTENTION: Throttling might have occured on CPUs 0-3. Check the log for details.

    ATTENTION: Throttling might have occured on CPUs 4-5. Check the log for details.

    Memory performance (big.LITTLE cores measured individually):
    memcpy: 1439.4 MB/s (0.2%)
    memset: 4771.3 MB/s (0.3%)
    memcpy: 2876.7 MB/s
    memset: 4902.2 MB/s (0.5%)

    Cpuminer total scores (5 minutes execution): 6.77,6.76,6.73,6.66,6.63,6.60,6.59,6.57,6.56,6.55,6.53,6.52,6.51,6.50,6.49,6.48,6.47,6.46,6.45,6.44,6.43,6.42,6.41 kH/s

    7-zip total scores (3 consecutive runs): 5444,5300,5265

    OpenSSL results (big.LITTLE cores measured individually):
    type             16 bytes     64 bytes    256 bytes   1024 bytes   8192 bytes
    aes-128-cbc    177074.76k   473539.43k   791910.23k   984429.57k  1059487.74k
    aes-128-cbc    363947.66k   785832.70k  1179533.91k  1285858.65k  1345047.21k
    aes-192-cbc    165413.53k   407764.10k   632680.28k   752687.45k   796715.69k
    aes-192-cbc    389359.64k   802071.27k  1059886.51k  1140258.82k  1188118.53k
    aes-256-cbc    158442.61k   367502.10k   541234.60k   626816.68k   657066.67k
    aes-256-cbc    434718.62k   765007.34k   944500.74k   992573.78k  1020420.10k

    Full results uploaded to http://ix.io/1nUC. Please check the log for anomalies (e.g. swapping or throttling happenend) and otherwise share this URL.

- Throttling happened with demanding loads as expected (I'll look into this later in detail. Just as a related information: there's a 3 pin power header for a fan on the board)

- Vamrs is using the conservative 1800/1400 MHz settings for the CPU cores

- Memory performance exactly as ODROID N1 (also DDR3 equipped, little core result, big core result to compare). So memory performance is slightly lower than on those other RK3399 designs using LPDDR3 and LPDDR4 RAM

- 7-zip and cpuminer scores are irrelevant since the binaries were built with GCC 5.4 (the provided image is a Xenial)

Since I'm running off the provided 8GB SanDisk eMMC module I quickly measured the performance (amazingly fast for an 8 GB module):

                                                        random   random
          kB  reclen    write  rewrite     read   reread     read    write
      102400       4    22385    24709    43605    45137    20742    25053
      102400      16    40426    40611   115123   116118    65446    40031
      102400     512    40390    40806   260663   263373   244410    41262
      102400    1024    40824    41244   276769   281198   267166    41219
      102400   16384    41095    41109   276685   276525   277313    41240
     1024000   16384

When running the last test with 1000M test size, an interruption occurred since 'filesystem full'. The vendor provided OS image does no automatic rootfs resize. I think it's time to stop now, create a ficus.csc config and continue the tests with Armbian...

Edit: idle consumption with vendor OS image (12V/4A PSU included): 6.1W

-

2 hours ago, malvcr said:

I have it running very well now (without antivirus support that always kill the machine)

Somewhat unrelated but I really hope you're aware that with most recent Armbian release you're able to do MASSIVE memory overcommitments and performance will just slightly suck?

I've been running some SBCs for test purposes as well as some production virtualized x86 servers with 300% memory overcommitment for some time now. No problems whatsoever.

-

Latest RK3399 arrival in the lab. For now just some q&d photographs:

@wtarreau my first 96boards thing so far (just like you I felt the standard being directed towards nowhere given that there's no Ethernet). And guess what: 2 x Ethernet here!

A quick preliminary specifications list:

- RK3399 (performing identical to any other RK3399 thingy out there as long as no throttling happens)

- 2 GB DDR3 RAM (in April Vamrs said they will provide 1GB, 2GB and 4GB variants for $99, $129 and $149)

- Board size is the standard 160×120 mm 96Boards EE form factor. EE = Enterprise Edition, for details download 96Boards-EE-Specification.pdf (1.1MB)

- Full size x16 PCIe slot as per EE specs (of course only x4 usable since RK3399 only provides 4 lanes at Gen2 speed)

- Board can be powered with 12V through barrel plug, 4-pin ATX plug or via pin header (Vamrs sent a 12V/4A PSU with the board)

- Serial console available via Micro USB (there's an onboard FTDI chip)

- 2 SATA ports + 2 SATA power ports (5V/12V). SATA is provided by a JMS561 USB3 SATA bridge that can operate in some silly RAID modes or PM mode (with spinning rust this chip is totally sufficient -- for SSDs better use NVMe/PCIe)

- Socketed eMMC and mechanical SD card adapter available (Vamrs sent also a SanDisk 8GB eMMC module as can be seen on the pictures)

- SIM card slot on the lower PCB side to be combined with an USB based WWAN modem in the mPCIe slot (USB2 only)

- 1 x SD card slot routed to RK3399, 1 x SD card slot for the BMC (Baseboard Management Controller)

- Gigabit Ethernet and separate Fast Ethernet port for the BMC

- Ampak AP6354 (dual-band and dual-antenna WiFi + BT 4.1)

- USB-C port with USB3 SuperSpeed and DisplayPort available

- eDP and HDMI 2.0

- USB2 on pin headers and 2 type A receptacles all behind an internal USB2 hub

- USB3 on one pin header and 2 type A receptacles all behind an internal USB3 hub

- 96boards Low Speed Expansion connector with various interfaces exposed

- 96boards High Speed Expansion connector with various interfaces exposed (e.g. the 2nd USB2 host port, see diagram below)

- S/PDIF audio out

- 'real' on/off switch to cut power. To really power on the board the translucent button next to it needs to be pressed

-

3 hours ago, SMburn said:

Generic Amazon mSATA M.2

Please be very careful with these wordings. M.2 is not mSATA. M.2 is just a mechanical connector able to transport a bunch of different protocols. In case you have no other device suited for an M.2 SATA SSD you might want to have a look at https://jeyi.aliexpress.com/store/group/USB3-0-USB3-1-Type-C-Series/710516_511630295.html for external USB3 enclosures (to get a bulky but ultra fast 'USB pendrive'). But since a 'generic' 60 GB SSD will perform crappy anyway and you talked about Amazon the best you could do is to return such a thing right now.

-

3 hours ago, Faber said:

I already knew about that

Great. So you got the 'you can run FreedomBox on any computer that you can install Debian on'. Now you just need to know what Armbian is (Ubuntu or Debian userland with optimized kernel and optimized settings), then as long as you are able to accept that running an Armbian Debian flavor is in some way comparable to 'running Debian' you can start. If Armbian is not for you for whatever reason simply wait a few months or years until upstream support for recent/decent ARM hardware has arrived in Debian.

In case you choose Armbian you need to ensure to freeze kernel and u-boot upgrades since otherwise you use a stable distro on an unstable basis (it seems stuff like this needs to happen from time to time for reasons unknown to me).
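A minimal sketch of such a freeze using plain `apt-mark hold`. The package name patterns (`linux-image-*`, `linux-dtb-*`, `linux-u-boot-*`) are assumptions about how the kernel, device tree and u-boot packages are named on a given installation, and `select_boot_packages` is a made-up helper, so check the installed package list before relying on it.

```shell
#!/bin/sh
# Select kernel, device-tree and u-boot packages from a list of installed
# package names (one per line on stdin). The name patterns are assumptions;
# verify them against `dpkg -l` on your own system.
select_boot_packages() {
    grep -E '^(linux-image|linux-dtb|linux-u-boot)-' || true
}

# Hold them so that a routine `apt upgrade` leaves them untouched (needs root):
#   dpkg-query -W -f='${Package}\n' | select_boot_packages | xargs -r apt-mark hold
# Undo later with `apt-mark unhold` on the same package names.
```

Holding the packages only defers the decision: userland updates keep flowing while kernel and u-boot stay at the known working version until they are unheld on purpose.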

-

armbianmonitor -u first please...

-

10 hours ago, Faber said:

Has anyone tried installing either Freedombox or Debian on this board?

-

3 minutes ago, Jason Law said:

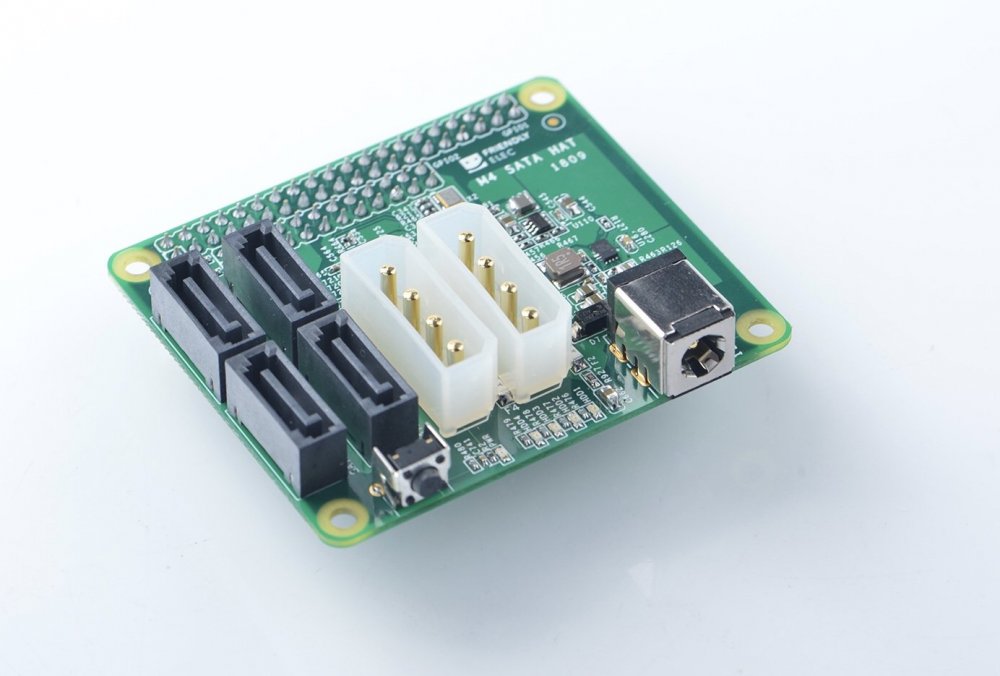

how will this connect to the M4?

It's a HAT so you simply mount it on top of the NanoPi using 4 spacers so GPIO headers make contact. Since NanoPi M4 is one of the few SBC that have the SoC on the appropriate side of the PCB (the lower one) attaching HATs does not negatively affect heat dissipation.

(still hoping that the final version of the HAT will also use a 40 pin male header so the GPIOs on this header can still be used)

-

13 minutes ago, NicoD said:

Weird numbers

Nope, everything as expected. The M4 number in the list https://github.com/ThomasKaiser/sbc-bench/blob/master/Results.md has been made with mainline kernel which shows way higher memory bandwidth on RK3399 (check the other RK3399 devices there). Cpuminer numbers differ due to GCC version (Stretch ships with 6.3, Bionic with 7.3 -- see the first three Rock64 numbers with 1400 MHz in the list -- always Stretch but two times with manually built newer GCC versions which significantly improve cpuminer performance)

If you love performance use recent software...

-

Orange Pi R2 announced to be available back in April might be ready in November: https://www.cnx-software.com/2018/09/23/realtek-rtd1296-u-boot-linux-source-code-rtd1619-cortex-a55-soc/#comment-556261

I wonder whether the board is still at $79 as announced back in April... BPi people charge $93 for their BPi-W2 but there you need to acquire a Wi-Fi/BT combo card that fits into the M.2 Key E slot separately (and by reading through this mess here choosing Bpi's own RTL8822 card seems to be the best idea -- most probably this chip is part of RealTek's reference design)

BTW: SinoVoip is about to throw out a few new and of course totally incompatible boards soon just to ensure software support situation will be the usual mess

Watch them here already: http://www.banana-pi.org


-

5 hours ago, sfx2000 said:

There was a ticket open over on ChromiumOS

The important part there is at the bottom: the need to 'NEON-ize lz4 and/or lzo' which has not happened on ARM yet, that's why lz4 outperforms lzo on x86 (SIMD instructions used) while on ARM it's the opposite.

Once some kernel developer spends some time adding NEON instructions to the in-kernel code for either lzo or lz4 (or maybe even a slower algo) I'll immediately evaluate a detection routine for this based on kernel version, since most probably the optimized algorithm will then outperform any other unoptimized algorithm that in theory should be better suited.

Missing SIMD optimizations on ARM are still a big problem, see for example https://www.cnx-software.com/2018/04/14/optimizing-jpeg-transformations-on-qualcomm-centriq-arm-servers-with-neon-instructions/

-

3 hours ago, lanefu said:

Relevant swapping occurred. Still no idea why, so I added monitoring of virtual memory settings a few hours ago. I'll look into this within the next months but it's no high priority: since there is detailed monitoring in sbc-bench, we know exactly why numbers currently differ between Orange Pi One Plus (1 GB) and PineH64 (more than 1 GB).

-

48 minutes ago, Jason Law said:

Has anyone tried a 2 or 4 port SATA controller in the M.2 slot? Does it work?

Nope and I don't think so. I wanted to try the same, did some research and ended up with an Amazon review from someone trying this with the Firefly RK3399 saying the Marvell 9235 adapter he had has some components on the bottom that make it impossible to use it on the board without shorting something. These things look like this:

And then on NanoPC-T4 there are only mounting holes for 2280 while the SATA adapters are all 2242. So unless you get a PCIe extender somewhere the M.2 slot is 'SSD only'.

Are you aware that FriendlyELEC will provide the below for NanoPi M4 soon (also solving the powering problem):

-

Ok, this is pure madness here. Won't look into this thread for some time.

@ag123 it's really nice that you're creative and able to develop theories about Linux being broken in general (breaking the system by swapping out important daemons -- I guess you also think kernel developers behave that moronic that they allow to swap out the kernel itself?). It also is nice that you ignore reality (@Moklev having reported a system freeze and not sshd being unresponsive). It also doesn't matter that much that you create out of one single report of 'freezes since some time' for reasons yet unknown a proof that there's something wrong with the new vm.swappiness default in Armbian and talk about 'related issues surfaced currently' (issues --> plural).

I simply won't read these funny stories any more since they're preventing time better spent on something useful.

Here are the download stats: https://dl.armbian.com/_download-stats/ Check when 5.60 has been released, count the number of downloads, count the reports of boards freezing/crashing in the forum and you know about 'issues surfaced currently'.

-

5 minutes ago, ag123 said:

i think for the time being selecting a vm.swappiness in a mid range between 0 to 100 seem to alleviate related issues surfaced currently

Are you trolling? Which 'related issues'? I only know of one overheating Orange Pi Zero that for whatever reasons is/was unstable. Zero useful efforts taken to diagnose this single problem other than a huge waste of time right now.

-

Let's stop here. I won't further waste my time with this 'report' (which is just a bunch of theories, assumptions and a weird methodology to back an idea).

@Moklev: I was asking for armbianmonitor -u output but got only a redacted/censored variant (the line numbers are there for a reason). I was asking for the output of 'free -m' NOW, as in 'with your vm.swappiness=30 or 60 setting'. Instead you rebooted (why?! You can adjust vm.swappiness at any time, no reboot needed).
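Changing the value on a running system is a one-liner. A small sketch, where the helper `current_swappiness` and its optional path argument are made up for illustration:

```shell
#!/bin/sh
# Read the current vm.swappiness value. The path argument exists only so the
# function can be tried against a plain file; it defaults to the real procfs node.
current_swappiness() {
    cat "${1:-/proc/sys/vm/swappiness}"
}

# Change it on the running system (as root), no reboot involved:
#   sysctl -w vm.swappiness=60
# Then capture the memory state right away:
#   free -m
```

A value set this way is lost at the next boot unless it is also written to a sysctl configuration file.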

Providing 'free -m' output directly after a reboot is pointless as you see ZERO swapping happened. And providing a log containing information from 14.36.28 until 14.53.31 is pointless too.

14 minutes ago, Moklev said:

With wm.swappiness=100: system work fine for a random time (3-12 h), then hang with ... rpi monitor web pages unreachables

Ok

On 9/26/2018 at 10:19 AM, Moklev said:

Yes, I've starting monitoring...

Yesterday you started monitoring. Now you report having switched from vm.swappiness=30 to 100. But you're able to report that with the 100 setting your board froze and even the RPi Monitor web page was not accessible. So you tested within the last 29 hours already twice with the 100 setting and were able to report your board crashing (since you talk about '3-12 h' -- if this '3-12 h' is some anecdotal story from days ago I'm not interested in it. It's only relevant what happens now with some monitoring installed that is able to provide insights).

I'm interested in resolving real problems if there are any. What happens here is developing weird theories and trying to back them. Unless I get data I won't look any further into this.

If you had RPi-Monitor running the graphs are available even after a reboot so you can share them focussing on the last hour prior to the latest crash (Cpufreq, temperature, load). Also if 'ssh unreachable, yellow ethernet led fixed on, pihole/motioneye/rpi monitor web pages unreachable' is the symptom then you are reporting that your board froze or crashed (and not that sshd stopped responding).

-

26 minutes ago, Igor said:

As you can see I manually tested most of the boards and already that was on the edge what I can do "by the way". I am not sure I will do that ever again and certainly not within the next 6 months. It's painful, time wasteful and it goes by completely unnoticed.

So what?

Armbian supports too many boards for us to handle. Nothing new.

The interesting question still is: how do we provide something that could be called 'stable'? It's simply unacceptable that a simple 'apt upgrade' bricks boards or removes basic functionality (which is not the case here now as I understand -- still wondering why there's a comment reading 'network sometimes doesn't work properly' -- but at the beginning of this year we had a bunch of such totally unnecessary issues caused by untested updates rushed out).

If it's not possible to prevent such issues (and clearly separating playground area from stable area) the only alternative is to freeze u-boot/kernel updates by default and add to documentation that users when they want to upgrade u-boot and kernel they're responsible for cloning their installation before -- good luck! -- so they can revert to a working version if something breaks.

-

2 minutes ago, Igor said:

My C2 is working without any issues: http://ix.io/1nGc

So where did your comment 'usb hotpluging and network sometimes doesn't work properly' originate from then?

I'm not sure whether you get my point: I want Armbian to be a stable distribution. I would not rely unnecessarily on either Debian or Ubuntu with all their 'outdated as hell' software packages if there weren't that great promise of 'being stable'. If I pull in updates I expect that afterwards my device still works. With the userland this is pretty much the case (upstream Debian or Ubuntu) but wrt kernel/u-boot (the Armbian provided stuff) it seems this is impossible.

Why did you write 'network sometimes doesn't work properly' in your commit message and especially why did you allow a kernel update for ODROID-C2 that is then supposed to break basic functionality? As already said: I'm fine with USB hotplug issues since USB devices connected at boot are fine for 'stable' use cases. But if network issues are to be expected I really don't get why such a kernel update is then rolled out and not skipped this time.

It's about the process! If an update is expected to break functionality then why roll it out?

Is it possible to move from playground mode to stable mode? What's the dev branch for when testing obviously also happens in the next branch?

-

17 minutes ago, ag123 said:

i think the issue about the vm.swapiness is that some of the processes, e.g. sshd after it is swapped out i.e. not resident in memory, may not respond or respond too slowly to connects over the network

WTF? Can we please stop developing weird theories but focus on what's happening (which requires a diagnostic attempt collecting some data)? Without swap it could be possible that the sshd gets quit by the oom-killer (very very very unlikely though because the memory footprint of sshd is pretty low) but if it would be possible that swapping in Linux results in daemons not responding any more or not fast enough we all would've stopped using Linux a long time ago.

And can you please try to understand that we're not talking about 'swap on crappy storage' (HDD) any more but about zram: that's just decompressing swapped out pages back into another memory area which is lightning fast compared to the old attempts with swap on HDD.
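Whether any zram swapping has happened at all can be read from `/proc/swaps`. A minimal sketch, assuming the zram devices show up there as `/dev/zramN`; the function name `zram_swap_used_kb` is made up for this example.

```shell
#!/bin/sh
# Sum the "Used" column (KiB) of all zram-backed swap devices.
# Reads /proc/swaps-formatted text from stdin:
#   Filename  Type  Size  Used  Priority
zram_swap_used_kb() {
    awk 'NR > 1 && $1 ~ /^\/dev\/zram/ { used += $4 } END { print used + 0 }'
}

if [ -r /proc/swaps ]; then
    zram_swap_used_kb < /proc/swaps
fi
```

A result of 0 means nothing has been swapped out to zram so far, which makes any theory about swapped-out daemons moot for that system.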

-

12 hours ago, lanefu said:

I bet unattended-upgrades was running in the background (you could check /var/log/dpkg.log)

But I've been wrong. The background activity was swap/zram in reality.

-

10 minutes ago, Igor said:

A network was working OK for some time and at the time of testing but it is possible that one wrong patch finds its way upstream

Seriously: I don't get this policy. But I think any more discussion is useless and Armbian just cannot be considered a stable distribution. Or at least people who are interested in stable operation need to freeze kernel and u-boot updates since otherwise updating the software means ending up with a bricked board or some necessary functionality not working any more (and based on Armbian's origin I consider fully functioning network to be the most basic requirement of all -- but I might be wrong and Armbian in the meantime focuses on display stuff trying to compete with Android)

I thought once there was a next branch available for ODROID-C2 this could be considered 'stable' (since there's still the 'dev' branch for development and testing). But it seems I've been wrong and we have no definition of 'stable' if it's possible that updates are provided that destroy functionality that has been working flawlessly before.

So by accident 'default' seems to be the only stable branch in Armbian for the simple reason no kernel development happens there.

-

25 minutes ago, ag123 said:

i think the somewhat elevated temperatures of say 70-75 deg C on H3 socs can be tolerated

Huh? Can we please stay focused here on an Armbian release upgrade and potential show-stoppers? You brought up the claim that vm.swappiness settings cause crashes without any evidence whatsoever. Let's focus now on collecting some data related to this and keep temperature babbling outside.

We all know that certain boards report wrong temperatures anyway (OPi Zero rev 1.4 for example) and the only area where this gets interesting is whether those wrongly reported temperatures cause an emergency shutdown (the kernel's cpufreq framework defines that at a specific critical temperature a shutdown is initiated). So obviously with wrong thermal readouts as we experience on some sunxi boards/platforms we get a 'denial of service' behavior under load.
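To see what the kernel believes the SoC temperature is, the thermal zone can be read directly. A rough sketch: mainline kernels report millidegrees Celsius in sysfs, and the fallback for small values is purely an assumption for kernels that report whole degrees. The zone index 0 and the helper name `to_celsius` are also just illustrative.

```shell
#!/bin/sh
# Convert a sysfs thermal reading to degrees Celsius. Mainline kernels report
# millidegrees (e.g. 71500); treating values below 1000 as whole degrees is an
# assumption made here to cope with kernels that do not.
to_celsius() {
    if [ "$1" -ge 1000 ]; then
        echo $(( $1 / 1000 ))
    else
        echo "$1"
    fi
}

zone=/sys/class/thermal/thermal_zone0
if [ -r "$zone/temp" ]; then
    echo "current: $(to_celsius "$(cat "$zone/temp")") C"
fi
```

Comparing this readout under load with an external measurement is one way to spot boards that report wrong temperatures.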

Using the wrong hardware for specific purposes is not the focus of this thread (e.g. using a board with just 512 MB for desktop linux -- that's not a 'use case', that's just plain weird)

-

14 minutes ago, Moklev said:

    zram0 0,00 0,01 0,00 4 0
    zram1 0,00 0,00 0,00 0 0

Sorry, this thing is doing nothing and especially not swapping. And I'm really not interested in what a MicroServer somewhere on this earth is doing. It's only about Armbian and the claim vm.swappiness=100 would crash your board.

So as already said, it would be great if you can provide output from the two commands I asked for now.

1 hour ago, tkaiser said:

So can you please provide output from both 'free -m' and 'armbianmonitor -u' now?

Since after switching back to vm.swappiness=100 your board is supposed to crash then afterwards it gets interesting to have a look at the two logs (submitted via pastebin.com or some similar online pasteboard service).

-

23 hours ago, Moklev said:

Yes, I've starting monitoring...

So can you please provide output from both 'free -m' and 'armbianmonitor -u' now? Once you switch back to vm.swappiness=100 the VM monitoring script should be adjusted to

    while true ; do
        echo -e "\n$(date)" >>/root/free.log
        free -m >>/root/free.log
        sync
        sleep 60
    done

(same with iostat -- also switching from 600 seconds to 60. And then the 'sync' call above is very important since with default Armbian settings we have a 600 second commit interval so last monitoring entries won't be written to disk without this sync call happening)

Quick Review of Rock960 Enterprise Edition AKA Ficus

in Reviews, Tutorials, Hardware hacks


Nope, lazy mode won (thanks to parted and resize2fs now using the eMMC's full capacity, then did do-release-upgrade to upgrade Vamrs' Xenial image to 18.04 LTS, then quickly checked partition 5 whether latest DT changes are present -- they are). Then I took two SATA SSDs and one NVMe SSD (in Pine's PCIe to M.2 adapter), threw everything in a corner, connected the stuff and fired it up again:

The huge fan is there to generate sbc-bench numbers now almost without throttling. Numbers here: http://ix.io/1nVS -- performance (not so) surprisingly identical to all other RK3399 thingies around but DDR3 memory results in slightly lower memory performance (negligible with almost every use case) and of course those RK3399 boards where we allowed 2000/1500 MHz so far show ~5% better CPU performance. The better cpuminer scores compared to RockPro64 with the same 4.4 kernel here are just due to testing with Bionic on Ficus (GCC 7.3) vs. Debian Stretch there (GCC 6.3 -- with some tasks simply updating GCC gets you whopping performance improvements for free)

Not able to test the PCIe SSD since 'rockchip-pcie f8000000.pcie: PCIe link training gen1 timeout!' (full dmesg output). I guess there's still some work needed to get DT bits correctly for PCIe? Ok, let's forget about PCIe now (there won't be anything new anyway since PCIe lives inside RK3399 so as long as trace routing on the PCB is ok this RK3399 will perform 100% identical to every other RK3399 thingy out there)

Now let's check USB situation. I'm a bit excited to have a look at the USB3 SATA implementation here since on the PCB itself there's a JMS561 controller (USB3-to-SATA bridge with support for 2 SATA ports, providing some silly RAID functionality but also PM mode. PM --> port multiplier). The two SSDs when connected with the JMS561 in PM mode appear as one device on the USB bus:

    root@rock960:~# lsusb
    Bus 004 Device 003: ID 1058:0a10 Western Digital Technologies, Inc.
    Bus 004 Device 002: ID 05e3:0616 Genesys Logic, Inc. hub
    Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
    Bus 003 Device 002: ID 05e3:0610 Genesys Logic, Inc. 4-port hub
    Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
    Bus 002 Device 001: ID 1d6b:0001 Linux Foundation 1.1 root hub
    Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
    Bus 006 Device 001: ID 1d6b:0001 Linux Foundation 1.1 root hub
    Bus 005 Device 003: ID 0403:6010 Future Technology Devices International, Ltd FT2232C Dual USB-UART/FIFO IC
    Bus 005 Device 002: ID 1a40:0201 Terminus Technology Inc. FE 2.1 7-port Hub
    Bus 005 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
    root@rock960:~# lsusb -t
    /:  Bus 06.Port 1: Dev 1, Class=root_hub, Driver=ohci-platform/1p, 12M
    /:  Bus 05.Port 1: Dev 1, Class=root_hub, Driver=ehci-platform/1p, 480M
        |__ Port 1: Dev 2, If 0, Class=Hub, Driver=hub/7p, 480M
            |__ Port 2: Dev 3, If 0, Class=Vendor Specific Class, Driver=ftdi_sio, 480M
            |__ Port 2: Dev 3, If 1, Class=Vendor Specific Class, Driver=ftdi_sio, 480M
    /:  Bus 04.Port 1: Dev 1, Class=root_hub, Driver=xhci-hcd/1p, 5000M
        |__ Port 1: Dev 2, If 0, Class=Hub, Driver=hub/4p, 5000M
            |__ Port 3: Dev 3, If 0, Class=Mass Storage, Driver=uas, 5000M
    /:  Bus 03.Port 1: Dev 1, Class=root_hub, Driver=xhci-hcd/1p, 480M
        |__ Port 1: Dev 2, If 0, Class=Hub, Driver=hub/4p, 480M
    /:  Bus 02.Port 1: Dev 1, Class=root_hub, Driver=ohci-platform/1p, 12M
    /:  Bus 01.Port 1: Dev 1, Class=root_hub, Driver=ehci-platform/1p, 480M

The good news: there is SuperSpeed (USB3) on the USB-C connector and it's not behind the internal USB3 hub (BTW: Schematics for the board are here: https://dl.vamrs.com/products/ficus/docs/hw/). The bad news: all USB receptacles are behind one of the two internal USB hubs.

So let's focus now on the 2 SATA ports provided by the JMS561.

1) Samsung EVO840 connected to one of the SATA ports. Testing methodology exactly identical as outlined here: https://forum.armbian.com/topic/8097-nanopi-m4-performance-and-consumption-review/?do=findComment&comment=61783. Only some minor slowdowns compared to NanoPi M4 (there the JMS567 USB-to-SATA bridge also being behind an internal USB3 hub). In other words: when HDDs are attached this is just fine since then the HDD is the bottleneck and neither the JMS561 nor the USB3 connection is:

                                                        random   random
          kB  reclen    write  rewrite     read   reread     read    write
      102400       4    23192    26619    20636    20727    20633    26177
      102400      16    79214    89381    78784    79102    78889    88974
      102400     512   292661   295840   271486   277983   277481   300712
      102400    1024   321196   322169   305092   312765   312185   329470
      102400   16384   356091   356179   350200   360167   359817   357583
     2048000   16384   371761   374096   361612   361590   361724   374401

2) Another Samsung added to 2nd 'SATA' port (now 2 disks connected to the JMS561). Running two independent iozone tests with 2GB test size at the same time. As expected we now run into 'shared bandwidth' and bus contention issues since both SSD are connected to the same JMS561 that is bottlenecked by the upstream connection to the SoC (one single SuperSpeed line that has to go through the USB3 hub). If we would talk about HDD the below numbers are still excellent since HDD are still the bottleneck with such a '2 disk' setup.

                                                                random   random
                  kB  reclen    write  rewrite     read   reread     read    write
    PM851    2048000   16384   131096   132075   161149   162122   161282   131273
    EVO840   2048000   16384   151916   157295   176928   183874   186000   178285

3) Now adding another Samsung SSD in an JMS567 enclosure to one of the USB3 receptacles (also behind the hub). As expected 'shared bandwidth' and bus contention issues matter even more now with 3 parallel iozone benchmarks running on each SSD in parallel. But the newly added Samsung EVO750 in an external enclosure finished way earlier:

                                                                random   random
                  kB  reclen    write  rewrite     read   reread     read    write
    PM851    2048000   16384   112897   130875   125697   171855   175661   134626
    EVO840   2048000   16384   113541   132846   127664   172124   174311   137836
    EVO750   2048000   16384   214842   164890   328764   326065   197740   154398

TL;DR: The 2 available SATA ports provided by a JMS561 in PM fashion are totally sufficient when you want to attach one or two HDDs. In case you need highest random IO or sequential IO performance exceeding the USB3 limit (with this 'behind a hub' topology this means ~365 MB/s) then your only choice is the PCIe slot once it works (either by using a PCIe based SSD there or a SATA or even SAS adapter)
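The shared-bandwidth effect in test 2 can be checked with simple arithmetic: adding up the sequential numbers of both SSDs running in parallel gives a total that stays below what the single EVO840 reached alone in test 1 (around 360 to 370 MB/s). A small sketch, with the helper name `total_mb_per_s` made up and iozone's kB/s figures roughly converted by dividing by 1000:

```shell
#!/bin/sh
# Add up throughput figures (kB/s) given as arguments and print the total
# in MB/s (integer division by 1000, a rough conversion).
total_mb_per_s() {
    total=0
    for kb in "$@"; do
        total=$(( total + kb ))
    done
    echo $(( total / 1000 ))
}

# Test 2, PM851 + EVO840 in parallel behind the JMS561:
total_mb_per_s 131096 151916    # sequential write, prints 283
total_mb_per_s 161149 176928    # sequential read, prints 338
```

Both totals are lower than the single-disk result, which fits the picture of two disks competing for one SuperSpeed link rather than each disk being the limit.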