Posts posted by usual user

-

-

5 hours ago, Ryzer said:

Another issue is that neither is 100% accurate as some listed gpio lines do not actually exist

"/sys/kernel/debug/gpio" represents what the kernel has instantiated. Its accuracy depends on the exact description (DT), as the layout cannot be probed.

But many people think they can copy together DT fragments from similar devices and get an exact description of a particular device without verification. A DT is just like a device schematic: it will never accurately replicate the layout of another device. DT even goes one step further, as it can change the layout itself at runtime.

5 hours ago, Ryzer said:

That said if the mapping was incorrect then surely the dc pin and backlight pins woud not be correct either.

The test was intended to verify that the GPIO subsystem is correctly configured and that the GPIO line can be acquired from a native GPIO process.

This is because the SPI IP cannot operate the line as a native CS line; it can only be emulated with the cooperation of the GPIO subsystem.

5 hours ago, Ryzer said:

Are you sure this is right as it returns an error and gpioset -h does not list a -c option

Mine does:

Spoiler

    # gpioset --help
    Usage: gpioset [OPTIONS] <line=value>...

    Set values of GPIO lines.

    Lines are specified by name, or optionally by offset if the chip option is provided.
    Values may be '1' or '0', or equivalently 'active'/'inactive' or 'on'/'off'.

    The line output state is maintained until the process exits, but after that
    is not guaranteed.

    Options:
          --banner              display a banner on successful startup
      -b, --bias <bias>         specify the line bias
                                Possible values: 'pull-down', 'pull-up', 'disabled'.
                                (default is to leave bias unchanged)
          --by-names            treat lines as names even if they would parse as an offset
      -c, --chip <chip>         restrict scope to a particular chip
      -C, --consumer <name>     consumer name applied to requested lines (default is 'gpioset')
      -d, --drive <drive>       specify the line drive mode
                                Possible values: 'push-pull', 'open-drain', 'open-source'.
                                (default is 'push-pull')
      -h, --help                display this help and exit
      -l, --active-low          treat the line as active low
      -p, --hold-period <period>
                                the minimum time period to hold lines at the requested values
      -s, --strict              abort if requested line names are not unique
      -t, --toggle <period>[,period]...
                                toggle the line(s) after the specified period(s)
                                If the last period is non-zero then the sequence repeats.
          --unquoted            don't quote line names
      -v, --version             output version information and exit
      -z, --daemonize           set values then detach from the controlling terminal

    # gpioset --version
    gpioset (libgpiod) v2.1.3
    Copyright (C) 2017-2023 Bartosz Golaszewski
    License: GPL-2.0-or-later
    This is free software: you are free to change and redistribute it.
    There is NO WARRANTY, to the extent permitted by law.

-

3 hours ago, Ryzer said:

I did a bit of digging into previous commits to armbian build and found an interesting patch for the 5.15 kernel series:

Of course, I don't know which patches Armbian has applied over time, but what looks suspicious is that the GPIO line numbers for gpiochip1 differ between the "/sys/kernel/debug/gpio" logs. This shouldn't be caused by the DT, and if it is, the numbers should match again when the 5.15.88 variant is used.

To test the basic function of the GPIO subsystem in 6.6.16, post the state of "/sys/kernel/debug/gpio" while "gpioset -c0 34=on" is running at the same time with root permissions.

-

11 hours ago, Ryzer said:

which location do I source the old dtb from as there is a pcduino2.dtb at /boot/dtb/ as well as /boot/dtb-5.15.88.

/boot/dtb/ is probably a symlink to the real /boot/dtb-5.15.88 directory, which carries the version number in its name to hint at the source version the DTBs were built from.

I usually have plenty of kernels installed at the same time, so I only have to adjust the symlink to refer to a different DTB set. But this doesn't work so easily in Armbian with its single kernel layout strategy, because it results in name and directory conflicts there.

But in your case, it should be sufficient to copy the /boot/dtb-5.15.88 directory alongside the /boot/dtb-6.6.16 directory and adjust the symlink accordingly.
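The symlink adjustment can be sketched in Python as follows. This is only a minimal sketch: the function name `retarget_dtb_symlink` and the default link name `dtb` are assumptions made for illustration, it assumes the old DTB directory has already been copied into place, and it assumes /boot sits on a filesystem that supports symlinks.

```python
import os
from pathlib import Path


def retarget_dtb_symlink(boot_dir, target_name, link_name="dtb"):
    """Point <boot_dir>/<link_name> at <boot_dir>/<target_name>.

    Hypothetical helper: names and layout are assumptions, not Armbian tooling.
    """
    boot = Path(boot_dir)
    target = boot / target_name
    if not target.is_dir():
        raise FileNotFoundError(f"{target} is not a directory")

    link = boot / link_name
    # Never clobber a real directory, only an existing symlink.
    if link.exists() and not link.is_symlink():
        raise RuntimeError(f"{link} exists and is not a symlink")

    # Create the new link under a temporary name, then rename it over the
    # old one so that there is no moment without a valid link.
    tmp = boot / (link_name + ".new")
    if tmp.is_symlink():
        tmp.unlink()
    os.symlink(target_name, tmp)  # relative link, stays valid if /boot moves
    os.replace(tmp, link)
    return link
```

Run as root, a call such as `retarget_dtb_symlink("/boot", "dtb-5.15.88")` would switch the DTB set; calling it again with "dtb-6.6.16" switches back.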

Oh, just to be clear: this assumes both are mainline kernel builds and no legacy kernel fork is involved. With a legacy fork it won't work, because incompatible out-of-tree hacks are most likely involved.

11 hours ago, Ryzer said:

As another test I have tried using the spi-gpio module in place of spi-sun4i but have been unable to get that to work at all.

You can't mix binaries of kernel components of different versions, not even different builds of the same version, because you can't make sure that the ABI hasn't changed. For DTBs intermixing is possible because they describe hardware and are agnostic of the consumer binary code. The mainline kernel with its "no regression" policy ensures that earlier releases remain functional.

For a given userspace, you can use any kernel as long as its build is configured with the same components, because it exposes a stable userspace-kernel API.

To rule out that something got messed up by the error-prone Armbian DT workflow, run the 6.6.16 kernel with the DTB and its related DTBOs created by the 5.15.88 build.

-

5 hours ago, Ryzer said:

A slight draw back with this approach is that it does not currently acknowledge when gpio are configured for spi.

Look at "/sys/kernel/debug/gpio"; it will tell you which GPIO is in use by which driver.
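If you want to evaluate that file from a script, a small Python sketch could look like this. It assumes the generic gpiolib line layout, "gpio-N (name|consumer) direction level"; drivers that bring their own debug output may print something different. The sample text is made up for illustration and is not taken from a real board, and the function name `parse_debug_gpio` is hypothetical.

```python
import re

# Assumed layout of one line: " gpio-34  (name   |consumer   ) out hi ..."
LINE_RE = re.compile(
    r"^\s*gpio-(\d+)\s*\(([^|]*)\|([^)]*)\)\s*(in|out)\s+(hi|lo|\?)"
)


def parse_debug_gpio(text):
    """Return (number, name, consumer, direction, level) for each used line."""
    lines = []
    for raw in text.splitlines():
        match = LINE_RE.match(raw)
        if match is None:
            continue  # chip headers, blank lines, unknown formats
        number, name, consumer, direction, level = match.groups()
        lines.append(
            (int(number), name.strip(), consumer.strip(), direction, level)
        )
    return lines


# Illustrative sample only, not output from a real device.
SAMPLE = """\
gpiochip0: GPIOs 0-287, parent: platform/1c20800.pinctrl, 1c20800.pinctrl:
 gpio-34  (                    |spi0 CS0            ) out hi ACTIVE LOW
 gpio-226 (                    |dc                  ) out lo
"""

for number, name, consumer, direction, level in parse_debug_gpio(SAMPLE):
    print(f"gpio-{number}: used by '{consumer}' ({direction}, {level})")
```

On a real system the text would come from reading "/sys/kernel/debug/gpio" as root instead of from SAMPLE.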

-

-

12 minutes ago, hi-ko said:

the bootloader doesn't find a boot device

Bootstd is scanning for a valid bootflow at the partition where the bootflag is set. If none is set, the first partition is used as a fall-back default.
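The selection rule can be written down as a toy model in Python. This is not U-Boot's code, only a sketch of the rule as stated above; `Partition` and `pick_boot_partition` are hypothetical names, and taking the first flagged partition when several carry the flag is an assumption of the sketch.

```python
from dataclasses import dataclass


@dataclass
class Partition:
    number: int
    bootable: bool = False  # the boot flag in the partition table


def pick_boot_partition(partitions):
    """Return the partition that would be scanned for a bootflow.

    A partition with the boot flag wins; without any flag the first
    partition is the fall-back default. Returns None for an empty table.
    """
    if not partitions:
        return None
    for partition in partitions:
        if partition.bootable:
            return partition
    return partitions[0]


table = [Partition(1), Partition(2, bootable=True), Partition(3)]
print(pick_boot_partition(table).number)
```

So if the bootflow sits on a partition other than the first, setting the boot flag on that partition is what makes it the one being scanned.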

-

55 minutes ago, hi-ko said:

bootloader still boots into sdcard:

Mainline U-Boot scans various locations for valid bootflows. Usually eMMC and microSD are scanned before NVMe, so as long as a valid bootflow is found there, that one is used.

-

17 minutes ago, hi-ko said:

- used armbian-install: to boot from selected nve partition (btrfs) and install bootloader on sdcard

-

bootloader found the partition but faild to mount brfs partition:

[/sbin/fsck.ext4 (1) -- /dev/nvme0n1p2] fsck.ext4 -a -C0 /dev/nvme0n1p2

Looks like some partition format mixup, btrfs vs. ext4.

-

34 minutes ago, hi-ko said:

Here the console log from the failed boot

You are still using legacy firmware, the first step should be to switch to mainline.

-

-

1 hour ago, Werner said:

Voltage might be an issue.

The issue is probably due to the fact that rk35xx SoCs have to carve out some memory areas above 16 GB as reserved. Access to those areas leads to crashes.

Because no fully open source TF-A is available yet, mechanisms have landed in current mainline U-Boot to take the area information from the closed source TPL.

Chances are the firmware you're using hasn't backported this yet, because devices with more than 16 GB are only now becoming widely available on the open market.

On 2/24/2024 at 5:52 PM, p789 said:

I need a program that can sniff the 433Mhz signal coming from the remote control and analyze it in such a form that I can send it out again via the sender.

I would run:

gpiomon --consumer 433MHz --edges both --format "%S %E" con1-07 | tee 433MHz.log

On 2/24/2024 at 5:52 PM, p789 said:

In the good old sysfs times there were tools (like the 433Utils I mentioned above) that could do that - and my question is how this is done today...

I would let gnuplot chew on the result of the gpiomon run to visualize (433MHz.pdf) it, because I can interpret an image better than any columns of numbers.

gnuplot is over thirty years old, works on almost every platform, and can be used in any environment. The only complaints you could make are that it is not a specialized solution and that you don't have to rebuild half the system yourself, but that doesn't bother me.

After I have identified the relevant places, I would take the corresponding timings from the log and use them to generate a suitable gpioset command that imitates the original.
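Turning the timings into a gpioset call can be scripted. The following Python sketch assumes that each log line looks like "seconds.nanoseconds rising" or "seconds.nanoseconds falling" (what the "%S %E" format above should produce), that the relevant part of the log has already been cut out, and that gpioset accepts a "us" unit suffix for toggle periods. The function names and the transmitter line name "tx-line" are placeholders, not real names.

```python
import re

TIMESTAMP_RE = re.compile(r"\d+(\.\d+)?")


def parse_gpiomon_log(text):
    """Return [(timestamp_ns, edge), ...] from '%S %E' formatted lines."""
    events = []
    for raw in text.splitlines():
        parts = raw.split()
        if len(parts) != 2 or parts[1] not in ("rising", "falling"):
            continue  # skip anything that is not an edge event
        if TIMESTAMP_RE.fullmatch(parts[0]) is None:
            continue
        sec, _, frac = parts[0].partition(".")
        # Integer arithmetic avoids float rounding on long timestamps.
        ns = int(sec) * 1_000_000_000 + int((frac + "000000000")[:9])
        events.append((ns, parts[1]))
    return events


def build_gpioset_command(events, tx_line, consumer="433MHz"):
    """Build a gpioset command line that replays the recorded edges."""
    if len(events) < 2:
        raise ValueError("need at least two edges to derive a period")
    # Level of the line right after the first recorded edge.
    first_level = 1 if events[0][1] == "rising" else 0
    periods = []
    for (t0, _), (t1, _) in zip(events, events[1:]):
        # Round to whole microseconds, never emit a zero period mid-sequence.
        periods.append(max(1, (t1 - t0 + 500) // 1000))
    # A trailing 0 keeps the sequence from repeating.
    toggle = ",".join(f"{p}us" for p in periods) + ",0"
    return (
        f"gpioset --consumer {consumer} --toggle {toggle} "
        f"{tx_line}={first_level}"
    )


SAMPLE = """\
10.000000000 rising
10.000350000 falling
10.001400000 rising
"""

print(build_gpioset_command(parse_gpiomon_log(SAMPLE), "tx-line"))
```

The trailing 0 relies on the rule from the gpioset help text that the sequence only repeats if the last period is non-zero. Whether the timing of a userspace gpioset run is accurate enough for the receiver has to be checked on the real hardware.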

14 hours ago, Hand Rawing said:

Did you mean that I need to build the latest version of mesa from source code?

No, it's just the spec with which my currently running mesa is built. Already the mesa version with which I started had all the necessary, essential functions. Of course, all bug fixes and improvements that have been incorporated in the meantime are not included there.

14 hours ago, Hand Rawing said:

Does this mean the configuration in kernel?

No, there is not much to see in terms of kernel. As long as the Panfrost driver is built and the Mali GPU is properly wired-up in DT, there is nothing to do.

It's about Mesa, the user space counterpart. It is the component that makes use of the GPU the kernel exposes.

14 hours ago, Hand Rawing said:

Hi, can you share all the requirements that are required for making the GPU work?

If you want to check all dependencies, you have to look at all spec files that are pulled by Requires from the mesa package. For me, however, this is done by the package manager during installation. And to build mesa, I install all BuildRequires with:

dnf builddep mesa.spec

But building Mesa yourself has long since ceased to be necessary, as no modifications are needed and the standard package works out-of-the-box.

8 hours ago, Hand Rawing said:

3. command used to compile the mesa

Your build configuration options are looking incomplete.

Here's an excerpt from the build.log resulting from the spec file:

/usr/bin/meson setup --buildtype=plain --prefix=/usr --libdir=/usr/lib64 --libexecdir=/usr/libexec --bindir=/usr/bin --sbindir=/usr/sbin --includedir=/usr/include --datadir=/usr/share --mandir=/usr/share/man --infodir=/usr/share/info --localedir=/usr/share/locale --sysconfdir=/etc --localstatedir=/var --sharedstatedir=/var/lib --wrap-mode=nodownload --auto-features=enabled . redhat-linux-build -Dplatforms=x11,wayland -Ddri3=enabled -Dosmesa=true -Dgallium-drivers=swrast,virgl,nouveau,r300,svga,radeonsi,r600,freedreno,etnaviv,tegra,vc4,v3d,kmsro,lima,panfrost,zink -Dgallium-vdpau=enabled -Dgallium-omx=bellagio -Dgallium-va=enabled -Dgallium-xa=enabled -Dgallium-nine=true -Dteflon=true -Dgallium-opencl=icd -Dgallium-rusticl=true -Dvulkan-drivers=swrast,amd,broadcom,freedreno,panfrost,imagination-experimental,nouveau -Dvulkan-layers=device-select -Dshared-glapi=enabled -Dgles1=enabled -Dgles2=enabled -Dopengl=true -Dgbm=enabled -Dglx=dri -Degl=enabled -Dglvnd=enabled -Dintel-rt=disabled -Dmicrosoft-clc=disabled -Dllvm=enabled -Dshared-llvm=enabled -Dvalgrind=enabled -Dbuild-tests=false -Dselinux=true -Dandroid-libbacktrace=disabled

29 minutes ago, Hand Rawing said:

I have built a unofficial version armbian myself from mainline, but the ‘glxinfo -B’ still shows llvmpipe as GPU device.

Have you made sure that all BuildRequires have been properly fulfilled and that the build configuration options have been selected correctly?

Especially the hardware-related ones.

9 hours ago, Hand Rawing said:

Can you share or tell me which image are you using?

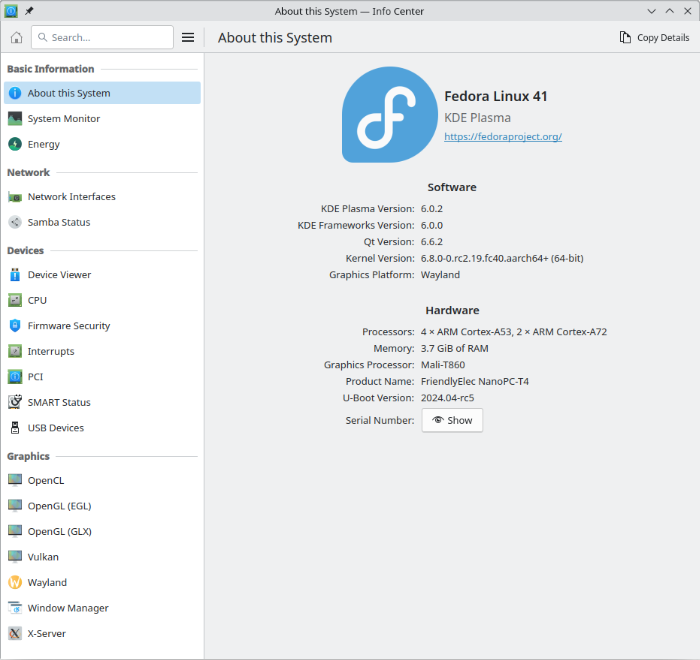

I've started with this, but the logs provided are created with this system:

However, the system used is not really important, the Mali support has been very mature in mainline for a long time, so any correspondingly built system should be usable.

-

3 hours ago, Hand Rawing said:

I am wondering if I can use the GPU on kernel 6.x, because I found that the case in this forum that can enable GPU for rk3399 all use the legacy version kernel, like 4.X.

It has been working for me for ages.

-

1 hour ago, HansD said:

I'm not into bootmenu editing nor firmaware building. So I cannot and have not checked those suggestions.

I just need a reliable host for Homeassistant.

I guess with such an attitude you certainly can't motivate anyone to find an immediate solution to your problem. Maybe you'll be lucky and it will work with a future release, but until then all you can do is keep trying and waiting.

You got what you paid for. The currency here is to contribute to the project (Armbian) and help with problem analysis. The project is community driven and you are a member of the community.

-

4 hours ago, McTurbo said:

hantro-vpu driver, only MPEG2 and VP8 are shown as supported codecs for decoding. No H.264

Since userspace cannot sensibly select between two decoder instances of the same type, the H.264 hantro decoder is usually disabled for the rk3399 and the H.264 rkvdec is preferred.

-

20 hours ago, johmue said:

Is there an "official" way to get back my /dev/AML1 UART device which is sustainable?

meson-g12b-odroid-n2-plus.dtb is a base DTB with a statically applied overlay.

Post 23 of the thread you mentioned references a parallel thread where the overlay source is provided.

So prepare a PR so that the overlay can be included in Armbian.

You'll probably reap tons of grateful users who have been waiting for someone to make the effort.

4 hours ago, mongoose said:

I did (edit boot.cmd), but to no success.

Some settings that were set at build time can't be modified at runtime.

This requires a new firmware build, e.g. to set the boot delay to 0, the build configuration has to be set to "CONFIG_BOOTDELAY=0".

4 hours ago, mongoose said:

it uses the defaults, though this doesn't happen with u-boot on debian.

This is due to the build configuration. The build configuration determines the properties of a binary created from a certain source. These can be detail settings, or even decide which hardware the binary is usable on.

After all, U-Boot can be built for all devices from the same source of a given release version.

E.g. "make libretech-cc_defconfig" prepares a default configuration for LePotato.

You can use "make menuconfig" to fine-tune it afterwards.

4 hours ago, mongoose said:

how I could integrate that into an existing armbian image

I don't know the details of Armbian's build framework, but I'm sure you can inject a patch that implements your desired change.

-

2 hours ago, ag123 said:

u-boot does the bulk of the 'black magic' dealing with memory initialization and sizing

This is not correct. Due to its size, U-Boot must be run from RAM. The RAM must therefore already be initialized before U-Boot can be loaded at all. This is usually done by Trusted Firmware-A (TF-A). U-Boot is only the payload (in TF-A terminology: BL33).

You might want to read TF-A's documentation to understand the boot flow, I think here is a good place to start. You're lucky if TF-A is open source for your SoC, but often the SoC manufacturers only provide a binary blob for BL2 and BL31.

1 hour ago, mongoose said:

"save" doesn't exist.

Your firmware is built without a persistent Environment.

On 4/12/2024 at 12:51 AM, mongoose said:

16 Loading Environment from nowhere...

Only the compiled-in Environment is used, which can only be modified before build.

SPI not working properly with cs-gpio

in Allwinner sunxi

Posted

Ok, you have confirmed that the GPIO line can be properly acquired by a suitable process and the GPIO subsystem is working as expected.

The SPI subsystem does not seem to do this as desired for the CS line.

Whether this is due to a bug patched out of tree in the 5.15.88 kernel, or just a configuration error in the DT, I can't say, since you haven't published any DT sources on how your SPI controller is wired.

I'm interested in the original sources from which the DTB was created, not sources from a disassembled DTB, as those have lost valuable information that was stripped out during the assembly of the DTB.

But I also know that it is very difficult to acquire them with an Armbian build system since they most probably only exist as build artifacts which get composed from various patches.