All Activity

- Past hour

-

This works; we are even running daily automated tests on Bookworm, Jammy and Noble: https://github.com/armbian/os/actions/runs/15362508470/job/43232345405

Manual test on Rockchip64 (Bananapi M7) with 6.12.28-current, the latest kernel from the daily repository. Here is the build log:

```
Setting up zfs-dkms (2.3.1-1~bpo12+1) ...
Loading new zfs-2.3.1 DKMS files...
Building for 6.12.31-current-rockchip64
Building initial module for 6.12.31-current-rockchip64
Done.

zfs.ko:
Running module version sanity check.
 - Original module
   - No original module exists within this kernel
 - Installation
   - Installing to /lib/modules/6.12.31-current-rockchip64/updates/dkms/

spl.ko:
Running module version sanity check.
 - Original module
   - No original module exists within this kernel
 - Installation
   - Installing to /lib/modules/6.12.31-current-rockchip64/updates/dkms/
depmod...
Setting up libzfs6linux:arm64 (2.3.1-1~bpo12+1) ...
Setting up zfsutils-linux (2.3.1-1~bpo12+1) ...
insmod /lib/modules/6.12.31-current-rockchip64/updates/dkms/spl.ko
insmod /lib/modules/6.12.31-current-rockchip64/updates/dkms/zfs.ko
Created symlink /etc/systemd/system/zfs-import.target.wants/zfs-import-cache.service → /lib/systemd/system/zfs-import-cache.service.
Created symlink /etc/systemd/system/zfs.target.wants/zfs-import.target → /lib/systemd/system/zfs-import.target.
Created symlink /etc/systemd/system/zfs-mount.service.wants/zfs-load-module.service → /lib/systemd/system/zfs-load-module.service.
Created symlink /etc/systemd/system/zfs.target.wants/zfs-load-module.service → /lib/systemd/system/zfs-load-module.service.
Created symlink /etc/systemd/system/zfs.target.wants/zfs-mount.service → /lib/systemd/system/zfs-mount.service.
Created symlink /etc/systemd/system/zfs.target.wants/zfs-share.service → /lib/systemd/system/zfs-share.service.
Created symlink /etc/systemd/system/zfs-volumes.target.wants/zfs-volume-wait.service → /lib/systemd/system/zfs-volume-wait.service.
Created symlink /etc/systemd/system/zfs.target.wants/zfs-volumes.target → /lib/systemd/system/zfs-volumes.target.
Created symlink /etc/systemd/system/multi-user.target.wants/zfs.target → /lib/systemd/system/zfs.target.
zfs-import-scan.service is a disabled or a static unit, not starting it.
Processing triggers for libc-bin (2.36-9+deb12u10) ...
Processing triggers for man-db (2.11.2-2) ...
Processing triggers for initramfs-tools (0.142+deb12u3) ...
update-initramfs: Generating /boot/initrd.img-6.12.31-current-rockchip64
W: Possible missing firmware /lib/firmware/rtl_nic/rtl8126a-3.fw for module r8169
W: Possible missing firmware /lib/firmware/rtl_nic/rtl8126a-2.fw for module r8169
update-initramfs: Armbian: Converting to u-boot format: /boot/uInitrd-6.12.31-current-rockchip64
Image Name:   uInitrd
Created:      Sat May 31 16:18:38 2025
Image Type:   AArch64 Linux RAMDisk Image (gzip compressed)
Data Size:    16727849 Bytes = 16335.79 KiB = 15.95 MiB
Load Address: 00000000
Entry Point:  00000000
update-initramfs: Armbian: Symlinking /boot/uInitrd-6.12.31-current-rockchip64 to /boot/uInitrd
'/boot/uInitrd' -> 'uInitrd-6.12.31-current-rockchip64'
update-initramfs: Armbian: done.
root@bananapim7:/home/igorp# modinfo zfs
filename:       /lib/modules/6.12.31-current-rockchip64/updates/dkms/zfs.ko
version:        2.3.1-1~bpo12+1
license:        CDDL
license:        Dual BSD/GPL
license:        Dual MIT/GPL
author:         OpenZFS
description:    ZFS
alias:          zzstd
alias:          zcommon
alias:          zunicode
alias:          znvpair
alias:          zlua
alias:          icp
alias:          zavl
alias:          devname:zfs
alias:          char-major-10-249
srcversion:     2742833EE1C14D857611F06
depends:        spl
name:           zfs
vermagic:       6.12.31-current-rockchip64 SMP preempt mod_unload aarch64
parm:           zvol_inhibit_dev:Do not create zvol device nodes (uint)
parm:           zvol_major:Major number for zvol device (uint)
parm:           zvol_threads:Number of threads to handle I/O requests. Set to 0 to use all active CPUs (uint)
parm:           zvol_request_sync:Synchronously handle bio requests (uint)
parm:           zvol_max_discard_blocks:Max number of blocks to discard (ulong)
parm:           zvol_num_taskqs:Number of zvol taskqs (uint)
parm:           zvol_prefetch_bytes:Prefetch N bytes at zvol start+end (uint)
parm:           zvol_volmode:Default volmode property value (uint)
parm:           zvol_blk_mq_queue_depth:Default blk-mq queue depth (uint)
parm:           zvol_use_blk_mq:Use the blk-mq API for zvols (uint)
parm:           zvol_blk_mq_blocks_per_thread:Process volblocksize blocks per thread (uint)
parm:           zvol_open_timeout_ms:Timeout for ZVOL open retries (uint)
parm:           zfs_xattr_compat:Use legacy ZFS xattr naming for writing new user namespace xattrs
parm:           zfs_fallocate_reserve_percent:Percentage of length to use for the available capacity check (uint)
parm:           zfs_key_max_salt_uses:Max number of times a salt value can be used for generating encryption keys before it is rotated (ulong)
parm:           zfs_object_mutex_size:Size of znode hold array (uint)
parm:           zfs_unlink_suspend_progress:Set to prevent async unlinks (debug - leaks space into the unlinked set) (int)
parm:           zfs_delete_blocks:Delete files larger than N blocks async (ulong)
parm:           zfs_dbgmsg_enable:Enable ZFS debug message log (int)
parm:           zfs_dbgmsg_maxsize:Maximum ZFS debug log size (uint)
parm:           zfs_admin_snapshot:Enable mkdir/rmdir/mv in .zfs/snapshot (int)
parm:           zfs_expire_snapshot:Seconds to expire .zfs/snapshot (int)
parm:           zfs_snapshot_no_setuid:Disable setuid/setgid for automounts in .zfs/snapshot (int)
parm:           zfs_vdev_scheduler:I/O scheduler
parm:           zfs_vdev_open_timeout_ms:Timeout before determining that a device is missing
parm:           zfs_vdev_failfast_mask:Defines failfast mask: 1 - device, 2 - transport, 4 - driver
parm:           zfs_vdev_disk_max_segs:Maximum number of data segments to add to an IO request (min 4)
parm:           zfs_vdev_disk_classic:Use classic BIO submission method
parm:           zfs_arc_shrinker_limit:Limit on number of pages that ARC shrinker can reclaim at once
parm:           zfs_arc_shrinker_seeks:Relative cost of ARC eviction vs other kernel subsystems
parm:           zfs_abd_scatter_enabled:Toggle whether ABD allocations must be linear. (int)
parm:           zfs_abd_scatter_min_size:Minimum size of scatter allocations. (int)
parm:           zfs_abd_scatter_max_order:Maximum order allocation used for a scatter ABD. (uint)
parm:           zio_slow_io_ms:Max I/O completion time (milliseconds) before marking it as slow
parm:           zio_requeue_io_start_cut_in_line:Prioritize requeued I/O
parm:           zfs_sync_pass_deferred_free:Defer frees starting in this pass
parm:           zfs_sync_pass_dont_compress:Don't compress starting in this pass
parm:           zfs_sync_pass_rewrite:Rewrite new bps starting in this pass
parm:           zio_dva_throttle_enabled:Throttle block allocations in the ZIO pipeline
parm:           zio_deadman_log_all:Log all slow ZIOs, not just those with vdevs
parm:           zfs_commit_timeout_pct:ZIL block open timeout percentage
parm:           zil_replay_disable:Disable intent logging replay
parm:           zil_nocacheflush:Disable ZIL cache flushes
parm:           zil_slog_bulk:Limit in bytes slog sync writes per commit
parm:           zil_maxblocksize:Limit in bytes of ZIL log block size
parm:           zil_maxcopied:Limit in bytes WR_COPIED size
parm:           zfs_vnops_read_chunk_size:Bytes to read per chunk
parm:           zfs_bclone_enabled:Enable block cloning
parm:           zfs_bclone_wait_dirty:Wait for dirty blocks when cloning
parm:           zfs_dio_enabled:Enable Direct I/O
parm:           zfs_zil_saxattr:Disable xattr=sa extended attribute logging in ZIL by settng 0.
parm:           zfs_immediate_write_sz:Largest data block to write to zil
parm:           zfs_max_nvlist_src_size:Maximum size in bytes allowed for src nvlist passed with ZFS ioctls
parm:           zfs_history_output_max:Maximum size in bytes of ZFS ioctl output that will be logged
parm:           zfs_zevent_retain_max:Maximum recent zevents records to retain for duplicate checking
parm:           zfs_zevent_retain_expire_secs:Expiration time for recent zevents records
parm:           zfs_lua_max_instrlimit:Max instruction limit that can be specified for a channel program
parm:           zfs_lua_max_memlimit:Max memory limit that can be specified for a channel program
parm:           zap_micro_max_size:Maximum micro ZAP size before converting to a fat ZAP, in bytes (max 1M)
parm:           zap_iterate_prefetch:When iterating ZAP object, prefetch it
parm:           zap_shrink_enabled:Enable ZAP shrinking
parm:           zfs_trim_extent_bytes_max:Max size of TRIM commands, larger will be split
parm:           zfs_trim_extent_bytes_min:Min size of TRIM commands, smaller will be skipped
parm:           zfs_trim_metaslab_skip:Skip metaslabs which have never been initialized
parm:           zfs_trim_txg_batch:Min number of txgs to aggregate frees before issuing TRIM
parm:           zfs_trim_queue_limit:Max queued TRIMs outstanding per leaf vdev
parm:           zfs_removal_ignore_errors:Ignore hard IO errors when removing device
parm:           zfs_remove_max_segment:Largest contiguous segment to allocate when removing device
parm:           vdev_removal_max_span:Largest span of free chunks a remap segment can span
parm:           zfs_removal_suspend_progress:Pause device removal after this many bytes are copied (debug use only - causes removal to hang)
parm:           zfs_rebuild_max_segment:Max segment size in bytes of rebuild reads
parm:           zfs_rebuild_vdev_limit:Max bytes in flight per leaf vdev for sequential resilvers
parm:           zfs_rebuild_scrub_enabled:Automatically scrub after sequential resilver completes
parm:           raidz_expand_max_reflow_bytes:For testing, pause RAIDZ expansion after reflowing this many bytes
parm:           raidz_expand_max_copy_bytes:Max amount of concurrent i/o for RAIDZ expansion
parm:           raidz_io_aggregate_rows:For expanded RAIDZ, aggregate reads that have more rows than this
parm:           zfs_scrub_after_expand:For expanded RAIDZ, automatically start a pool scrub when expansion completes
parm:           zfs_vdev_aggregation_limit:Max vdev I/O aggregation size
parm:           zfs_vdev_aggregation_limit_non_rotating:Max vdev I/O aggregation size for non-rotating media
parm:           zfs_vdev_read_gap_limit:Aggregate read I/O over gap
parm:           zfs_vdev_write_gap_limit:Aggregate write I/O over gap
parm:           zfs_vdev_max_active:Maximum number of active I/Os per vdev
parm:           zfs_vdev_async_write_active_max_dirty_percent:Async write concurrency max threshold
parm:           zfs_vdev_async_write_active_min_dirty_percent:Async write concurrency min threshold
parm:           zfs_vdev_async_read_max_active:Max active async read I/Os per vdev
parm:           zfs_vdev_async_read_min_active:Min active async read I/Os per vdev
parm:           zfs_vdev_async_write_max_active:Max active async write I/Os per vdev
parm:           zfs_vdev_async_write_min_active:Min active async write I/Os per vdev
parm:           zfs_vdev_initializing_max_active:Max active initializing I/Os per vdev
parm:           zfs_vdev_initializing_min_active:Min active initializing I/Os per vdev
parm:           zfs_vdev_removal_max_active:Max active removal I/Os per vdev
parm:           zfs_vdev_removal_min_active:Min active removal I/Os per vdev
parm:           zfs_vdev_scrub_max_active:Max active scrub I/Os per vdev
parm:           zfs_vdev_scrub_min_active:Min active scrub I/Os per vdev
parm:           zfs_vdev_sync_read_max_active:Max active sync read I/Os per vdev
parm:           zfs_vdev_sync_read_min_active:Min active sync read I/Os per vdev
parm:           zfs_vdev_sync_write_max_active:Max active sync write I/Os per vdev
parm:           zfs_vdev_sync_write_min_active:Min active sync write I/Os per vdev
parm:           zfs_vdev_trim_max_active:Max active trim/discard I/Os per vdev
parm:           zfs_vdev_trim_min_active:Min active trim/discard I/Os per vdev
parm:           zfs_vdev_rebuild_max_active:Max active rebuild I/Os per vdev
parm:           zfs_vdev_rebuild_min_active:Min active rebuild I/Os per vdev
parm:           zfs_vdev_nia_credit:Number of non-interactive I/Os to allow in sequence
parm:           zfs_vdev_nia_delay:Number of non-interactive I/Os before _max_active
parm:           zfs_vdev_queue_depth_pct:Queue depth percentage for each top-level vdev
parm:           zfs_vdev_def_queue_depth:Default queue depth for each allocator
parm:           zfs_vdev_mirror_rotating_inc:Rotating media load increment for non-seeking I/Os
parm:           zfs_vdev_mirror_rotating_seek_inc:Rotating media load increment for seeking I/Os
parm:           zfs_vdev_mirror_rotating_seek_offset:Offset in bytes from the last I/O which triggers a reduced rotating media seek increment
parm:           zfs_vdev_mirror_non_rotating_inc:Non-rotating media load increment for non-seeking I/Os
parm:           zfs_vdev_mirror_non_rotating_seek_inc:Non-rotating media load increment for seeking I/Os
parm:           zfs_initialize_value:Value written during zpool initialize
parm:           zfs_initialize_chunk_size:Size in bytes of writes by zpool initialize
parm:           zfs_condense_indirect_vdevs_enable:Whether to attempt condensing indirect vdev mappings
parm:           zfs_condense_indirect_obsolete_pct:Minimum obsolete percent of bytes in the mapping to attempt condensing
parm:           zfs_condense_min_mapping_bytes:Don't bother condensing if the mapping uses less than this amount of memory
parm:           zfs_condense_max_obsolete_bytes:Minimum size obsolete spacemap to attempt condensing
parm:           zfs_condense_indirect_commit_entry_delay_ms:Used by tests to ensure certain actions happen in the middle of a condense. A maximum value of 1 should be sufficient.
parm:           zfs_reconstruct_indirect_combinations_max:Maximum number of combinations when reconstructing split segments
parm:           vdev_file_logical_ashift:Logical ashift for file-based devices
parm:           vdev_file_physical_ashift:Physical ashift for file-based devices
parm:           zfs_vdev_default_ms_count:Target number of metaslabs per top-level vdev
parm:           zfs_vdev_default_ms_shift:Default lower limit for metaslab size
parm:           zfs_vdev_max_ms_shift:Default upper limit for metaslab size
parm:           zfs_vdev_min_ms_count:Minimum number of metaslabs per top-level vdev
parm:           zfs_vdev_ms_count_limit:Practical upper limit of total metaslabs per top-level vdev
parm:           zfs_slow_io_events_per_second:Rate limit slow IO (delay) events to this many per second
parm:           zfs_deadman_events_per_second:Rate limit hung IO (deadman) events to this many per second
parm:           zfs_dio_write_verify_events_per_second:Rate Direct I/O write verify events to this many per second
parm:           zfs_vdev_direct_write_verify:Direct I/O writes will perform for checksum verification before commiting write
parm:           zfs_checksum_events_per_second:Rate limit checksum events to this many checksum errors per second (do not set below ZED threshold).
parm:           zfs_scan_ignore_errors:Ignore errors during resilver/scrub
parm:           vdev_validate_skip:Bypass vdev_validate()
parm:           zfs_nocacheflush:Disable cache flushes
parm:           zfs_embedded_slog_min_ms:Minimum number of metaslabs required to dedicate one for log blocks
parm:           zfs_vdev_min_auto_ashift:Minimum ashift used when creating new top-level vdevs
parm:           zfs_vdev_max_auto_ashift:Maximum ashift used when optimizing for logical -> physical sector size on new top-level vdevs
parm:           zfs_vdev_raidz_impl:RAIDZ implementation
parm:           zfs_txg_timeout:Max seconds worth of delta per txg
parm:           zfs_read_history:Historical statistics for the last N reads
parm:           zfs_read_history_hits:Include cache hits in read history
parm:           zfs_txg_history:Historical statistics for the last N txgs
parm:           zfs_multihost_history:Historical statistics for last N multihost writes
parm:           zfs_flags:Set additional debugging flags
parm:           zfs_recover:Set to attempt to recover from fatal errors
parm:           zfs_free_leak_on_eio:Set to ignore IO errors during free and permanently leak the space
parm:           zfs_deadman_checktime_ms:Dead I/O check interval in milliseconds
parm:           zfs_deadman_enabled:Enable deadman timer
parm:           spa_asize_inflation:SPA size estimate multiplication factor
parm:           zfs_ddt_data_is_special:Place DDT data into the special class
parm:           zfs_user_indirect_is_special:Place user data indirect blocks into the special class
parm:           zfs_deadman_failmode:Failmode for deadman timer
parm:           zfs_deadman_synctime_ms:Pool sync expiration time in milliseconds
parm:           zfs_deadman_ziotime_ms:IO expiration time in milliseconds
parm:           zfs_special_class_metadata_reserve_pct:Small file blocks in special vdevs depends on this much free space available
parm:           spa_slop_shift:Reserved free space in pool
parm:           spa_num_allocators:Number of allocators per spa
parm:           spa_cpus_per_allocator:Minimum number of CPUs per allocators
parm:           zfs_unflushed_max_mem_amt:Specific hard-limit in memory that ZFS allows to be used for unflushed changes
parm:           zfs_unflushed_max_mem_ppm:Percentage of the overall system memory that ZFS allows to be used for unflushed changes (value is calculated over 1000000 for finer granularity)
parm:           zfs_unflushed_log_block_max:Hard limit (upper-bound) in the size of the space map log in terms of blocks.
parm:           zfs_unflushed_log_block_min:Lower-bound limit for the maximum amount of blocks allowed in log spacemap (see zfs_unflushed_log_block_max)
parm:           zfs_unflushed_log_txg_max:Hard limit (upper-bound) in the size of the space map log in terms of dirty TXGs.
parm:           zfs_unflushed_log_block_pct:Tunable used to determine the number of blocks that can be used for the spacemap log, expressed as a percentage of the total number of metaslabs in the pool (e.g. 400 means the number of log blocks is capped at 4 times the number of metaslabs)
parm:           zfs_max_log_walking:The number of past TXGs that the flushing algorithm of the log spacemap feature uses to estimate incoming log blocks
parm:           zfs_keep_log_spacemaps_at_export:Prevent the log spacemaps from being flushed and destroyed during pool export/destroy
parm:           zfs_max_logsm_summary_length:Maximum number of rows allowed in the summary of the spacemap log
parm:           zfs_min_metaslabs_to_flush:Minimum number of metaslabs to flush per dirty TXG
parm:           spa_upgrade_errlog_limit:Limit the number of errors which will be upgraded to the new on-disk error log when enabling head_errlog
parm:           spa_config_path:SPA config file (/etc/zfs/zpool.cache)
parm:           zfs_autoimport_disable:Disable pool import at module load
parm:           zfs_spa_discard_memory_limit:Limit for memory used in prefetching the checkpoint space map done on each vdev while discarding the checkpoint
parm:           metaslab_preload_pct:Percentage of CPUs to run a metaslab preload taskq
parm:           spa_load_verify_shift:log2 fraction of arc that can be used by inflight I/Os when verifying pool during import
parm:           spa_load_verify_metadata:Set to traverse metadata on pool import
parm:           spa_load_verify_data:Set to traverse data on pool import
parm:           spa_load_print_vdev_tree:Print vdev tree to zfs_dbgmsg during pool import
parm:           zio_taskq_batch_pct:Percentage of CPUs to run an IO worker thread
parm:           zio_taskq_batch_tpq:Number of threads per IO worker taskqueue
parm:           zfs_max_missing_tvds:Allow importing pool with up to this number of missing top-level vdevs (in read-only mode)
parm:           zfs_livelist_condense_zthr_pause:Set the livelist condense zthr to pause
parm:           zfs_livelist_condense_sync_pause:Set the livelist condense synctask to pause
parm:           zfs_livelist_condense_sync_cancel:Whether livelist condensing was canceled in the synctask
parm:           zfs_livelist_condense_zthr_cancel:Whether livelist condensing was canceled in the zthr function
parm:           zfs_livelist_condense_new_alloc:Whether extra ALLOC blkptrs were added to a livelist entry while it was being condensed
parm:           zio_taskq_read:Configure IO queues for read IO
parm:           zio_taskq_write:Configure IO queues for write IO
parm:           zio_taskq_write_tpq:Number of CPUs per write issue taskq
parm:           zfs_multilist_num_sublists:Number of sublists used in each multilist
parm:           zfs_multihost_interval:Milliseconds between mmp writes to each leaf
parm:           zfs_multihost_fail_intervals:Max allowed period without a successful mmp write
parm:           zfs_multihost_import_intervals:Number of zfs_multihost_interval periods to wait for activity
parm:           metaslab_aliquot:Allocation granularity (a.k.a. stripe size)
parm:           metaslab_debug_load:Load all metaslabs when pool is first opened
parm:           metaslab_debug_unload:Prevent metaslabs from being unloaded
parm:           metaslab_preload_enabled:Preload potential metaslabs during reassessment
parm:           metaslab_preload_limit:Max number of metaslabs per group to preload
parm:           metaslab_unload_delay:Delay in txgs after metaslab was last used before unloading
parm:           metaslab_unload_delay_ms:Delay in milliseconds after metaslab was last used before unloading
parm:           zfs_mg_noalloc_threshold:Percentage of metaslab group size that should be free to make it eligible for allocation
parm:           zfs_mg_fragmentation_threshold:Percentage of metaslab group size that should be considered eligible for allocations unless all metaslab groups within the metaslab class have also crossed this threshold
parm:           metaslab_fragmentation_factor_enabled:Use the fragmentation metric to prefer less fragmented metaslabs
parm:           zfs_metaslab_fragmentation_threshold:Fragmentation for metaslab to allow allocation
parm:           metaslab_lba_weighting_enabled:Prefer metaslabs with lower LBAs
parm:           metaslab_bias_enabled:Enable metaslab group biasing
parm:           zfs_metaslab_segment_weight_enabled:Enable segment-based metaslab selection
parm:           zfs_metaslab_switch_threshold:Segment-based metaslab selection maximum buckets before switching
parm:           metaslab_force_ganging:Blocks larger than this size are sometimes forced to be gang blocks
parm:           metaslab_force_ganging_pct:Percentage of large blocks that will be forced to be gang blocks
parm:           metaslab_df_max_search:Max distance (bytes) to search forward before using size tree
parm:           metaslab_df_use_largest_segment:When looking in size tree, use largest segment instead of exact fit
parm:           zfs_metaslab_max_size_cache_sec:How long to trust the cached max chunk size of a metaslab
parm:           zfs_metaslab_mem_limit:Percentage of memory that can be used to store metaslab range trees
parm:           zfs_metaslab_try_hard_before_gang:Try hard to allocate before ganging
parm:           zfs_metaslab_find_max_tries:Normally only consider this many of the best metaslabs in each vdev
parm:           zfs_active_allocator:SPA active allocator
parm:           zfs_zevent_len_max:Max event queue length
parm:           zfs_scan_vdev_limit:Max bytes in flight per leaf vdev for scrubs and resilvers
parm:           zfs_scrub_min_time_ms:Min millisecs to scrub per txg
parm:           zfs_obsolete_min_time_ms:Min millisecs to obsolete per txg
parm:           zfs_free_min_time_ms:Min millisecs to free per txg
parm:           zfs_resilver_min_time_ms:Min millisecs to resilver per txg
parm:           zfs_scan_suspend_progress:Set to prevent scans from progressing
parm:           zfs_no_scrub_io:Set to disable scrub I/O
parm:           zfs_no_scrub_prefetch:Set to disable scrub prefetching
parm:           zfs_async_block_max_blocks:Max number of blocks freed in one txg
parm:           zfs_max_async_dedup_frees:Max number of dedup blocks freed in one txg
parm:           zfs_free_bpobj_enabled:Enable processing of the free_bpobj
parm:           zfs_scan_blkstats:Enable block statistics calculation during scrub
parm:           zfs_scan_mem_lim_fact:Fraction of RAM for scan hard limit
parm:           zfs_scan_issue_strategy:IO issuing strategy during scrubbing. 0 = default, 1 = LBA, 2 = size
parm:           zfs_scan_legacy:Scrub using legacy non-sequential method
parm:           zfs_scan_checkpoint_intval:Scan progress on-disk checkpointing interval
parm:           zfs_scan_max_ext_gap:Max gap in bytes between sequential scrub / resilver I/Os
parm:           zfs_scan_mem_lim_soft_fact:Fraction of hard limit used as soft limit
parm:           zfs_scan_strict_mem_lim:Tunable to attempt to reduce lock contention
parm:           zfs_scan_fill_weight:Tunable to adjust bias towards more filled segments during scans
parm:           zfs_scan_report_txgs:Tunable to report resilver performance over the last N txgs
parm:           zfs_resilver_disable_defer:Process all resilvers immediately
parm:           zfs_resilver_defer_percent:Issued IO percent complete after which resilvers are deferred
parm:           zfs_scrub_error_blocks_per_txg:Error blocks to be scrubbed in one txg
parm:           zfs_dirty_data_max_percent:Max percent of RAM allowed to be dirty
parm:           zfs_dirty_data_max_max_percent:zfs_dirty_data_max upper bound as % of RAM
parm:           zfs_delay_min_dirty_percent:Transaction delay threshold
parm:           zfs_dirty_data_max:Determines the dirty space limit
parm:           zfs_wrlog_data_max:The size limit of write-transaction zil log data
parm:           zfs_dirty_data_max_max:zfs_dirty_data_max upper bound in bytes
parm:           zfs_dirty_data_sync_percent:Dirty data txg sync threshold as a percentage of zfs_dirty_data_max
parm:           zfs_delay_scale:How quickly delay approaches infinity
parm:           zfs_zil_clean_taskq_nthr_pct:Max percent of CPUs that are used per dp_sync_taskq
parm:           zfs_zil_clean_taskq_minalloc:Number of taskq entries that are pre-populated
parm:           zfs_zil_clean_taskq_maxalloc:Max number of taskq entries that are cached
parm:           zvol_enforce_quotas:Enable strict ZVOL quota enforcment
parm:           zfs_livelist_max_entries:Size to start the next sub-livelist in a livelist
parm:           zfs_livelist_min_percent_shared:Threshold at which livelist is disabled
parm:           zfs_max_recordsize:Max allowed record size
parm:           zfs_allow_redacted_dataset_mount:Allow mounting of redacted datasets
parm:           zfs_snapshot_history_enabled:Include snapshot events in pool history/events
parm:           zfs_disable_ivset_guid_check:Set to allow raw receives without IVset guids
parm:           zfs_default_bs:Default dnode block shift
parm:           zfs_default_ibs:Default dnode indirect block shift
parm:           zfs_prefetch_disable:Disable all ZFS prefetching
parm:           zfetch_max_streams:Max number of streams per zfetch
parm:           zfetch_min_sec_reap:Min time before stream reclaim
parm:           zfetch_max_sec_reap:Max time before stream delete
parm:           zfetch_min_distance:Min bytes to prefetch per stream
parm:           zfetch_max_distance:Max bytes to prefetch per stream
parm:           zfetch_max_idistance:Max bytes to prefetch indirects for per stream
parm:           zfetch_max_reorder:Max request reorder distance within a stream
parm:           zfetch_hole_shift:Max log2 fraction of holes in a stream
parm:           zfs_pd_bytes_max:Max number of bytes to prefetch
parm:           zfs_traverse_indirect_prefetch_limit:Traverse prefetch number of blocks pointed by indirect block
parm:           ignore_hole_birth:Alias for send_holes_without_birth_time (int)
parm:           send_holes_without_birth_time:Ignore hole_birth txg for zfs send
parm:           zfs_send_corrupt_data:Allow sending corrupt data
parm:           zfs_send_queue_length:Maximum send queue length
parm:           zfs_send_unmodified_spill_blocks:Send unmodified spill blocks
parm:           zfs_send_no_prefetch_queue_length:Maximum send queue length for non-prefetch queues
parm:           zfs_send_queue_ff:Send queue fill fraction
parm:           zfs_send_no_prefetch_queue_ff:Send queue fill fraction for non-prefetch queues
parm:           zfs_override_estimate_recordsize:Override block size estimate with fixed size
parm:           zfs_recv_queue_length:Maximum receive queue length
parm:           zfs_recv_queue_ff:Receive queue fill fraction
parm:           zfs_recv_write_batch_size:Maximum amount of writes to batch into one transaction
parm:           zfs_recv_best_effort_corrective:Ignore errors during corrective receive
parm:           dmu_object_alloc_chunk_shift:CPU-specific allocator grabs 2^N objects at once
parm:           zfs_nopwrite_enabled:Enable NOP writes
parm:           zfs_per_txg_dirty_frees_percent:Percentage of dirtied blocks from frees in one TXG
parm:           zfs_dmu_offset_next_sync:Enable forcing txg sync to find holes
parm:           dmu_prefetch_max:Limit one prefetch call to this size
parm:           dmu_ddt_copies:Override copies= for dedup objects
parm:           ddt_zap_default_bs:DDT ZAP leaf blockshift
parm:           ddt_zap_default_ibs:DDT ZAP indirect blockshift
parm:           zfs_dedup_log_txg_max:Max transactions before starting to flush dedup logs
parm:           zfs_dedup_log_mem_max:Max memory for dedup logs
parm:           zfs_dedup_log_mem_max_percent:Max memory for dedup logs, as % of total memory
parm:           zfs_dedup_prefetch:Enable prefetching dedup-ed blks
parm:           zfs_dedup_log_flush_passes_max:Max number of incremental dedup log flush passes per transaction
parm:           zfs_dedup_log_flush_min_time_ms:Min time to spend on incremental dedup log flush each transaction
parm:           zfs_dedup_log_flush_entries_min:Min number of log entries to flush each transaction
parm:           zfs_dedup_log_flush_flow_rate_txgs:Number of txgs to average flow rates across
parm:           zfs_dbuf_state_index:Calculate arc header index
parm:           dbuf_cache_max_bytes:Maximum size in bytes of the dbuf cache.
parm:           dbuf_cache_hiwater_pct:Percentage over dbuf_cache_max_bytes for direct dbuf eviction.
parm:           dbuf_cache_lowater_pct:Percentage below dbuf_cache_max_bytes when dbuf eviction stops.
parm:           dbuf_metadata_cache_max_bytes:Maximum size in bytes of dbuf metadata cache.
parm:           dbuf_cache_shift:Set size of dbuf cache to log2 fraction of arc size.
parm:           dbuf_metadata_cache_shift:Set size of dbuf metadata cache to log2 fraction of arc size.
parm:           dbuf_mutex_cache_shift:Set size of dbuf cache mutex array as log2 shift.
parm:           zfs_btree_verify_intensity:Enable btree verification. Levels above 4 require ZFS be built with debugging
parm:           brt_zap_prefetch:Enable prefetching of BRT ZAP entries
parm:           brt_zap_default_bs:BRT ZAP leaf blockshift
parm:           brt_zap_default_ibs:BRT ZAP indirect blockshift
parm:           zfs_arc_min:Minimum ARC size in bytes
parm:           zfs_arc_max:Maximum ARC size in bytes
parm:           zfs_arc_meta_balance:Balance between metadata and data on ghost hits.
parm:           zfs_arc_grow_retry:Seconds before growing ARC size
parm:           zfs_arc_shrink_shift:log2(fraction of ARC to reclaim)
parm:           zfs_arc_pc_percent:Percent of pagecache to reclaim ARC to
parm:           zfs_arc_average_blocksize:Target average block size
parm:           zfs_compressed_arc_enabled:Disable compressed ARC buffers
parm:           zfs_arc_min_prefetch_ms:Min life of prefetch block in ms
parm:           zfs_arc_min_prescient_prefetch_ms:Min life of prescient prefetched block in ms
parm:           l2arc_write_max:Max write bytes per interval
parm:           l2arc_write_boost:Extra write bytes during device warmup
parm:           l2arc_headroom:Number of max device writes to precache
parm:           l2arc_headroom_boost:Compressed l2arc_headroom multiplier
parm:           l2arc_trim_ahead:TRIM ahead L2ARC write size multiplier
parm:           l2arc_feed_secs:Seconds between L2ARC writing
parm:           l2arc_feed_min_ms:Min feed interval in milliseconds
parm:           l2arc_noprefetch:Skip caching prefetched buffers
parm:           l2arc_feed_again:Turbo L2ARC warmup
parm:           l2arc_norw:No reads during writes
parm:           l2arc_meta_percent:Percent of ARC size allowed for L2ARC-only headers
parm:           l2arc_rebuild_enabled:Rebuild the L2ARC when importing a pool
parm:           l2arc_rebuild_blocks_min_l2size:Min size in bytes to write rebuild log blocks in L2ARC
parm:           l2arc_mfuonly:Cache only MFU data from ARC into L2ARC
parm:           l2arc_exclude_special:Exclude dbufs on special vdevs from being cached to L2ARC if set.
parm:           zfs_arc_lotsfree_percent:System free memory I/O throttle in bytes
parm:           zfs_arc_sys_free:System free memory target size in bytes
parm:           zfs_arc_dnode_limit:Minimum bytes of dnodes in ARC
parm:           zfs_arc_dnode_limit_percent:Percent of ARC meta buffers for dnodes
parm:           zfs_arc_dnode_reduce_percent:Percentage of excess dnodes to try to unpin
parm:           zfs_arc_eviction_pct:When full, ARC allocation waits for eviction of this % of alloc size
parm:           zfs_arc_evict_batch_limit:The number of headers to evict per sublist before moving to the next
parm:           zfs_arc_prune_task_threads:Number of arc_prune threads
parm:           zstd_earlyabort_pass:Enable early abort attempts when using zstd
parm:           zstd_abort_size:Minimal size of block to attempt early abort
parm:           zfs_max_dataset_nesting:Limit to the amount of nesting a path can have. Defaults to 50.
parm:           zfs_fletcher_4_impl:Select fletcher 4 implementation.
parm:           zfs_sha512_impl:Select SHA512 implementation.
parm:           zfs_sha256_impl:Select SHA256 implementation.
parm:           icp_gcm_impl:Select gcm implementation.
parm:           zfs_blake3_impl:Select BLAKE3 implementation.
parm:           icp_aes_impl:Select aes implementation.
```
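The `vermagic` field in the `modinfo zfs` output is what ties a DKMS-built module to one specific kernel build (here `6.12.31-current-rockchip64`). A minimal sketch for checking it against the running kernel, assuming a POSIX shell with kmod's `modinfo` (whose `-F` option prints a single field) and `uname`; the helper name `vermagic_matches` is hypothetical and not part of DKMS, kmod or Armbian:

```shell
#!/bin/sh
# Hypothetical helper (not part of DKMS, kmod or Armbian).
# Succeeds (exit status 0) if the kernel release recorded at the start of a
# module's vermagic string equals the given kernel release.
#   $1: vermagic string, e.g. "6.12.31-current-rockchip64 SMP preempt mod_unload aarch64"
#   $2: kernel release, e.g. "6.12.31-current-rockchip64"
vermagic_matches() {
    # The release is the first space-separated word of vermagic:
    # strip everything from the first space onwards.
    module_release=${1%% *}
    [ "$module_release" = "$2" ]
}

# Usage on a live system where the zfs module is installed:
#   if vermagic_matches "$(modinfo -F vermagic zfs)" "$(uname -r)"; then
#       echo "zfs.ko matches the running kernel"
#   else
#       echo "zfs.ko was built for a different kernel"
#   fi
```

This only compares the release string; it is a quick sanity check, not a replacement for the kernel's own checks when the module is loaded.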

-

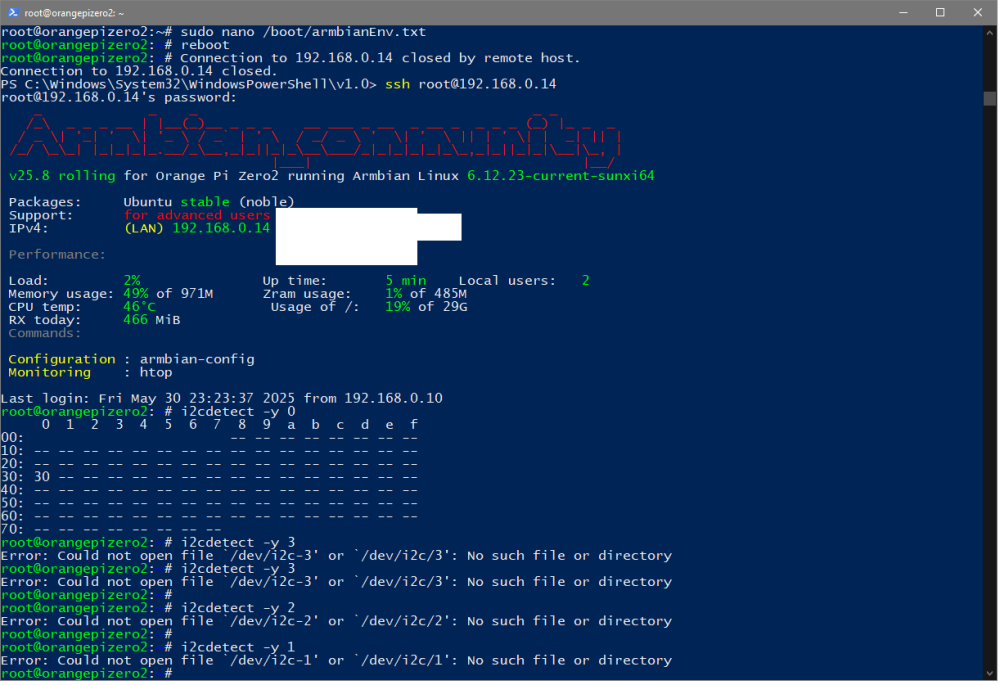

I2C not working on Orange Pi Zero2

Yordan Yanakiev replied to Yordan Yanakiev's topic in Allwinner sunxi

It seems like the config should distinguish by model, not by... I don't even know what it is. Let's say: config->OrangePi2->hardware->peripherals->i2c->enumeration of the I2C buses.

-

Hi, I could try to help with maintenance, but first we should find where the problem is. Even if we ignore the aic8800 driver (I can confirm that, when it works, it is indeed crap, and the WLAN card occasionally disconnects from USB), zfs-dkms still doesn't work. The latest version where both drivers were working was 6.11.2. I'm currently compiling 6.11.9 to see whether the DKMS drivers work there. I suspect that the kernel headers for 6.12 and later kernels are to blame.

- Today

-

Welcome to the club. Perhaps you would rather help us maintain and fix what is possible, so let me steer you away from things that are a complete waste of time, such as this one. DKMS works on Armbian, but whether this (shit) driver works is another question. In some cases it takes years before a driver becomes usable ... and even then performance still sucks. https://docs.armbian.com/WifiPerformance/#xradio-xr819 AFAIK, there is no reliable driver for aic8800. Sorry for bringing bad news ...

-

All the kernel panic variants you have shown here occur on v6.12.23, and they all happen randomly. I suspect they may be caused by bad (faulty) blocks on the storage device. This is easy to check: connect the SD card to a Linux computer via an adapter and run: sudo fsck.ext4 /dev/sdX1
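If you want to script that check and read its result, here is a minimal Python 3.9+ sketch. It assumes /dev/sdX1 (a placeholder, as in the post) is the unmounted SD card partition and that you run it as root. The exit-status bits follow the e2fsck(8) man page, where the status is a bitwise OR of the listed values; `-n` opens the filesystem read-only and answers "no" to every prompt, so nothing is modified. Note that fsck.ext4 checks filesystem consistency; it only scans the medium for unreadable blocks when run with `-c` (which calls badblocks). The helper names are hypothetical.

```python
import subprocess

# Exit-status bits documented in e2fsck(8); the status is a bitwise OR of these.
FSCK_BITS = {
    1: "file system errors corrected",
    2: "file system errors corrected, system should be rebooted",
    4: "file system errors left uncorrected",
    8: "operational error",
    16: "usage or syntax error",
    32: "check canceled by user request",
    128: "shared library error",
}


def decode_fsck_status(status: int) -> list[str]:
    """Turn an fsck.ext4 exit status into a list of human-readable findings."""
    if status == 0:
        return ["no errors"]
    return [text for bit, text in FSCK_BITS.items() if status & bit]


def check_partition(device: str) -> list[str]:
    """Run a forced, read-only check of an unmounted ext4 partition (needs root)."""
    # -f: check even if the filesystem is marked clean
    # -n: open read-only and answer "no" to every repair prompt
    result = subprocess.run(["fsck.ext4", "-f", "-n", device])
    return decode_fsck_status(result.returncode)
```

For example, `check_partition("/dev/sdX1")` returning `["file system errors left uncorrected"]` would mean the read-only pass found problems that a normal (non `-n`) run would offer to fix.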

-

linux sunxi current kernel crash

Johnny on the couch replied to Johnny on the couch's topic in Allwinner sunxi

Sometimes the panic happens right after a reboot, sometimes after a few hours of uptime. Panic 1 from the previous post happened while running apt update, the 2nd while compiling zfs-dkms, and the 3rd I don't remember. The same Noble image works on eMMC without panics, for now. I'll try to reproduce it with another SD card.

-

When you start the device from a fresh operating system image, does the kernel panic appear? After what action does the kernel panic?

-

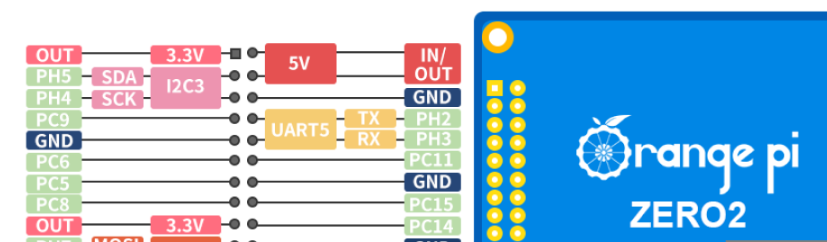

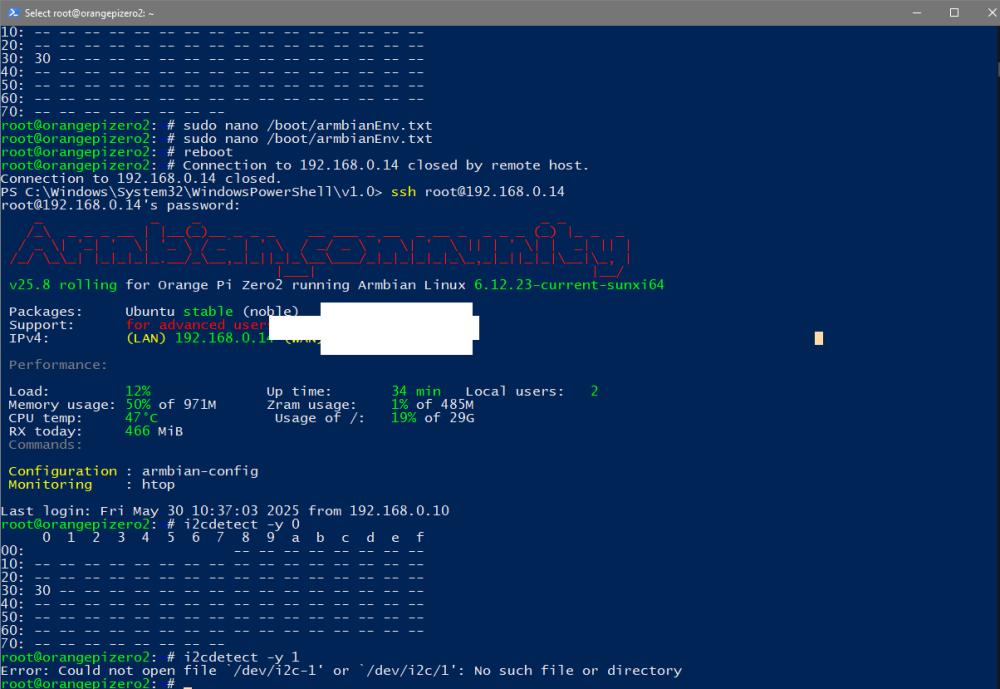

Yes. In overlays, i2c3-ph appears in the system as i2c-1 on OZPI v2/v3 boards, on pins 3 and 5. So you can check the devices connected to pins 3 and 5 with: i2cdetect -y 1
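To turn the `i2cdetect -y 1` table into a plain list of addresses, a Python 3.9+ sketch like the one below could help. It assumes the standard i2c-tools layout: a `NN:` row label followed by three-character cells, where `--` means no answer, a hex number means a device answered, and `UU` means the address is already claimed by a kernel driver. `parse_i2cdetect` and `scan_bus` are hypothetical helpers, not part of i2c-tools.

```python
import re
import subprocess

ROW = re.compile(r"^([0-7]0):")        # row labels 00:, 10:, ..., 70:
CELL = re.compile(r"[0-9a-f]{2}|UU")   # responding address, or address claimed by a driver


def parse_i2cdetect(output: str) -> dict[int, str]:
    """Map each populated I2C address to 'device' or 'in use by driver'."""
    found = {}
    for line in output.splitlines():
        row = ROW.match(line)
        if not row:
            continue  # column header or blank line
        base = int(row.group(1), 16)
        cells = line[row.end():]  # one space, then 3-character cells ("xx ")
        for cell in CELL.finditer(cells):
            column = (cell.start() - 1) // 3
            status = "in use by driver" if cell.group() == "UU" else "device"
            found[base + column] = status
    return found


def scan_bus(bus: int = 1) -> dict[int, str]:
    """Run i2cdetect non-interactively (-y) on /dev/i2c-<bus> and parse the table."""
    out = subprocess.run(["i2cdetect", "-y", str(bus)],
                         capture_output=True, text=True, check=True).stdout
    return parse_i2cdetect(out)
```

Parsing by column position rather than by splitting on whitespace matters for `UU` cells, which do not carry their own address, and for the first row, where the unprobed addresses 0x00 to 0x02 are printed as blanks.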

-

Trouble getting hardware decoding to work on Rock 5 ITX

bucknaked posted a topic in Radxa Rock 5 ITX

I had a plan to use the Radxa Rock 5 ITX+ as a Moonlight client device to stream games from my desktop computer to my living-room TV, playing with my 8BitDo Ultimate controller. On paper, the board has all the bells and whistles needed to do it, but I am having trouble getting hardware decoding of H264 and/or HEVC to work as expected. From what I gather, I need mesa-vpu support, but the documentation I find is very scattered, and repositories that once held the needed resources are no longer available. I am currently running Armbian_25.2.2_Rock-5-itx_bookworm_vendor_6.1.99_cinnamon-backported-mesa_desktop.img (build date 21 February 2025), downloaded from Armbian.com. However, hardware decoding does not work, despite the image being marked "backported mesa". I've been at this for two weeks now with different images, including Armbian and Radxa OS, even going so far as to try building Armbian myself from scratch. The image I am currently using is, however, the most stable I have experienced so far. Am I missing something? How do I get hardware decoding to work? Edit: Link to armbianmonitor output

-

Even booting an image built with compile.sh and trying to load the module doesn't work:
root@longanpi-3h:~# modprobe aic_btusb_usb
modprobe: ERROR: could not insert 'aic_btusb_usb': Exec format error
root@longanpi-3h:~# dmesg | tail
[ 7.172720] systemd[1]: Started systemd-rfkill.service - Load/Save RF Kill Switch Status.
[ 7.390663] systemd[1]: Finished armbian-ramlog.service - Armbian memory supported logging.
[ 7.437995] systemd[1]: Starting systemd-journald.service - Journal Service...
[ 7.445699] systemd[1]: Finished ldconfig.service - Rebuild Dynamic Linker Cache.
[ 7.497556] systemd-journald[412]: Collecting audit messages is disabled.
[ 7.588956] systemd[1]: Started systemd-journald.service - Journal Service.
[ 7.646152] systemd-journald[412]: Received client request to flush runtime journal.
[ 9.628132] EXT4-fs (mmcblk0p1): resizing filesystem from 446464 to 932864 blocks
[ 9.725518] EXT4-fs (mmcblk0p1): resized filesystem to 932864
[ 92.076404] module aic_btusb: .gnu.linkonce.this_module section size must match the kernel's built struct module size at run time
root@longanpi-3h:~# modinfo /lib/modules/6.12.30-current-sunxi64/updates/dkms/aic_btusb_usb.ko
filename: /lib/modules/6.12.30-current-sunxi64/updates/dkms/aic_btusb_usb.ko
license: GPL
version: 2.1.0
description: AicSemi Bluetooth USB driver version
author: AicSemi Corporation
import_ns: VFS_internal_I_am_really_a_filesystem_and_am_NOT_a_driver
srcversion: B6C3A1904D0AFEA27CE3E93
alias: usb:vA69Cp88DCd*dc*dsc*dp*icE0isc01ip01in*
alias: usb:vA69Cp8D81d*dc*dsc*dp*icE0isc01ip01in*
alias: usb:vA69Cp8801d*dc*dsc*dp*icE0isc01ip01in*
depends:
name: aic_btusb
vermagic: 6.12.30-current-sunxi64 SMP mod_unload aarch64
parm: btdual:int
parm: bt_support:int
parm: mp_drv_mode:0: NORMAL; 1: MP MODE (int)
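One way to narrow this down is to compare the DKMS module's `vermagic` with the running kernel release and with a module that shipped with the kernel package. The Python 3.9+ sketch below does that from `modinfo` output; the helper names and the choice of reference module are assumptions for illustration. A matching vermagic does not prove compatibility: the dmesg error above is about the size of `struct module`, whose layout depends on the kernel configuration, so building against headers prepared with a different .config than the running kernel is one possible way to end up with exactly this failure even when the release string matches.

```python
import platform
import subprocess
from typing import Optional


def parse_modinfo(text: str) -> dict[str, list[str]]:
    """Parse `modinfo` output ("key: value" per line) into key -> list of values."""
    fields: dict[str, list[str]] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            fields.setdefault(key.strip(), []).append(value.strip())
    return fields


def vermagic_tokens(modinfo_text: str) -> list[str]:
    """Return vermagic split into words: [release, flag, flag, ...]."""
    return parse_modinfo(modinfo_text).get("vermagic", [""])[0].split()


def release_mismatch(modinfo_text: str, running: Optional[str] = None) -> Optional[str]:
    """Describe a kernel-release mismatch, or return None if the release matches."""
    running = running or platform.release()  # same value as `uname -r`
    tokens = vermagic_tokens(modinfo_text)
    if not tokens:
        return "module has no vermagic"
    if tokens[0] != running:
        return f"module built for {tokens[0]}, running {running}"
    return None


def flag_diff(module_text: str, reference_text: str) -> dict[str, set[str]]:
    """Compare vermagic flags of a DKMS module against an in-tree reference module."""
    mod = set(vermagic_tokens(module_text)[1:])
    ref = set(vermagic_tokens(reference_text)[1:])
    return {"only_in_module": mod - ref, "only_in_reference": ref - mod}


def modinfo(path_or_name: str) -> str:
    """Capture `modinfo` output for a module file path or module name."""
    return subprocess.run(["modinfo", path_or_name],
                          capture_output=True, text=True, check=True).stdout
```

For example, `flag_diff(modinfo("/lib/modules/6.12.30-current-sunxi64/updates/dkms/aic_btusb_usb.ko"), modinfo(ref))`, with `ref` being any module from the kernel package itself (for instance one listed by `lsmod`), shows whether the two were built with different vermagic flags.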

-

I2C not working on Orange Pi Zero2

Yordan Yanakiev replied to Yordan Yanakiev's topic in Allwinner sunxi

Good lord. i2c3-ph is actually i2c-1, which is i2c-0 since it is on pins 3 and 5. LOL! This is literally a total mess.

-

How do I compile DKMS modules on the board? I have been trying for the past week without success and am starting to go crazy... The last kernel that worked is 6.11.2. I tried zfs-dkms (from the apt repositories and from a .deb built manually from the zfs git repo) and the aic8800 drivers. I've tried the Bookworm image and the Noble image, the -current and -edge kernels, and many kernel revisions. I tried building kernels (image & headers) with compile.sh, with and without Docker. I updated my x86 Debian to Trixie. The compilers are the same on the build host and on the board:
longanpi-3h# dpkg -l | grep -i gcc
ii gcc 4:13.2.0-7ubuntu1 arm64 GNU C compiler
ii gcc-13 13.3.0-6ubuntu2~24.04 arm64 GNU C compiler
ii gcc-13-aarch64-linux-gnu 13.3.0-6ubuntu2~24.04 arm64 GNU C compiler for the aarch64-linux-gnu architecture
ii gcc-13-base:arm64 13.3.0-6ubuntu2~24.04 arm64 GCC, the GNU Compiler Collection (base package)
...
root@4585c06e2f54:/armbian# dpkg -l | grep -i gcc
ii gcc 4:13.2.0-7ubuntu1 amd64 GNU C compiler
ii gcc-13 13.3.0-6ubuntu2~24.04 amd64 GNU C compiler
ii gcc-13-aarch64-linux-gnu 13.3.0-6ubuntu2~24.04cross1 amd64 GNU C compiler for the aarch64-linux-gnu architecture
ii gcc-13-aarch64-linux-gnu-base:amd64 13.3.0-6ubuntu2~24.04cross1 amd64 GCC, the GNU Compiler Collection (base package)
...
Or even better: is it possible to build those drivers on the host PC somehow? 16 x86 CPUs are much, much faster than 4 A53s.

-

linux sunxi current kernel crash

Johnny on the couch replied to Johnny on the couch's topic in Allwinner sunxi

Hi, I am not aware of the older Longan image. I've used it for a few months with the Orange Pi Zero3 image without kernel panics. I've collected a few more kernel crashes on the same image (latest Ubuntu Noble, freshly installed on an SD card): apt update over USB WiFi with LAN still connected, DKMS aic8800 built, freshly reinstalled image. While doing apt install zfs-dkms, panic3:

-

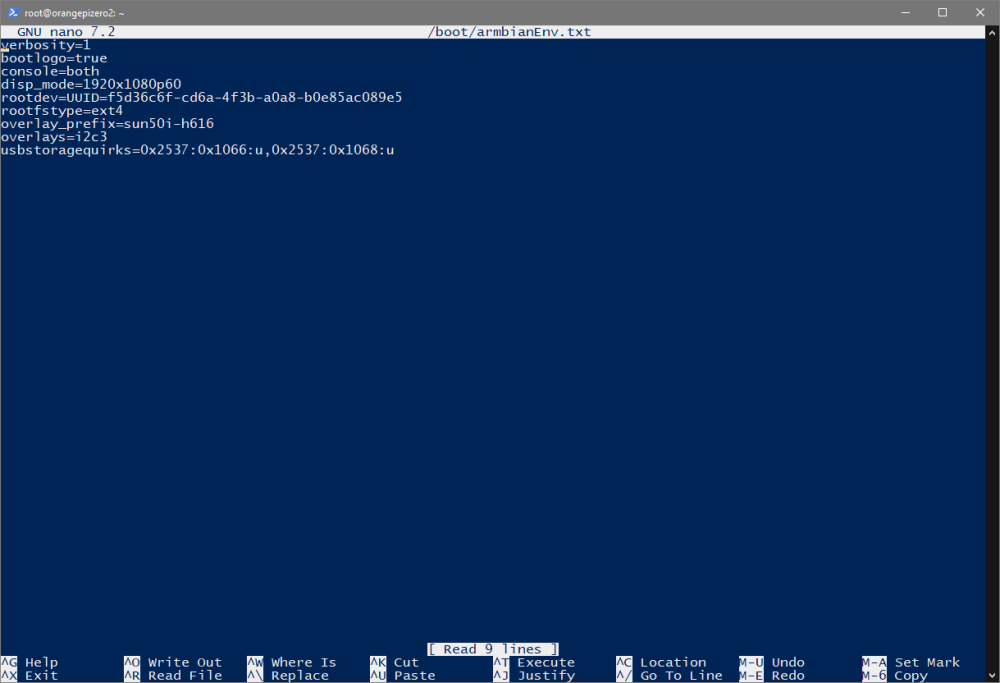

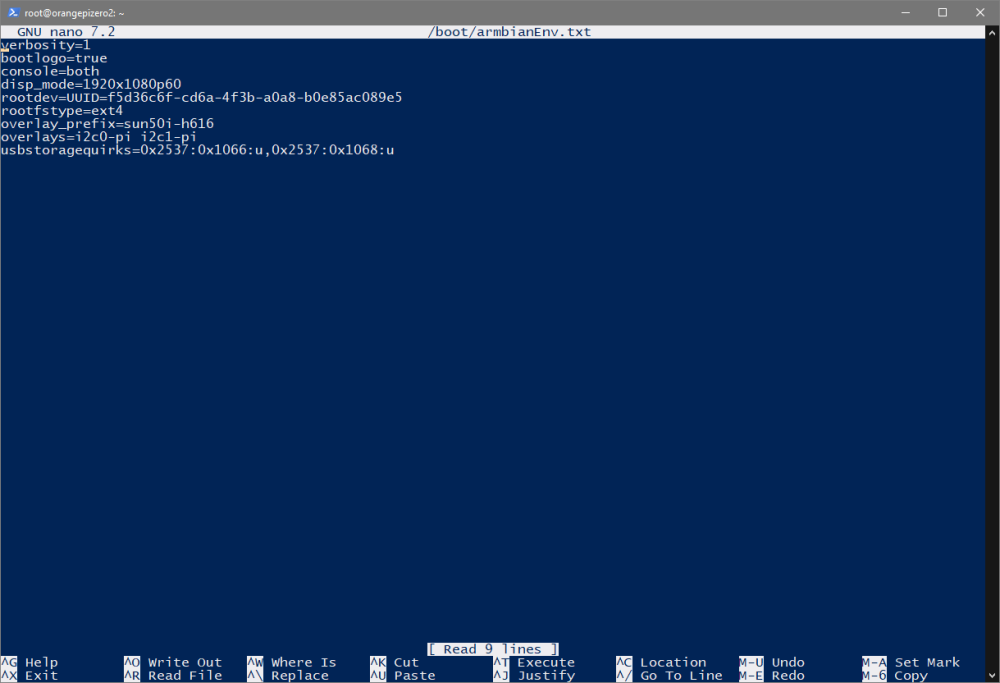

I confirm that armbian-config has a problem adding the overlay prefix to overlays=; please look at: https://github.com/armbian/configng/issues/592 So I must add the devices manually in armbianEnv.txt. For OZPI v3, to use I2C I have:
overlay_prefix=sun50i-h616
overlays=i2c3-ph
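To double-check which overlay files the bootloader will try to load from such an armbianEnv.txt, the entries can be expanded into file names. This Python 3.9+ sketch assumes the Armbian sunxi boot script builds each name as `<overlay_prefix>-<overlay>.dtbo` and looks for it in /boot/dtb/allwinner/overlay/; please verify both against the boot.cmd on your image. The helper names are hypothetical.

```python
import os


def parse_armbian_env(text: str) -> dict[str, str]:
    """Parse armbianEnv.txt-style key=value lines (blank lines and # comments ignored)."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            env[key.strip()] = value.strip()
    return env


def overlay_paths(env: dict[str, str],
                  overlay_dir: str = "/boot/dtb/allwinner/overlay") -> list[str]:
    """Expand overlays= into .dtbo paths, assuming <prefix>-<name>.dtbo naming."""
    prefix = env.get("overlay_prefix", "")
    return [os.path.join(overlay_dir, f"{prefix}-{name}.dtbo")
            for name in env.get("overlays", "").split()]
```

On the board, `[p for p in overlay_paths(env) if not os.path.exists(p)]` would then list any overlay the boot script cannot find, which usually points to a typo in the name or a wrong prefix.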

-

I assume it should work with the vendor kernel. Maybe a node is missing in the device tree. Maybe firmware blobs are missing, or the same as above. Expected, since neither SPI nor eMMC is present by default. Direct boot from NVMe isn't supported by any Rockchip SoC.

-

CSC Armbian for RK322x TV box boards

Almero Ramadhan Insan wibowo replied to jock's topic in Rockchip CPU Boxes

Does anyone know how to get into maskrom mode and access UART on this RK3228A board from an MXQ Pro 4K 5G? Pushing the little button makes it show up as an Amlogic device in Device Manager, but the actual chip is Rockchip. Sorry for the bad-quality images.

- Yesterday

-

Good question. I compared the predefined default kernels from Canonical (6.8) and Debian (6.10) on the same hardware twice:
1) qemu arm64 emulator on an x86_64 host with the same parameters: lines 6 and 7 on the screenshot
2) qemu x86_64 emulator on an x86_64 host with the same parameters: lines 4 and 5 on the screenshot

-

jimbolaya is the first prize winner in the Giveaway: FriendlyElec Nanopi R3S

-

I2C not working on Orange Pi Zero2

Yordan Yanakiev replied to Yordan Yanakiev's topic in Allwinner sunxi

-

Armbian for an old Allwinner A10 tablet

Ryzer replied to thewiseguyshivam's topic in Allwinner sunxi

All Armbian board configuration files live in build/config/boards/. Besides the Pcduino2, Cubieboard and OLinuXino-Lime boards, I don't know which other A10 boards still have some level of support. After that, the next step would probably be to confirm that it works with U-Boot. Here you have another config file and a DTS. For any tweaks to U-Boot you will need to pass uboot-patch when running compile.sh. Note that when you do this, you will need to open a new tab and navigate to cache/sources/u-boot-worktree/. Similarly, for the kernel you would navigate to cache/sources/kernel/. Currently 6.12 is "current" while 6.14 is "edge". Hope this helps, Ryzer

-

I2C not working on Orange Pi Zero2

Yordan Yanakiev replied to Yordan Yanakiev's topic in Allwinner sunxi

So, what should my armbianEnv file look like?

-

My mistake: I was going by earlier comments in the Orange Pi Zero3 thread, but after reviewing again it looks like the port part of the overlay name is no longer necessary either, so, as Kris777 suggested, just the interface name should hopefully suffice now. According to the wiki, the main I2C interface should be i2c3: https://linux-sunxi.org/Xunlong_Orange_Pi_Zero2
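Since the /dev/i2c-N numbers are assigned at boot and are not guaranteed to match the controller names used in overlays and on the wiki, it can help to ask the kernel which controller each bus belongs to. A minimal Python 3.9+ sketch, assuming the usual sysfs layout where each adapter directory /sys/bus/i2c/devices/i2c-N carries an `of_node` link to the device-tree node it was created from; `i2c_bus_map` is a hypothetical helper.

```python
import os
from pathlib import Path


def i2c_bus_map(sysfs_dir: str = "/sys/bus/i2c/devices") -> dict[str, str]:
    """Map each i2c-N adapter to the name of its device-tree node."""
    mapping = {}
    # Adapters are named i2c-N; client devices (e.g. 1-0050) do not match the glob.
    for adapter in sorted(Path(sysfs_dir).glob("i2c-*")):
        of_node = adapter / "of_node"
        if of_node.is_symlink():
            # The link target ends in the node name, e.g. .../soc/i2c@<unit-address>
            mapping[adapter.name] = os.path.basename(os.readlink(of_node))
        else:
            mapping[adapter.name] = "(no device-tree node)"
    return mapping
```

Comparing the unit addresses it prints with the controller addresses in the SoC's device tree then shows which i2c-N is the i2c3 controller.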

-

For production, boxes are much more interesting than boards. We just need to deal with an honest manufacturer. The last DTB from @mmie4jbcu worked very well for me on an X88 Pro 20.

-

I2C not working on Orange Pi Zero2

Yordan Yanakiev replied to Yordan Yanakiev's topic in Allwinner sunxi

-

Armbian for an old Allwinner A10 tablet

thewiseguyshivam replied to thewiseguyshivam's topic in Allwinner sunxi

Hi! Great, that's a good starting point. So where could I find a reference file I can edit to match my tablet? And where should I place this file? How would this file (possibly) connect to a DTS? (This is just out of curiosity.) Thank you!
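For finding a reference file to start from, one option is to scan the board configs in an armbian/build checkout for boards of the same SoC family. This Python 3.9+ sketch assumes each config file sets a line such as `BOARDFAMILY="sun4i"` and that `sun4i` is the family name used for A10 boards; both are assumptions to check against the actual files, and `boards_in_family` is a hypothetical helper.

```python
import re
from pathlib import Path

# Matches e.g. BOARDFAMILY="sun4i" (quotes optional) at the start of a line.
FAMILY_RE = re.compile(r'^\s*BOARDFAMILY="?([\w-]+)"?', re.MULTILINE)


def boards_in_family(config_dir: str, family: str) -> list[str]:
    """Return names of board config files whose BOARDFAMILY equals `family`."""
    matches = []
    for path in sorted(Path(config_dir).iterdir()):
        if not path.is_file():
            continue
        found = FAMILY_RE.search(path.read_text(errors="replace"))
        if found and found.group(1) == family:
            matches.append(path.name)
    return matches
```

Run from the root of the checkout, `boards_in_family("config/boards", "sun4i")` would list candidate files to copy and adapt.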