Showing results for tags 'helios64'.

-

I literally registered to post this complaint here. How STUPID it is to have an M.2 SATA SHARED WITH HDD1!! IT DEFEATS THE WHOLE PURPOSE OF THIS ENCLOSURE. PERIOD!!!! My mistake was that I did not know what it meant that M.2 was SHARED before I purchased this enclosure, and now I need to expand beyond the measly 16GB of your eMMC and I found out THE PAINFUL WAY that IT IS NOT POSSIBLE because of the shared SATA port. IT'S BEYOND FRUSTRATING. YOU CANNOT EVEN IMAGINE HOW STUPID THIS DECISION OF YOURS IS. IT JUST LITERALLY DOWNGRADED YOUR NAS FROM AN EXCELLENT ENCLOSURE TO OVERPRICED JUNK THAT I HAD TO WAIT MORE THAN 6 MONTHS FOR. AND THERE IS NO WAY TO RETURN IT TO YOU AND GET MY MONEY BACK. What should I do with my RAID now that I want to install an SSD? Should I now use the RAID as slow storage and have symbolic links to make sure that I don't run out of space on the eMMC? IF THERE WAS SOME WAY TO RATE YOUR ENCLOSURE SO NOBODY ELSE MAKES THE SAME MISTAKE I DID, I WOULD CERTAINLY DO THAT.

-

Anyone know a solution for this?

Linux vlib2 5.9.14-rockchip64 #20.11.4 SMP PREEMPT Tue Dec 15 08:52:20 CET 2020 aarch64 aarch64 aarch64 GNU/Linux

Jan 08 14:27:05 vlib2 systemd[2481]: Starting Timed resync...
Jan 08 14:27:05 vlib2 profile-sync-daemon[11324]: WARNING: Do not call /usr/bin/profile-sync-daemon as root.
Jan 08 14:27:05 vlib2 systemd[2481]: psd-resync.service: Main process exited, code=exited, status=1/FAILURE
Jan 08 14:27:05 vlib2 systemd[2481]: psd-resync.service: Failed with result 'exit-code'.
Jan 08 14:27:05 vlib2 systemd[2481]: Failed to start Timed resync.

-

Hello everyone, If anyone would like to have a helios64 without having to wait for the next batch, mine is for sale. This is the complete bundle, it's in very good condition. I will post pictures very soon. I live in France, it would be better if you are in Europe so that the shipping costs remain reasonable...

-

Hello Guys, I have a question that may be unwise, but it's hard to find information about it. I would like to protect my Helios64 or its power supply somehow to minimize the risk of fire. Do you have any suggestions for minimizing that risk? Thank you in advance for your help.

-

I haven't been able to find any specs on the eMMC module in the helios64. I've found some posts online indicating that it is a Samsung KLMAG1JETD-B041, but I haven't been able to find any info on the write endurance of this module (not sure if it is standard for eMMC vendors to provide that information like SSD vendors). I have been able to run `mmc extcsd read /dev/mmcblk1`, which shows that the eMMC life time estimation is between 0 and 10%, but since this value is in 10% increments, it's not very helpful.

I've had issues with the default ramlog-based /var/log, so I had to turn it off. I've increased the size to 250MB but it regularly fills up because the 'sysstat', 'pcp' (for cockpit-pcp), and 'atop' packages all write persistent logs into /var/log, which 'armbian-truncate-logs' doesn't clean up. I could probably update the armbian-truncate-logs script to support these tools if that was the only issue. However, I've also enabled the systemd persistent journal, and although I can see that armbian-truncate-logs is calling journalctl, I still get messages about corrupt journals. Also, `journalctl` only reads logs from /var/log/journal, not /var/log.hdd/journal, so it is only able to show the current day's logs, which isn't very useful.

So I'm trying to determine whether it is safe to have /var/log on disk without the ramlog overlay. Any recommendations? I do have my root filesystem on btrfs, and /var/log in a separate subvolume. Maybe there's some way to tweak how frequently /var/log is synced to disk, but I don't know of any mechanism off the top of my head.

Thanks, Mike
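The 10% granularity mentioned above comes from how the eMMC life-time registers are encoded. The following is a rough sketch, not something from the original post: it assumes the JEDEC eMMC 5.0+ encoding (0x01 means 0-10% of estimated life used, up to 0x0A for 90-100%, and 0x0B for "exceeded"), and it assumes that `mmc extcsd read` prints lines containing `EXT_CSD_DEVICE_LIFE_TIME_EST_TYP_A` followed by a hex value. The function names and the `SAMPLE` text are hypothetical.

```python
import re

def decode_life_time(value: int) -> str:
    """Map a DEVICE_LIFE_TIME_EST register value to a readable range.

    Assumes the JEDEC eMMC 5.0+ encoding; other values are treated as reserved.
    """
    if value == 0x00:
        return "not defined"
    if 0x01 <= value <= 0x0A:
        return f"{(value - 1) * 10}-{value * 10}% of estimated life used"
    if value == 0x0B:
        return "exceeded estimated life"
    return "reserved"

def parse_extcsd_life_time(text: str) -> dict:
    """Extract the TYP_A / TYP_B estimates from `mmc extcsd read` style output."""
    pattern = re.compile(
        r"EXT_CSD_DEVICE_LIFE_TIME_EST_TYP_([AB])\]?:\s*(0x[0-9a-fA-F]+)"
    )
    return {
        f"TYP_{m.group(1)}": decode_life_time(int(m.group(2), 16))
        for m in pattern.finditer(text)
    }

# Hypothetical sample resembling the relevant lines of the tool's output.
SAMPLE = """\
eMMC Life Time Estimation A [EXT_CSD_DEVICE_LIFE_TIME_EST_TYP_A]: 0x01
eMMC Life Time Estimation B [EXT_CSD_DEVICE_LIFE_TIME_EST_TYP_B]: 0x01
"""

if __name__ == "__main__":
    print(parse_extcsd_life_time(SAMPLE))
```

Because the register only moves in 10% steps, logging the decoded value periodically is probably the only way to see a trend over time.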

-

My Helios64 is "far away" and I am afraid to change the network configuration, because if I miss something I could lose connectivity to the box. I would like to attach a COM-to-IP adapter so that I always have a serial console available, but... what do I actually need? A "normal" COM-to-IP adapter provides a COM port. The Helios64 has COM-in-USB. So...? How do I convert the Helios64's serial console into something accessible via LAN?

-

I know from the thread that WOL is currently not supported in kernel 5.x. However, the specs of the Helios64 showed, and still show, that the interfaces have WOL support:
* https://wiki.kobol.io/helios64/intro/#board
* https://kobol.io under the Multi-Gigabit Ethernet section

What is missing? How can we help?

-

To continue the discussion from Helios64 Support:

Currently there is no plan to support disabling the power, but it's interesting to know of such a use case. If we implemented this feature, you would need to go to the u-boot prompt to enable and disable the power. Would this be acceptable for you?

In the Linux device tree, the power rail is declared as a regulator node with the regulator-always-on property. This prevents the kernel (and the user) from turning off the regulator. Unless we are able to create a device node for the SATA port to act as a consumer of the regulator, we can't remove the regulator-always-on property.

-

Hi! Does anyone have experience comparing ZFS performance on different operating systems on the same hardware? It would be very interesting to compare the performance on FreeBSD and Linux.

-

Hi, I have major issues with my helios64 / ZFS setup - maybe anyone can give me a good hint. I'm running the following system:

Linux helios64 5.9.14-rockchip64 #20.11.4 SMP PREEMPT Tue Dec 15 08:52:20 CET 2020 aarch64 GNU/Linux

I have three WD 4TB Plus disks, and each disk is encrypted with LUKS. The three encrypted disks are bundled into a raidz1 zpool "archive" using ZFS. Basically this setup works pretty well, but under rather high disk usage, e.g. during a scrub, the whole pool degrades due to read / CRC errors. As an example:

zpool status -x
  pool: archive
 state: DEGRADED
status: One or more devices are faulted in response to persistent errors.
        Sufficient replicas exist for the pool to continue functioning in a
        degraded state.
action: Replace the faulted device, or use 'zpool clear' to mark the device
        repaired.
  scan: scrub in progress since Sun Jan 10 09:54:30 2021
        613G scanned at 534M/s, 363G issued at 316M/s, 1.46T total
        7.56M repaired, 24.35% done, 0 days 01:00:57 to go
config:

        NAME        STATE     READ WRITE CKSUM
        archive     DEGRADED     0     0     0
          raidz1-0  DEGRADED    87     0     0
            EncSDC  DEGRADED    78     0     0  too many errors (repairing)
            EncSDD  FAULTED     64     0     0  too many errors (repairing)
            EncSDE  DEGRADED    56     0    20  too many errors (repairing)

errors: No known data errors

dmesg shows a lot of these errors:

[Jan10 09:59] ata3.00: NCQ disabled due to excessive errors
[ +0.000009] ata3.00: exception Emask 0x2 SAct 0x18004102 SErr 0x400 action 0x6
[ +0.000002] ata3.00: irq_stat 0x08000000
[ +0.000004] ata3: SError: { Proto }
[ +0.000006] ata3.00: failed command: READ FPDMA QUEUED
[ +0.000007] ata3.00: cmd 60/00:08:e8:92:01/08:00:68:00:00/40 tag 1 ncq dma 1048576 in
             res 40/00:d8:68:8b:01/00:00:68:00:00/40 Emask 0x2 (HSM violation)
[ +0.000002] ata3.00: status: { DRDY }
[ +0.000004] ata3.00: failed command: READ FPDMA QUEUED
[ +0.000006] ata3.00: cmd 60/80:40:e8:9a:01/03:00:68:00:00/40 tag 8 ncq dma 458752 in
             res 40/00:d8:68:8b:01/00:00:68:00:00/40 Emask 0x2 (HSM violation)
[ +0.000002] ata3.00: status: { DRDY }
[ +0.000003] ata3.00: failed command: READ FPDMA QUEUED
[ +0.000005] ata3.00: cmd 60/80:70:68:9e:01/00:00:68:00:00/40 tag 14 ncq dma 65536 in
             res 40/00:d8:68:8b:01/00:00:68:00:00/40 Emask 0x2 (HSM violation)
[ +0.000003] ata3.00: status: { DRDY }
[ +0.000002] ata3.00: failed command: READ FPDMA QUEUED
[ +0.000006] ata3.00: cmd 60/80:d8:68:8b:01/01:00:68:00:00/40 tag 27 ncq dma 196608 in
             res 40/00:d8:68:8b:01/00:00:68:00:00/40 Emask 0x2 (HSM violation)
[ +0.000002] ata3.00: status: { DRDY }
[ +0.000002] ata3.00: failed command: READ FPDMA QUEUED
[ +0.000005] ata3.00: cmd 60/00:e0:e8:8c:01/06:00:68:00:00/40 tag 28 ncq dma 786432 in
             res 40/00:d8:68:8b:01/00:00:68:00:00/40 Emask 0x2 (HSM violation)
[ +0.000002] ata3.00: status: { DRDY }
[ +0.000007] ata3: hard resetting link
[ +0.475899] ata3: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
[ +0.001128] ata3.00: configured for UDMA/133
[ +0.000306] scsi_io_completion_action: 8 callbacks suppressed
[ +0.000009] sd 2:0:0:0: [sdd] tag#1 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=0s
[ +0.000006] sd 2:0:0:0: [sdd] tag#1 Sense Key : 0x5 [current]
[ +0.000003] sd 2:0:0:0: [sdd] tag#1 ASC=0x21 ASCQ=0x4
[ +0.000006] sd 2:0:0:0: [sdd] tag#1 CDB: opcode=0x88 88 00 00 00 00 00 68 01 92 e8 00 00 08 00 00 00
[ +0.000003] print_req_error: 8 callbacks suppressed
[ +0.000003] blk_update_request: I/O error, dev sdd, sector 1744933608 op 0x0:(READ) flags 0x700 phys_seg 16 prio class 0
[ +0.000019] zio pool=archive vdev=/dev/mapper/EncSDC error=5 type=1 offset=893389230080 size=1048576 flags=40080cb0
[ +0.000069] sd 2:0:0:0: [sdd] tag#8 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=0s
[ +0.000005] sd 2:0:0:0: [sdd] tag#8 Sense Key : 0x5 [current]
[ +0.000003] sd 2:0:0:0: [sdd] tag#8 ASC=0x21 ASCQ=0x4
[ +0.000004] sd 2:0:0:0: [sdd] tag#8 CDB: opcode=0x88 88 00 00 00 00 00 68 01 9a e8 00 00 03 80 00 00
[ +0.000004] blk_update_request: I/O error, dev sdd, sector 1744935656 op 0x0:(READ) flags 0x700 phys_seg 7 prio class 0
[ +0.000014] zio pool=archive vdev=/dev/mapper/EncSDC error=5 type=1 offset=893390278656 size=458752 flags=40080cb0
[ +0.000049] sd 2:0:0:0: [sdd] tag#14 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=0x08 cmd_age=0s
[ +0.000004] sd 2:0:0:0: [sdd] tag#14 Sense Key : 0x5 [current]
[ +0.000004] sd 2:0:0:0: [sdd] tag#14 ASC=0x21 ASCQ=0x4
[ +0.000003] sd 2:0:0:0: [sdd] tag#14 CDB: opcode=0x88 88 00 00 00 00 00 68 01 9e 68 00 00 00 80 00 00
[ +0.000004] blk_update_request: I/O error, dev sdd, sector 1744936552 op 0x0:(READ) flags 0x700 phys_seg 16 prio class 0
[ +0.000013] zio pool=archive vdev=/dev/mapper/EncSDC error=5 type=1 offset=893390737408 size=65536 flags=1808b0

The S.M.A.R.T. values of the disks are OK; only "UDMA_CRC_ERROR_COUNT" is increased (values ~25, increasing).

What's also worth mentioning: if I start a scrub I get these errors after about 10 seconds (rather quickly), but it seems that if I let the scrub continue, no more errors occur.

Does this indicate bad disks? The disks themselves do not indicate any problems (the self-test does not show issues). Is this related to the ZFS implementation for Armbian? Is there anything I can do to make this reliable?

Thanks!
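To cross-check which reads are failing, the `CDB:` lines in the log above can be decoded by hand. The following is a minimal sketch, assuming the standard SCSI READ(16) layout (opcode 0x88, an 8-byte big-endian LBA in bytes 2 to 9, a 4-byte transfer length in bytes 10 to 13) and a 512-byte logical sector size. The function name is hypothetical.

```python
def decode_read16_cdb(cdb_hex: str, sector_size: int = 512) -> dict:
    """Decode a SCSI READ(16) CDB given as space-separated hex bytes.

    sector_size is an assumption (512-byte logical sectors).
    """
    cdb = bytes.fromhex(cdb_hex.replace(" ", ""))
    if len(cdb) != 16 or cdb[0] != 0x88:
        raise ValueError("not a 16-byte READ(16) CDB")
    lba = int.from_bytes(cdb[2:10], "big")      # bytes 2..9: logical block address
    blocks = int.from_bytes(cdb[10:14], "big")  # bytes 10..13: transfer length
    return {"lba": lba, "blocks": blocks, "bytes": blocks * sector_size}

if __name__ == "__main__":
    # CDB copied from the tag#1 line of the dmesg output above.
    print(decode_read16_cdb("88 00 00 00 00 00 68 01 92 e8 00 00 08 00 00 00"))
    # {'lba': 1744933608, 'blocks': 2048, 'bytes': 1048576}
```

The decoded LBA matches the `sector 1744933608` reported by `blk_update_request`, and the byte count matches the `dma 1048576` of the failed command, so the failures in this excerpt are large queued reads rather than reads of one fixed bad sector.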

-

I got the Helios64 and have been running it from an external SD card so far, since the other options were not available yet when I first installed it. I'm running openmediavault 5.5 (Armbian 20.11.6 Buster with Linux 5.9.14-rockchip64). Now I wanted to move the system to an SSD and installed a "Crucial MX500 1TB CT1000MX500SSD4" according to https://wiki.kobol.io/helios64/m2/

Unfortunately, the SSD is not detected by the system; see the output of dmesg below. For ata1, it only shows SATA link down. Is there anything I can do to figure out what the problem is? Or is there any reason why this particular SSD would not work?

~$ dmesg | grep ata
[ 0.000000] Memory: 3740032K/4061184K available (14464K kernel code, 2094K rwdata, 5980K rodata, 4224K init, 573K bss, 190080K reserved, 131072K cma-reserved)
[ 0.016073] CPU features: detected: ARM errata 1165522, 1319367, or 1530923
[ 1.394468] libata version 3.00 loaded.
[ 3.019011] dwmmc_rockchip fe320000.mmc: DW MMC controller at irq 28,32 bit host data width,256 deep fifo
[ 3.315946] ata1: SATA max UDMA/133 abar m8192@0xfa010000 port 0xfa010100 irq 240
[ 3.315955] ata2: SATA max UDMA/133 abar m8192@0xfa010000 port 0xfa010180 irq 241
[ 3.315964] ata3: SATA max UDMA/133 abar m8192@0xfa010000 port 0xfa010200 irq 242
[ 3.315972] ata4: SATA max UDMA/133 abar m8192@0xfa010000 port 0xfa010280 irq 243
[ 3.315979] ata5: SATA max UDMA/133 abar m8192@0xfa010000 port 0xfa010300 irq 244
[ 3.628407] ata1: SATA link down (SStatus 0 SControl 300)
[ 13.625910] ata2: softreset failed (1st FIS failed)
[ 14.101801] ata2: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
[ 14.121464] ata2.00: ATA-10: ST4000VN008-2DR166, SC60, max UDMA/133
[ 14.121475] ata2.00: 7814037168 sectors, multi 0: LBA48 NCQ (depth 32), AA
[ 14.123003] ata2.00: configured for UDMA/133
[ 14.601788] ata3: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
[ 14.615989] ata3.00: ATA-10: ST4000VN008-2DR166, SC60, max UDMA/133
[ 14.616001] ata3.00: 7814037168 sectors, multi 0: LBA48 NCQ (depth 32), AA
[ 14.617535] ata3.00: configured for UDMA/133
[ 15.093805] ata4: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
[ 15.113279] ata4.00: ATA-10: ST4000VN008-2DR166, SC60, max UDMA/133
[ 15.113290] ata4.00: 7814037168 sectors, multi 0: LBA48 NCQ (depth 32), AA
[ 15.114802] ata4.00: configured for UDMA/133
[ 15.428239] ata5: SATA link down (SStatus 0 SControl 300)
[ 17.307715] EXT4-fs (mmcblk0p1): mounted filesystem with writeback data mode. Opts: (null)
[ 21.469500] EXT4-fs (md0): mounted filesystem with ordered data mode. Opts: user_xattr,usrjquota=aquota.user,grpjquota=aquota.group,jqfmt=vfsv0,acl
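When comparing boots (for example after reseating the SSD), it can help to reduce the dmesg output above to a per-port link summary. This is a small sketch, assuming the kernel keeps the `ataN: SATA link up|down` wording shown in the log. The function names and the shortened `SAMPLE` are hypothetical.

```python
import re

# Matches e.g. "ata2: SATA link up 6.0 Gbps" and "ata1: SATA link down".
LINK_RE = re.compile(r"(ata\d+): SATA link (up|down)(?: ([\d.]+ Gbps))?")

def sata_link_states(dmesg_text: str) -> dict:
    """Return the last reported link state for each ATA port."""
    states = {}
    for match in LINK_RE.finditer(dmesg_text):
        port, state, speed = match.groups()
        states[port] = f"{state} {speed}" if speed else state
    return states

def ports_without_link(dmesg_text: str) -> list:
    """List the ports whose last reported state is 'down'."""
    return sorted(p for p, s in sata_link_states(dmesg_text).items() if s == "down")

# Lines taken from the post above, shortened to the link messages.
SAMPLE = """\
[ 3.628407] ata1: SATA link down (SStatus 0 SControl 300)
[ 14.101801] ata2: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
[ 14.601788] ata3: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
[ 15.093805] ata4: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
[ 15.428239] ata5: SATA link down (SStatus 0 SControl 300)
"""

if __name__ == "__main__":
    print(sata_link_states(SAMPLE))
    print(ports_without_link(SAMPLE))
```

In practice the input would come from the output of `dmesg`, e.g. piped into the script or captured with `subprocess.run(["dmesg"], capture_output=True, text=True)`.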

-

Hello, I was finishing setting up my Helios64 (system installed on eMMC) with Openmediavault (luks + snapraid + mergerfs + samba) on 3 disks. Everything was working, and I thought I should reboot to make sure that this was indeed the case (especially making sure that the LUKS-locked disks would not be an issue). And what a surprise: after booting up I couldn't ssh in, so I connected the serial console to log in as root. It seems that all services are dead, like below.

# service ssh status
● ssh.service - OpenBSD Secure Shell server
   Loaded: loaded (/lib/systemd/system/ssh.service; enabled; vendor preset: enab
   Active: inactive (dead)
     Docs: man:sshd(8)
           man:sshd_config(5)

And once I manually restarted the ssh service I get:

"System is booting up. Unprivileged users are not permitted to log in yet. Please come back later. For technical details, see pam_nologin(8)."
Connection closed by 192.168.0.3 port 22

Could anyone give me some directions on how I can start debugging this?

-

Hi, I think I may have posted about this in a few places, but I'm not sure where most people go to discuss helios64 issues. The problem I am having is that the fans do not turn at all. As per the wiki, I've tried setting fancontrol off and checking that the pwm value goes to 255, which it does, but there is still no movement. I am using the fans supplied in the kit. There is nothing obstructing the fans, and sometimes if I disconnect and reconnect them from the board they will twitch a bit, or spin for a short while (until the connector is fully down against the board), but nothing otherwise. I've also tried heating the CPU up to ~85C (e.g. `cat /dev/random | gzip > /dev/null`) with fancontrol enabled, and whilst the pwm value changes as expected, there is no movement in the fans. I've also tried FreeBSD, which seems to have the same problem. Has anyone else seen this issue? It seems most people have problems with the fans being too loud. Is there anything further I can do to get to the bottom of it? Thanks, Mark

-

Hello I'm running a Plex server on my Helios64 and when I turn on subtitles it buffers every other second making the movie unwatchable. Is this a known issue with a fix? Sincerely Magnus Nygren

-

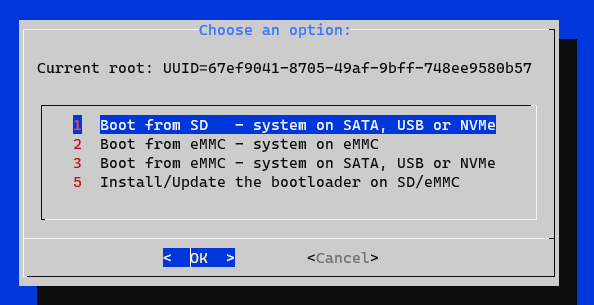

My plan is as follows:
- Insert SD card.
- Make a clone / back-up of boot and system using armbian-config whenever I'm about to do something major. (boot and system are both on eMMC)
- "unclick" SD card and leave it in the back.
- (repeat, until something breaks, and then just insert it and restore using armbian-config if something goes wrong.)

I am confused by the eMMC to SD card path though. Here's some context:

❯ lsblk
NAME           MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
loop0            7:0    0  86.6M  1 loop /snap/core/10578
loop1            7:1    0 178.2M  1 loop /snap/microk8s/1866
loop2            7:2    0  86.6M  1 loop /snap/core/10585
sda              8:0    0 931.5G  0 disk
├─bcache0      253:0    0   9.1T  0 disk /mnt/storage
├─bcache1      253:128  0   9.1T  0 disk
├─bcache2      253:256  0   9.1T  0 disk
└─bcache3      253:384  0   9.1T  0 disk
sdb              8:16   0   9.1T  0 disk
└─bcache0      253:0    0   9.1T  0 disk /mnt/storage
sdc              8:32   0   9.1T  0 disk
└─bcache1      253:128  0   9.1T  0 disk
sdd              8:48   0   9.1T  0 disk
└─bcache3      253:384  0   9.1T  0 disk
sde              8:64   0   9.1T  0 disk
└─bcache2      253:256  0   9.1T  0 disk
mmcblk1        179:0    0  14.6G  0 disk
└─mmcblk1p1    179:1    0  14.4G  0 part /
mmcblk1boot0   179:32   0     4M  1 disk
mmcblk1boot1   179:64   0     4M  1 disk
mmcblk0        179:96   0  59.5G  0 disk
└─mmcblk0p1    179:97   0  59.5G  0 part
zram0          251:0    0   1.9G  0 disk [SWAP]
zram1          251:1    0    50M  0 disk /var/log

❯ ls -l /sys/block/
total 0
lrwxrwxrwx 1 root root 0 Jan 5 11:46 bcache0 -> ../devices/virtual/block/bcache0
lrwxrwxrwx 1 root root 0 Jan 5 11:46 bcache1 -> ../devices/virtual/block/bcache1
lrwxrwxrwx 1 root root 0 Jan 5 11:46 bcache2 -> ../devices/virtual/block/bcache2
lrwxrwxrwx 1 root root 0 Jan 5 11:46 bcache3 -> ../devices/virtual/block/bcache3
lrwxrwxrwx 1 root root 0 Jan 5 11:46 loop0 -> ../devices/virtual/block/loop0
lrwxrwxrwx 1 root root 0 Jan 5 11:46 loop1 -> ../devices/virtual/block/loop1
lrwxrwxrwx 1 root root 0 Jan 5 11:46 loop2 -> ../devices/virtual/block/loop2
lrwxrwxrwx 1 root root 0 Jan 5 11:46 loop3 -> ../devices/virtual/block/loop3
lrwxrwxrwx 1 root root 0 Jan 5 11:46 loop4 -> ../devices/virtual/block/loop4
lrwxrwxrwx 1 root root 0 Jan 5 11:46 loop5 -> ../devices/virtual/block/loop5
lrwxrwxrwx 1 root root 0 Jan 5 11:46 loop6 -> ../devices/virtual/block/loop6
lrwxrwxrwx 1 root root 0 Jan 5 11:46 loop7 -> ../devices/virtual/block/loop7
lrwxrwxrwx 1 root root 0 Jan 5 19:38 mmcblk0 -> ../devices/platform/fe320000.mmc/mmc_host/mmc0/mmc0:aaaa/block/mmcblk0
lrwxrwxrwx 1 root root 0 Jan 5 11:46 mmcblk1 -> ../devices/platform/fe330000.sdhci/mmc_host/mmc1/mmc1:0001/block/mmcblk1
lrwxrwxrwx 1 root root 0 Jan 5 11:46 mmcblk1boot0 -> ../devices/platform/fe330000.sdhci/mmc_host/mmc1/mmc1:0001/block/mmcblk1/mmcblk1boot0
lrwxrwxrwx 1 root root 0 Jan 5 11:46 mmcblk1boot1 -> ../devices/platform/fe330000.sdhci/mmc_host/mmc1/mmc1:0001/block/mmcblk1/mmcblk1boot1
lrwxrwxrwx 1 root root 0 Jan 5 11:46 sda -> ../devices/platform/f8000000.pcie/pci0000:00/0000:00:00.0/0000:01:00.0/ata1/host0/target0:0:0/0:0:0:0/block/sda
lrwxrwxrwx 1 root root 0 Jan 5 11:46 sdb -> ../devices/platform/f8000000.pcie/pci0000:00/0000:00:00.0/0000:01:00.0/ata2/host1/target1:0:0/1:0:0:0/block/sdb
lrwxrwxrwx 1 root root 0 Jan 5 11:46 sdc -> ../devices/platform/f8000000.pcie/pci0000:00/0000:00:00.0/0000:01:00.0/ata3/host2/target2:0:0/2:0:0:0/block/sdc
lrwxrwxrwx 1 root root 0 Jan 5 11:46 sdd -> ../devices/platform/f8000000.pcie/pci0000:00/0000:00:00.0/0000:01:00.0/ata4/host3/target3:0:0/3:0:0:0/block/sdd
lrwxrwxrwx 1 root root 0 Jan 5 11:46 sde -> ../devices/platform/f8000000.pcie/pci0000:00/0000:00:00.0/0000:01:00.0/ata5/host4/target4:0:0/4:0:0:0/block/sde
lrwxrwxrwx 1 root root 0 Jan 5 11:46 zram0 -> ../devices/virtual/block/zram0
lrwxrwxrwx 1 root root 0 Jan 5 11:46 zram1 -> ../devices/virtual/block/zram1
lrwxrwxrwx 1 root root 0 Jan 5 11:46 zram2 -> ../devices/virtual/block/zram2

❯ sudo cat /etc/fstab
# <file system> <mount point> <type> <options> <dump> <pass>
tmpfs /tmp tmpfs defaults,nosuid 0 0
UUID=67ef9041-8705-49af-9bff-748ee9580b57 / ext4 defaults,noatime,nodiratime,commit=600,errors=remount-ro,x-gvfs-hide 0 1
UUID=a27e49e9-329b-4bba-a6d3-ec5b23148e6c /mnt/storage btrfs defaults 0 2

- mmcblk0 is the SD card.
- mmcblk1 is the eMMC, and is my boot.
- What should I be clicking in armbian-config to clone my boot and system to the SD card?
- Is there an alternative recommendation for making a backup?

Any other recommendations? Thank you very much!
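Before cloning anything, it is worth double-checking which `mmcblk` device is which, since the numbering is not fixed. The following is a hedged sketch: it relies on the fact that the Linux MMC driver exposes `mmcblkNboot0` / `mmcblkNboot1` hardware boot partitions for eMMC devices, which SD cards do not have, and uses that as a heuristic. The function name is hypothetical.

```python
import re

def classify_mmc_devices(block_names) -> dict:
    """Guess which mmcblk devices are eMMC, based on boot partitions.

    Heuristic only: a device with a matching 'mmcblkNboot0' entry is
    treated as eMMC; anything else is assumed to be an SD card.
    """
    names = set(block_names)
    result = {}
    for name in sorted(names):
        if re.fullmatch(r"mmcblk\d+", name):
            has_boot = f"{name}boot0" in names
            result[name] = "eMMC" if has_boot else "SD card (no boot partitions)"
    return result

if __name__ == "__main__":
    # Device names as they appear in the /sys/block listing above.
    names = ["mmcblk0", "mmcblk1", "mmcblk1boot0", "mmcblk1boot1",
             "sda", "sdb", "zram0"]
    print(classify_mmc_devices(names))
```

On a live system the same function could be fed `os.listdir("/sys/block")`. For the listing in this post it agrees with the poster's reading: mmcblk1 is the eMMC and mmcblk0 is the SD card.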

-

is there any way to send audio through displayport? i installed kodi, just as a database updater, but it fills the log with errors related to the audio system, and i see that aplay doesn't show any audio device, so another question is, is there any way to emulate an audio device? thanks

-

Hi Guys, I'm selling my helios64. No hard disks; 2 adapters for 2.5-inch drives are included. Shipping within Europe with insurance is possible. Please send me a DM. Pics will come later... Greetings, Daniel

-

I've been watching! Will it be sold in China? When will the next batch be available for pre-order?

-

OK, so my flaming new helios finally arrived (after Christmas, what a pity) and, after spending some time assembling it (it's not the easiest thing in the world, especially if you want to attach the mainboard BEFORE connecting all the cables, as specified in the instructions), I started configuring it. As I don't like the easy way (I'd have bought something else if I did), I went for a daily build with Groovy (I don't need OMV for my purpose and am quite accustomed to the Ubuntu way), and the first thing I did was to make it a rolling release, putting devel in the place of groovy in sources.list, so that now I run hirsute (even if with a groovy userspace, as Armbian has no hirsute userspace yet).

Here comes a question... would it be possible to have a devel channel, replicating the 'latest' channel, so that once hirsute supersedes groovy I don't have to edit armbian.list to reflect the change?

OK, back to the testing... I come from many years with a DNS-340L from D-Link with fun_plug, not exactly the most user-friendly situation and not exactly the top on the hardware side, so I can say I know how to make things work. I migrated some config files, moved the disks and guess what? My shares came back to life in not more than a couple of hours, now with a MariaDB server (instead of an old MySQL 4.something), and I can say I see the difference. I use Kodi with a central DB and (maybe the DB server, maybe the RAM, who knows) everything is faster now... before, every database read was a pain; now it's almost instantaneous.

BTW, I had a USB-C to HDMI cable lying around and it seems to be working (at least, after enabling the correct dtbo, it shows the login on screen), so I tried to build Kodi (I reached 80° C while building, is that normal? That's why I said 'flaming' at the start) and now have to try it as a service... I used to have a headless Kodi installed on an RPi4 to update my library, but I guess I could have an instance running directly on the NAS, headless or not (I'd like to see how Kodi renders on the helios).

Now, another question... until now I have only seen the upper fan spin (and you can see I reached quite high temperatures). What could have gone wrong?

Anyway, the Helios64 is a real beast. It hasn't frozen on me yet and really exceeds my expectations. Thanks, Helios team

-

I have just put my Helios64 into operation. I have 5 HDDs with 10TB each. I combined three HDDs with ZFS (0.86) into a raidz1. The other two disks I mounted individually: I formatted one disk with XFS, and the last disk already had NTFS as its filesystem. On this disk (NTFS) I have some very large *.vhdx files which I only use for copy tests. I use the console with Midnight Commander for this. Copying from the NTFS disk to the XFS disk reached a speed of about 130 MB/s. Copying from the XFS disk to the ZFS raid reached a speed of about 100 - 110 MB/s. I can't judge these values on my own. Can someone please tell me whether these speeds are reasonable? Rosi

-

I have an NFS server. Whenever I run "systemctl stop nfs-kernel-server" I see this in dmesg:

lockd: couldn't shutdown host module for net f00000a1!

Later, when I run "systemctl start nfs-kernel-server", I see this:

NFSD: starting 90-second grace period (net f00000a1)

Of course "systemctl restart" gives me both messages in a row. Any idea what this means? What the hell is this "f00000a1"? Apart from the strange messages, everything seems to work fine...

-

Are there any plans to offer a 2-bay version of the Helios64? Would it be possible to purchase the board only, without the case?

-

Hello everyone, I've been running my Helios64 for about 2 months now. My system is installed on the eMMC and I'm running a few docker containers (all from linuxserver.io):
- Radarr
- Sonarr
- Bazarr
- Transmission
- Jackett
- Jellyfin

I'm having difficulties with Jellyfin and my media library. When launching TV shows or movies, the CPU rockets to ~97-99% use and the media constantly stops to load. I would like to know what I can do to ease the strain on the CPU and finally be able to use Jellyfin. Has anyone been able to use hardware acceleration with docker? What info can I give you to shed light on the matter? Regards

-

Hello Guys, I've tested positive for Corona and am in quarantine in my bedroom while the rest of the family stays in the other rooms. So I can't back up my system with the MicroSD card... Can I install the latest updates? 20.11.4? And the other stuff showing?

armbian-config/buster,buster 20.11.4 all [upgradable from: 20.11.3]
linux-buster-root-current-helios64/buster 20.11.4 arm64 [upgradable from: 20.11.3]
linux-dtb-current-rockchip64/buster 20.11.4 arm64 [upgradable from: 20.11.3]
linux-image-current-rockchip64/buster 20.11.4 arm64 [upgradable from: 20.11.3]
linux-u-boot-helios64-current/buster 20.11.4 arm64 [upgradable from: 20.11.3]
openmediavault/usul,usul,usul,usul,usul,usul 5.5.19-1 all [upgradable from: 5.5.18-1]
python3-lxml/stable,stable 4.3.2-1+deb10u2 arm64 [upgradable from: 4.3.2-1+deb10u1]
salt-common/usul,usul,usul,usul,usul,usul 3002.2+ds-1 all [upgradable from: 3002.1+ds-1]
salt-minion/usul,usul,usul,usul,usul,usul 3002.2+ds-1 all [upgradable from: 3002.1+ds-1]
tzdata/stable-updates,stable-updates 2020e-0+deb10u1 all [upgradable from: 2020d-0+deb10u1]
wsdd/usul,usul,usul,usul,usul,usul 0.6.2-1 all [upgradable from: 0.5-1]

Thanks in advance. Daniel

-

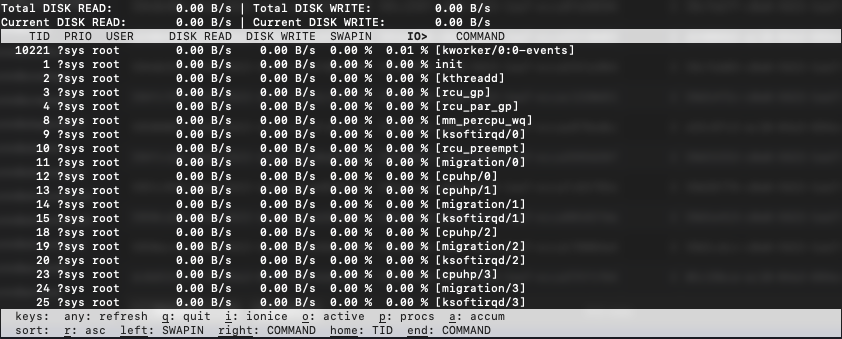

Can you guys share your experience with disk sleep on the Helios64 + Armbian? In my case I haven't seen the disks sleep: they are always spinning (or so they seem) and never on standby. I have a standalone /dev/sda and a RAID5 array with disks /dev/sd[b-e] (created with mdadm), with XFS-formatted logical volumes on top of these.

'iotop' says that nothing is accessing the disks.

'hdparm -B /dev/sd[a-z]' after each reboot is always:

sudo hdparm -B /dev/sd[a-e]
/dev/sda:
 APM_level = 254
/dev/sdb:
 APM_level = off
/dev/sdc:
 APM_level = off
/dev/sdd:
 APM_level = off
/dev/sde:
 APM_level = off

'hdparm -C /dev/sd[a-z]' is always:

$ sudo hdparm -B /dev/sd[a-e]
/dev/sda:
 APM_level = 254
/dev/sdb:
 APM_level = off
/dev/sdc:
 APM_level = off
/dev/sdd:
 APM_level = off
/dev/sde:
 APM_level = off

I added a rule to set the power management parameters, but it seems to be ignored:

$ cat /etc/udev/rules.d/99-hdd-pwm.rules
ACTION=="add|change", KERNEL=="sd[a-z]", ATTRS{queue/rotational}=="1", RUN+="/usr/bin/hdparm -B 63 -S 120 /dev/%k"
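For readers unsure what the `-B 63 -S 120` values in the rule above ask for, here is a small sketch that decodes them. It follows the encoding described in the hdparm man page (for `-S`: 1 to 240 are multiples of 5 seconds, 241 to 251 are multiples of 30 minutes; for `-B`: 1 to 127 permit spin-down, 128 to 254 do not, 255 disables APM). The function names are hypothetical, and whether a given drive honours the setting is a separate question.

```python
from typing import Optional

def standby_timeout_seconds(s_value: int) -> Optional[int]:
    """Decode an `hdparm -S` value into seconds.

    Returns None when there is no fixed timeout: 0 (disabled),
    253 (vendor-defined) and 254 (reserved).
    """
    if not 0 <= s_value <= 255:
        raise ValueError("-S value must be in 0..255")
    if s_value == 0:
        return None
    if s_value <= 240:
        return s_value * 5                    # units of 5 seconds
    if s_value <= 251:
        return (s_value - 240) * 30 * 60      # units of 30 minutes
    if s_value == 252:
        return 21 * 60                        # 21 minutes
    if s_value == 255:
        return 21 * 60 + 15                   # 21 minutes 15 seconds
    return None

def apm_permits_spindown(b_value: int) -> bool:
    """True if an `hdparm -B` level allows the drive to spin down."""
    return 1 <= b_value <= 127

if __name__ == "__main__":
    print(standby_timeout_seconds(120))  # 600 seconds, i.e. 10 minutes
    print(apm_permits_spindown(63))      # True
    print(apm_permits_spindown(254))     # False
```

Read this way, the rule requests a 10-minute standby timeout with an APM level that allows spin-down, while the `APM_level = 254` reported for /dev/sda is a level that does not permit spin-down.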