usual user

Members, 425 posts

Everything posted by usual user

-

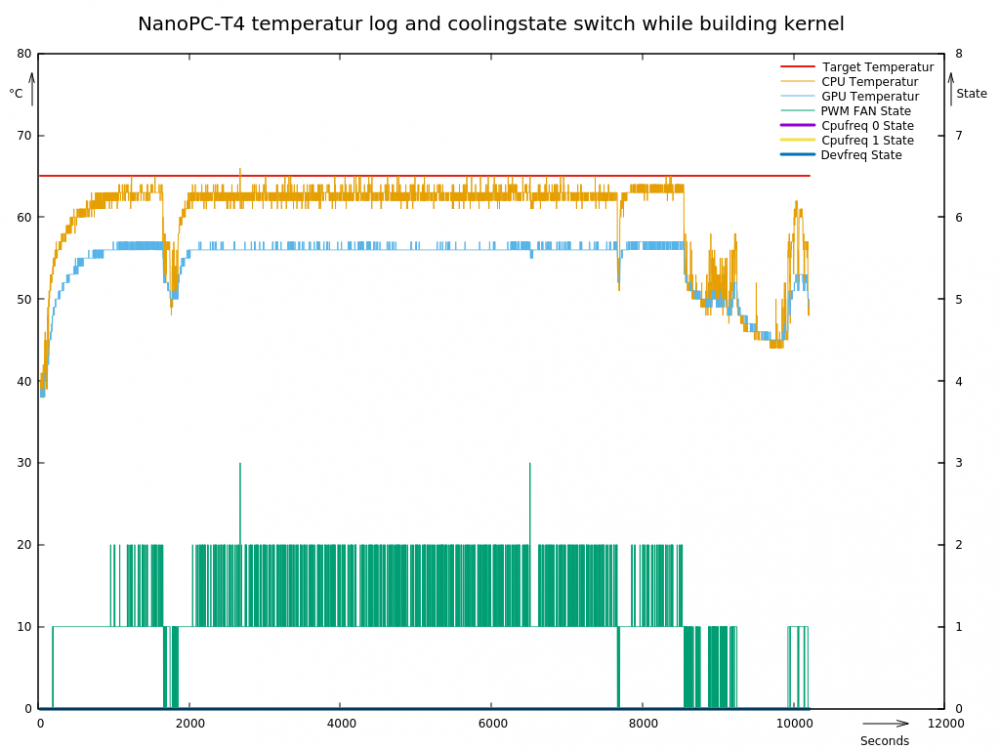

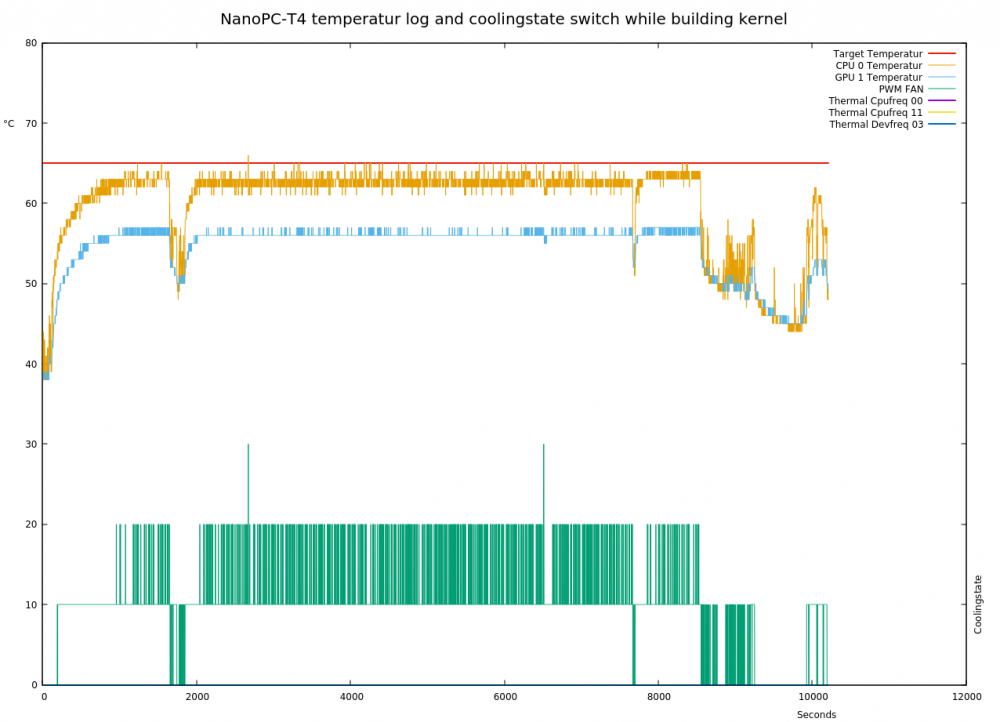

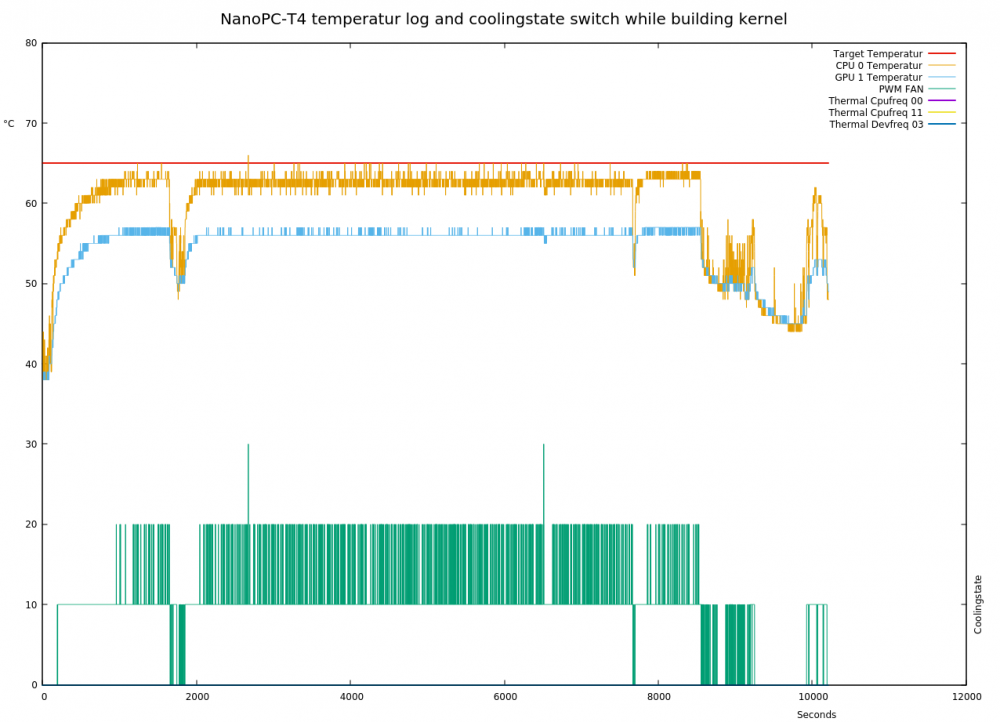

The right way to use the fan is to have a proper thermal setup (rk3399-rockpro64-tz.dts) in the devicetree. With this in place, the kernel thermal system handles the management by itself. This is a visualisation of a tmon log documenting how the thermal system works:

rk3399-rockpro64.dtb is a mainline dtb with rk3399-rockpro64-tz.dtbo applied via:

    fdtoverlay --input rk3399-rockpro64.dtb --output rk3399-rockpro64.dtb rk3399-rockpro64-tz.dtbo

See if this works for you by replacing your dtb, then check with tmon.
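If tmon is not at hand, the state of the kernel thermal system can also be read directly from sysfs. The following is only a minimal sketch: it assumes the standard /sys/class/thermal layout (thermal_zone*/type, thermal_zone*/temp in millidegrees Celsius, cooling_device*/cur_state and max_state). The function name read_thermal_state is made up for illustration and is not part of any tool mentioned above.

```python
from pathlib import Path


def _read_text(path):
    """Return the stripped file content, or None if it cannot be read."""
    try:
        return path.read_text().strip()
    except OSError:
        return None


def _read_int(path):
    """Return the file content as int, or None if missing or not numeric."""
    text = _read_text(path)
    try:
        return int(text) if text is not None else None
    except ValueError:
        return None


def read_thermal_state(root="/sys/class/thermal"):
    """Hypothetical helper: snapshot thermal zones and cooling devices."""
    base = Path(root)
    zones, cooling = {}, {}
    if not base.is_dir():
        return zones, cooling
    for zone in sorted(base.glob("thermal_zone*")):
        millideg = _read_int(zone / "temp")
        zones[zone.name] = {
            "type": _read_text(zone / "type"),
            # sysfs reports millidegrees Celsius
            "temp_c": millideg / 1000 if millideg is not None else None,
        }
    for dev in sorted(base.glob("cooling_device*")):
        cooling[dev.name] = {
            "type": _read_text(dev / "type"),
            "cur_state": _read_int(dev / "cur_state"),
            "max_state": _read_int(dev / "max_state"),
        }
    return zones, cooling


if __name__ == "__main__":
    zones, cooling = read_thermal_state()
    for name, info in zones.items():
        print(name, info)
    for name, info in cooling.items():
        print(name, info)
```

If the overlay took effect, a fan should show up as a cooling device whose cur_state changes under load. Which type names appear depends on the devicetree.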

-

I gave up on Xorg and switched to plasma-desktop. KWin supports a Wayland backend, so I get a lightning-fast graphical desktop with all the bells and whistles. OK, the bugs in the panfrost stack still exist, but this environment makes efficient use of everything that is available. Thanks to the configurability of KWin, I can have the same look and feel as my previous desktop.

-

Inspired by you, I have also done some more tests on my side. For me it is also freezing. Since I am on panfrost, we can rule out lima and panfrost for this. The one thing we still have in common is rockchipdrm. i.MX6 uses imxdrm and does not suffer from this flaw, so IMHO the display subsystem is responsible for this error.

I don't know how mature the lima GL support in Mesa already is, so IMHO Mesa is to blame here. But we are dealing with the 2D acceleration functions of the display subsystem for Xwindow, so these errors are not relevant for our further investigations.

The concept of a dedicated cursor plane is gone in atomic modesetting. The plane is handled like any other plane, but its constraints are still obeyed. The selection of a suitable cursor plane will most probably pick this one, but any other one can be chosen.

-

This is only the proprietary kernel part, which is already implemented in mainline via /dev/dri/renderD128. The missing functionality is how the binary blob uses it, which must be implemented via the as yet non-existent armsoc submodule. glamor already has it, but using it via modesetting is suboptimal because of KMS/drm implementation design decisions there.

-

Exactly, they were done on the same device. The buffer pass-around forces the 3D GPU IP to slow down because the required buffers are not available in time. The performance hit for the display output isn't reflected in the log, but on visual inspection it makes a huge difference. In both cases, the 3D rendering power is sufficient to allow a fluid 60 Hz display.

The DRM scan-out buffer is handed to the Mali proprietary OpenGL ES libraries, and they do the buffer dance in the blob via the Mali proprietary kernel interface. When 3D rendering is done, the buffer is handed back to DRM and the scan-out takes place. This is what the submodule has to implement with the Mali render node (/dev/dri/renderD128).

In the early days it was a safeguard to protect stable installations. It made dma_buf support accessible and usable when drivers provide the support. I don't know if it is still required or has become obsolete in the meantime. It is still dangling around in my configurations.

-

Single Armbian image for RK + AML + AW (aarch64 ARMv8)

usual user replied to balbes150's topic in General Chat

To switch back to the old method, simply rename extlinux.conf, e.g.:

    mv extlinux.conf extlinux.conf-disabled

But I don't know if the old files are still maintained.

-

Reread my log analysis of Xorg.0_driver_as_rockchip.log. You set up two screens there. One is driven via modesetting with the 3D render node (card1) as the display subsystem, which has no scan-out hardware. You can't see the result of any 3D hardware rendering on screen 0 on any monitor. The second screen is driven by fbdev with the display subsystem (card0), which has scan-out hardware. Hence my proposal to use Section "Device" with Driver "fbdev" to see that it delivers the same output results without setting up the unusable render node screen.

This only indicates that you get 2D hardware acceleration via fbdev emulation without 3D support.

fbdev is like armsoc: it is also missing a submodule for 3D support. It used to do everything via the fbdev device and hence is deprecated.

Same as for armsoc, you need a submodule. But armsoc is the better choice since you can make use of full KMS/drm acceleration. Alternatively, you can rewrite modesetting to not delegate everything to OpenGL and use dma_buf for buffer pass-around.

This is not a problem for rk322x SoCs only. It applies to all devices that use render nodes. The less CPU power the device has, the greater the disadvantage the buffer pass-around causes.

-

This is all about where the memory is located in which the operations take place. In the PC world there is only one "GPU" IP. It implements everything: the display engine for scan-out and the GPU for OpenGL. Once the GPU has rendered directly to the scan-out memory, the hardware of the display subsystem outputs it to the monitor. So offloading everything to OpenGL is a good idea. It is a device-independent standard, and doing composition for movie video is also a fast path. There is no need to support display subsystem acceleration in the CPU area.

But we are dealing with SoCs. They have several IPs, and the memory they are dealing with is separated. I.e. they need to pass memory buffers around so that they can work on the data they share. The buffer format has to be identical between the different IPs, otherwise you have to convert. A "dumb buffer" format is always possible, but you lose the acceleration features of special formats. But this requires device-dependent knowledge. E.g. a display subsystem may support the NV12 format for scan-out. Uploading NV12 data for compositing on the 3D GPU and then forwarding it via a dumb buffer to the display subsystem will not improve the performance, but forwarding it via dma_buf to the display subsystem will.

The impact of improper buffer pass-around can be seen in the uploaded glmark2 logs. The 3D performance decreases because the GPU cannot be served fast enough. The armada driver implements buffer pass-around via etnaviv_gpu for i.MX6 in a device-dependent manner and uses dma_buf for zero copy. See buffer-flow.pdf for the ways the buffers have to travel. modesetting and armsoc are missing this support, hence the low performance. Maybe the armada source can serve as a template for what is required.

As both use the same method for buffer pass-around, this is expected.

modesetting is only optimized for PC-like scenarios.

Armsoc only deals with the display subsystem. It does not interact with lima or panfrost; it falls back to swrast, as no armsoc_dri for mainline is available.

    (EE) AIGLX error: dlopen of /usr/lib64/dri/armsoc_dri.so failed (/usr/lib64/dri/armsoc_dri.so: cannot open shared object file: No such file or directory)
    (EE) AIGLX error: unable to load driver armsoc
    (II) IGLX: Loaded and initialized swrast
    (II) GLX: Initialized DRISWRAST GL provider for screen 0

In the Mali proprietary case, that code took care of the proper buffer pass-around via the proprietary kernel interface. But that doesn't belong in the Mesa counterpart, as Mesa only cares about OpenGL and it doesn't matter to it how the IPs interact. It provides only buffer import and export. For mainline in Xorg, the submodule is the proper place. For Weston it is the drm-backend, which it already has.

-

Everything is as expected.

- modesetting doesn't have 2D acceleration, but it has 3D hardware acceleration support via glamor.
- fbdev has some 2D acceleration via KMS framebuffer emulation, but no 3D hardware acceleration support.
- armsoc seems to have no real 2D acceleration support and no 3D acceleration, as I see no suitable submodule.

Wayland can use full KMS and 3D acceleration. E.g. Weston with the drm-backend runs fully accelerated. To get a similar flow with Xwindow, armsoc would have to receive adequate KMS acceleration support and a submodule for 3D acceleration. But there is not much development for ddx drivers anymore. Every new development is Wayland focused. I think it's time to look for a suitable Wayland compositor.

glmark2-es2-wayland.log
glmark2-es2-Xwindow.log

-

Here is my log analysis.

Xorg.0_driver_as_modesetting.log:

The Section "OutputClass" finds the rockchip KMS device and identifies the card node.

    [ 31.046] (**) OutputClass "dwhdmi-rockchip" setting /dev/dri/card0 as PrimaryGPU

There is no

    Section "Device"
        Identifier "KMS-1"
        Driver "modesetting"
    EndSection

so the driver is auto-probed with modesetting and fbdev.

    [ 31.206] (II) LoadModule: "modesetting"
    [ 31.214] (II) LoadModule: "fbdev"

modesetting initializes with the KMS device and glamor is loading.

    [ 31.262] (II) modeset(0): using drv /dev/dri/card0
    [ 31.285] (II) Loading sub module "glamoregl"

glamor is associating with the GPU device.

    [ 36.175] (II) modeset(0): glamor X acceleration enabled on Mali400

fbdev gets unloaded.

    [ 36.195] (II) UnloadModule: "fbdev"

All is set up properly and Xwindow is working as well as possible with modesetting. By design, modesetting does not use acceleration functions from KMS and shifts all 2D actions to the 3D GPU, regardless of whether it is emulated by software or accelerated by hardware. You can test with the attached glxgears script started from a terminal window.

Xorg.0_driver_as_rockchip.log:

No Section "OutputClass", so no KMS device identification. No Section "Device", so the driver is auto-probed with modesetting and fbdev.

    [ 53.702] (II) LoadModule: "modesetting"
    [ 53.704] (II) LoadModule: "fbdev"

modesetting initializes with the GPU (Mali) device because of the fallback.

    [ 53.694] falling back to /sys/devices/platform/20000000.gpu/drm/card1
    [ 53.707] (WW) Falling back to old probe method for modesetting

modesetting outputs to a device that is not display capable, because a render node has no scan-out facility. fbdev is associating with the framebuffer device.

    [ 54.354] (II) FBDEV(1): hardware: rockchipdrmfb (video memory: 3600kB)

I am confused about what is going on here and not sure what this proves.

Now I see. In the end you get fbdev with swrast. With this stanza you should observe the same performance:

    Section "OutputClass"
        Identifier "dwhdmi-rockchip"
        MatchDriver "rockchip"
        Option "PrimaryGPU" "TRUE"
    EndSection

    Section "Device"
        Identifier "KMS-1"
        Driver "fbdev"
    EndSection

"drmdevice" is located in libdrm.

glxgears
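The kind of walk through an Xorg log done above can be partly automated. The following is a minimal sketch, not an official tool: it only follows the LoadModule and UnloadModule lines quoted in the analysis and reports which ddx modules are left at the end. The function name active_modules and the regular expression are assumptions made for illustration.

```python
import re

# Matches lines such as: [ 31.206] (II) LoadModule: "modesetting"
MODULE_RE = re.compile(r'\((?:II|WW|EE)\)\s+(LoadModule|UnloadModule): "([^"]+)"')


def active_modules(log_text):
    """Hypothetical helper: modules still loaded after all unloads."""
    loaded = []
    for line in log_text.splitlines():
        match = MODULE_RE.search(line)
        if not match:
            continue
        action, name = match.groups()
        if action == "LoadModule" and name not in loaded:
            loaded.append(name)
        elif action == "UnloadModule" and name in loaded:
            loaded.remove(name)
    return loaded


if __name__ == "__main__":
    # Lines taken from the Xorg.0_driver_as_modesetting.log excerpt above.
    sample = """\
[ 31.206] (II) LoadModule: "modesetting"
[ 31.214] (II) LoadModule: "fbdev"
[ 36.195] (II) UnloadModule: "fbdev"
"""
    print(active_modules(sample))  # prints ['modesetting']
```

For the second log, both modesetting and fbdev would remain in the list, which matches the two-screen setup described above.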

-

That commit was in 2019 and should be available since 5.2. Can you please attach your /var/log/Xorg.0.log?

-

You will also not see it as built-in; the Rockchip display subsystem is provided by DesignWare IP. The rockchip driver functionality is provided by the dw_hdmi module. It provides a cardX node in /dev/dri/. The 3D Mali GPU IP uses either the lima or the panfrost module, depending on which flavor of Mali your device has. It provides a renderDXXX node in /dev/dri/. Its companion cardX node is of no use and is only there for drm implementation reasons. The ddx Xorg driver (modesetting, armsoc, armada, ...) uses the cardX node for display output.

Since Xwindow doesn't know what hardware you have, you can use this stanza to give it a hint instead of letting it guess which cardX node to use:

    Section "OutputClass"
        Identifier "dwhdmi-rockchip"
        MatchDriver "rockchip"
        Option "PrimaryGPU" "TRUE"
    EndSection

This stanza forces which ddx driver is used instead of autoprobing it:

    Section "Device"
        Identifier "KMS-1"
        Driver "armsoc"
    EndSection

For the ddx driver to support 3D on a different IP, it needs the functionality of a submodule (glamoregl for modesetting, etnaviv_drm for armada, but I'm not aware of one for armsoc) to access it via the renderDXXX node. The IPs exchange buffers via dma_buf with zero copy. The armada driver can configure which submodule to use, so you can combine the i.MX display subsystem with any GPU IP you have a suitable submodule for.

drmdevice-rockchip.log
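To see which cardX and renderDXXX node belongs to which kernel driver, sysfs can be consulted. The following is a minimal sketch: it assumes the usual /sys/class/drm layout, where each node has a device/driver symlink pointing at the bound driver. The function name list_drm_nodes is made up for illustration.

```python
import re
from pathlib import Path

# Only plain node names; connector entries like card0-HDMI-A-1 are skipped.
NODE_RE = re.compile(r"(card|renderD)\d+")


def list_drm_nodes(sys_root="/sys/class/drm"):
    """Hypothetical helper: map DRM node name to bound kernel driver name."""
    base = Path(sys_root)
    nodes = {}
    if not base.is_dir():
        return nodes
    for entry in sorted(base.iterdir()):
        if not NODE_RE.fullmatch(entry.name):
            continue
        driver_link = entry / "device" / "driver"
        # The symlink target's last path component is the driver name.
        nodes[entry.name] = driver_link.resolve().name if driver_link.exists() else None
    return nodes


if __name__ == "__main__":
    for node, driver in list_drm_nodes().items():
        print(f"/dev/dri/{node}: {driver}")
```

On a setup like the one described, the display subsystem should appear on a cardX node only, while the Mali driver should appear on both a renderDXXX node and its companion cardX node.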

-

Out of curiosity I built the armsoc driver for my rk3399. I can confirm your observation of the faster display output. Of course, there is no 3D acceleration, because the driver has no way to delegate 3D requests to the 3D render node. The armada driver (you want the unstable-devel branch) I use for my imx6 has such an ability and, for now, surpasses the rk3399 with modesetting despite the lower specification. IMHO armsoc is a dead end until it gets a similar ability. And using glamor with its unnecessary scan-out indirection via 3D is also a bad idea. We are all in the same boat: there is no Xorg driver available that glues all IPs together in an efficient manner.

xorg-rockchip-modesetting.log
xorg-rockchip-armsoc.log
xorg-imx-armada.log

-

The directory gets populated by the UDC driver. I don't know your SoC, so I can't tell what state the UDC support in the kernel is in. When you define a hardware feature in the DT, the supporting code is not generated automatically in some magical way. Perhaps another forum reader can clarify the status of the UDC support for your SoC.

-

"/sys/class/udc/" is not a symlink, it is a directory which will hold symlinks to available UDCs. If none is there, the UDC is not set up properly. You have to fix this up first so the USB gadget framework can make use of it.
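The check described above can be scripted. The following is a minimal sketch, assuming only that /sys/class/udc is a directory holding one entry per available UDC. The function name available_udcs is made up for illustration.

```python
from pathlib import Path


def available_udcs(root="/sys/class/udc"):
    """Hypothetical helper: names of the UDCs the kernel has registered."""
    base = Path(root)
    if not base.is_dir():
        # No UDC class directory at all, e.g. no gadget support in the kernel.
        return []
    return sorted(entry.name for entry in base.iterdir())


if __name__ == "__main__":
    udcs = available_udcs()
    if udcs:
        print("Available UDCs:", ", ".join(udcs))
    else:
        print("No UDC found; the UDC is not set up properly.")
```

An empty result means the USB gadget framework has nothing to bind to, so the UDC driver or its devicetree node needs attention first.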

-

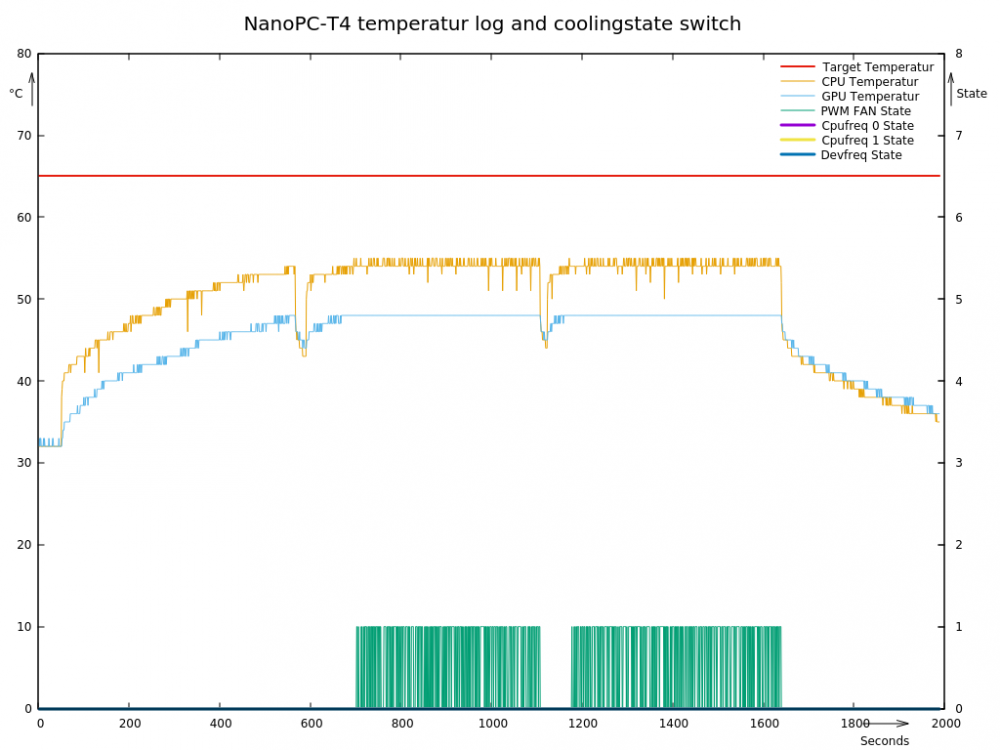

I've run it three times in a row. This is the visualization of the tmon log, which shows how my thermal system is performing:

-

Single Armbian image for RK + AML + AW (aarch64 ARMv8)

usual user replied to balbes150's topic in General Chat

With this you make your U-Boot less flexible and lock out users who want to use, e.g., dtb overlay features at boot time.

-

Single Armbian image for RK + AML + AW (aarch64 ARMv8)

usual user replied to balbes150's topic in General Chat

The files can coexist without any harm. As long as distro-boot is active, i.e. an extlinux.conf can be found in the search path, no other configuration files are considered. If you need a feature that distro-boot does not support, you can always switch back to the previous scheme by simply removing extlinux.conf from the search path.

-

Out of curiosity, I'm interested in how your thermal system works during the test. Can you by chance provide a tmon.log? To collect one, start "tmon -dl", perform your thermal stress test and kill the tmon process after roughly 15 minutes. Then post the resulting /var/tmp/tmon.log.

-

Increase loglevel, e.g. loglevel=9 for everything.

-

My device is only equipped with this tiny cooling set:

Even though all six cores run at 100% load, the fan only runs at full speed on two occasions:

Frequency scaling and DVFS haven't even begun yet. The kernel is handling the thermal system, with no user space involved. Maybe it is time to collect a tmon log and investigate how the thermal system is performing.

-

2 OPiPC+'s, same /boot, different results

usual user replied to laurentppol's topic in Allwinner sunxi