sfx2000

Everything posted by sfx2000

-

In telco, errors carry a lot of magnitude, on a 10LogR scale... Up until the M&A with AT&T, I ran Leap Wireless' AAA/HSS, OTASP, and messaging platforms as the systems engineer who scaled the platform for 20M customers... SBCs are a bit of a hobby now; I did a stint with a robotics startup up until last May (yes, it's complicated).

-

One of the fun things... make a tin-foil hat and be ready for this: American hackers, Russian hackers, China, and Israel... I think he somehow missed Iran and North Korea, who are also very accomplished there, along with the French and UK agencies... https://danrl.com/blog/2016/travel-wifi/

-

Hehe, we're an Alexa-free zone here... (there's Siri, but it's dialed way back in the settings on the devices). I have the same coffee maker, and yes, it's old-school, but it works. The lab space looks a bit scary, IMHO; data centers seem to be a bit safer (fewer problems on any one box, but multiplied by many).

-

Apple did have some "courage" in removing the MagSafe, DisplayPort/Thunderbolt, HDMI, SD card, and USB-A ports on the MacBook Pros... Courage != smart, IMHO, as many with existing laptops fiercely resisted upgrading, because those ports and slots were very useful. The same goes for most of the useful keys on the Touch Bar MacBook Pros; I suppose it takes courage to alienate the many who do Unix/Linux dev in the shell, where Esc and tilde are muscle memory with editors.

Notably, they didn't replace Lightning with USB-C on the iDevices... which is one area where they would benefit, given the regulatory pressure (*) from many regions...

(*) There is huge pressure from the EU and China to adopt a common phone charging/accessory connector to reduce e-waste; Apple is one of the last holdouts there.

-

USB-C powered boards -- important information

sfx2000 replied to chwe's topic in SD card and PSU issues

BTW, I never did try to charge the phone from a true USB-C charger; I was concerned it would let the magic smoke out, and I'm fresh out of smoke...

-

USB-C powered boards -- important information

sfx2000 replied to chwe's topic in SD card and PSU issues

Indeed...

-

The whole Bloomberg article raises some interesting points, however... Dropping a "spy chip" on the board seems like more effort than needed, when the board already has at least two processors with sub-Ring 0 access: the BMC (Aspeed's AST family is very common), and of course the ARC or x86 cores in Intel's chipsets (and Tensilica on AMD, along with the ARM core on the Zen/EPYC chip itself).

BMCs on servers recently had an interesting run of problems; if you have Dell in your data center, you might want to check whether iDRAC updates are available, as folks found a way to hack that... https://www.servethehome.com/idracula-vulnerability-impacts-millions-of-legacy-dell-emc-servers/

It would be easier to hack the firmware in those than to develop the dedicated microcontroller and firmware that Bloomberg asserts... Heck, on x86, because of the mess that it is, just fuzzing the chip can find undocumented instructions that give one god mode: https://github.com/xoreaxeaxeax/rosenbridge

As you mentioned, on the RPi, why not go after VCOS/ThreadX? 10M-plus devices out there make that a sweet target for those who have the resources.

On the low end (Allwinner, Rockchip, and others), keep in mind that they generally pull in IP blocks and assemble them. It's not just the ARM cores and the GPUs from ARM/Imagination, etc., but IP blocks from Cadence and others for UART, USB, SD, I2C, SPI, power management, etc... It is quite literally like Lego: snap the parts together in VHDL, ship it to a fab, pay for whatever process one wants (28nm, 40nm, 7nm FinFET), and do some QA afterwards...

-

A couple of interesting reads about USB chargers: Ken Shirriff has done quite a bit of in-depth research on various adapters...

http://www.righto.com/2012/10/a-dozen-usb-chargers-in-lab-apple-is.html

http://www.righto.com/2014/05/a-look-inside-ipad-chargers-pricey.html

http://www.righto.com/2013/06/the-mili-universal-carwall-usb-charger.html

Cable quality is obviously very important, as is the adapter itself, especially with devices where the power input is also a USB OTG port; a bad power supply there can be even more of a bag of hurt...
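The cable-quality point can be put in numbers with Ohm's law. This is a rough sketch, not something from Ken Shirriff's articles: it assumes the standard AWG copper resistance table (ohms per metre at about 20 C), counts both the VBUS and GND conductors, and ignores connector contact resistance, which only makes things worse.

```python
# Rough sketch: voltage left at the board after resistive loss in a USB cable.
# Assumptions: standard AWG copper resistance values (ohms per metre, about 20 C),
# both VBUS and GND conductors carry the load current, connectors are ignored.
AWG_OHMS_PER_METRE = {
    20: 0.03331,
    22: 0.05296,
    24: 0.08422,
    26: 0.1339,
    28: 0.2129,
}


def voltage_at_board(supply_v: float, current_a: float, length_m: float, awg: int) -> float:
    """Supply voltage minus the I*R drop over the cable's round trip (VBUS out, GND back)."""
    loop_resistance_ohms = 2 * length_m * AWG_OHMS_PER_METRE[awg]
    return supply_v - current_a * loop_resistance_ohms


if __name__ == "__main__":
    for gauge in (28, 24, 20):
        v = voltage_at_board(supply_v=5.0, current_a=2.0, length_m=1.0, awg=gauge)
        print(f"{gauge} AWG, 1 m, 2 A: {v:.2f} V at the board")
```

With 2 A through a 1 m cable, this gives roughly 4.15 V at the board with 28 AWG power conductors versus about 4.87 V with 20 AWG, before any connector losses; that gap is enough to matter for a board that expects close to 5 V.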

-

USB-C powered boards -- important information

sfx2000 replied to chwe's topic in SD card and PSU issues

I've had mixed results overall with USB-C. When it works, it's really good... Overall, I'm in favor of it, but with so many different implementations, it's a challenge for users. Cable quality is one challenge, though that shouldn't be a problem if one buys from a trusted vendor.

I've also got an Intel NUC7i5BNH, which, like the MacBook Pros, is full-blown "let's put everything we can on that port": DisplayPort, Thunderbolt 3, USB 3.1. About the only thing that port doesn't do is power input... It's Kaby Lake with Sunrise Point (i5-7260U), which is perhaps about as official a reference platform as one can imagine, and it's still a bit of a work in progress on both Windows and Linux. Ubuntu 18.04 does have some Thunderbolt support, which is encouraging now that Intel has changed the licensing a bit for other OEMs. Hopefully Thunderbolt doesn't suffer the sad fate of FireWire (which eventually died because of onerous licensing terms from Apple and others, Sony for example).

On the high-end SBCs, now that we're seeing SoCs and boards with PCIe support, USB-C and even Thunderbolt make things interesting in an odd sort of way. It could actually simplify the layouts: pop on a couple of USB-C ports, remove some other ports, and save some space... and add new capabilities if they take the Thunderbolt path: a SATA dock, an external GPU, WiFi, even 10GbE...

You do make a good point about LiPo, but one would assume that a board that supports USB-C probably has a PMIC, and there, depending on the capabilities of the chip, battery charging support shouldn't be an issue.

-

board support - general discussion / project aims

sfx2000 replied to chwe's topic in Advanced users - Development

Just to share a thought or two... Armbian is actually in a special place, and through community consensus and working with the OEM/chipset community, it can do a lot of good work... Not that much different from what the OpenWrt and Free Electrons/Bootlin folks have done...

I've read through the threads, and what really stuck out was @tkaiser and the GPIO mess... and yes, it's a mess (along with U-Boot and DTS, and then solving the kernel and related stuff). Anyway, been there, done that. Armbian has great experience at bringing up boards and making tweaks to improve performance/compatibility as needed...

So reach out to the community: the chipset guys, the board folks. Work with the community, and there it's regular gatherings, building the brand, and setting up BOF meetings to get some consensus across the community. I'm a former member of the IETF, and one of the big deals there is letting go of ownership while keeping a gentle hand on development; that earns a lot of respect beyond the Armbian team... Hard to explain, but if the team has already solved hard problems, then those solutions are generally agreed upon, so the collective can move forward.

Getting back to Igor's statement... Don't tell; rather, be open and ask... Find the community gatherings, and work the threads with OEM and chipset contacts...

-

That's a bit of a concern these days... We have an IP block/processor (the AR100) that has better-than-root access to memory and can read/write at will. I repeat: having access to memory means having access to the OS and IO at a layer deeper than root... @chwe - yes, interesting indeed... Note the Bloomberg piece about BMCs on servers... https://www.bloomberg.com/news/features/2018-10-04/the-big-hack-how-china-used-a-tiny-chip-to-infiltrate-america-s-top-companies Allwinner probably needs to be clear on what that AR100 does: not just a blob, but source...

-

USB-C powered boards -- important information

sfx2000 replied to chwe's topic in SD card and PSU issues

I'm assuming you mean "Dumb Mode"... USB-C PD mode is complicated, no doubt... Recently I received an ODM smartphone from Shenzhen: USB-C on the device and USB-A on the power adapter. I closed my eyes and trusted that the adapter/cable/device would work together, just for charging the battery... and it does. I'm probably not going to plug it into my MacBook Pro, just because, like Facebook and relationships, it's complicated. Once it's all sorted, USB-C can be very cool; imagine just a couple of ports for charge/sync/video, even OTG for gadgets...

-

I can read, review, and understand the script, and I did... and gave a bit of feedback. But for the benefit of the forum and community (no disrespect to others), many do copy/paste on trust without knowing... Tell it to me again like I'm five years old... why go down this path? What is the process, why is it done, and how can it be done better?

-

With WiFi in general, it's really hard to get consistent benchmark results unless the environment is very controlled... Things that affect WiFi, in order:

1) The neighbors and other devices on your own SSID. WiFi is a shared, contention-based medium; if you're in a crowded neighborhood, that's a factor in overall performance and consistency no matter what else you do, as it's traffic dependent. If you must test, do it between 12 AM and 4 AM, the general network quiet hours, unless you have a neighbor like me who can't sleep and runs Netflix over WiFi...

2) Client RF front-end and antenna. Some boards have SoPs that contain the chipset, but the antenna still matters a bit (actually a lot).

3) Client chipset and driver. Realtek is common and has its pluses and minuses; Ralink/MediaTek is generally good; Broadcom's fmac (brcmfmac) is safe but sometimes limited; and QCA's ath9k USB is the boss, but old-school and hard to find these days. You can sometimes get them on the Shenzhen marketplace, and they're full-featured without patches and fully supported by kernel drivers, including monitor mode, which is useful for some... The XR chipset found on some SBCs is a bit of an unknown for me at the moment. BTW, for IoT, most of the ESP8266 and ESP32 boards are quite predictable; not excellent, but consistent...

3a) Drivers are important. Some are better even for the same chip, and the loose floorboards that linux-wireless provides sometimes work and sometimes don't, as they're not always in sync with the kernel.

All being equal, let's say we have Board A vs. Board B...

4) Board layout. Beyond the SoP mentioned above, there's also self-generated noise from the board itself; some boards are going to be better than others there, and the same goes for USB/SD adapters as well...

5) The AP. It's the least concern, but the AP does come into play, as performance over distance is, at a high level, generally a 10LogR function, with R being range. There are advantages to newer APs, but generally the client chip/driver/config is dominant.

Get the right chips/RF/driver/AP and it's a win, obviously. A two-stream 802.11ac USB adapter can do wonders if the driver is supported; likewise, a single-stream WiFi NIC on a noisy board is going to be a challenge no matter what the environment is. Benchmarking on a single run is going to be a problem (see item 1 above); everything else becomes somewhat relative...

Best device for checking WiFi: an old Android phone or iPod touch. I've got an older Samsung Galaxy S4 (ex-Cricket/Leap Wireless, pre-AT&T) with a dead SIM. The nice part is that the S4 supports 11ac in 5GHz, so it's a good sounder for WiFi, and Google's Play Store has good apps for scanning there. The current iPod touch is also good; it's not a great performer, but good enough to test single-board computers or set-top boxes for RF characteristics...
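To put a number on the "10LogR" rule of thumb in item 5: one common way to model it is the log-distance path-loss model, where loss grows by 10·n·log10(R) with a path-loss exponent n (n = 2 in free space, typically higher indoors). The sketch below anchors the curve at the free-space (Friis) loss at 1 m; the exponent values and the 5.18 GHz channel are illustrative assumptions, not measurements.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss: 20*log10(4*pi*d*f/c), in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT_M_S)


def log_distance_path_loss_db(distance_m: float, freq_hz: float, exponent: float,
                              ref_m: float = 1.0) -> float:
    """Free-space loss out to ref_m, then 10*n*log10(d/ref_m) beyond it."""
    return (free_space_path_loss_db(ref_m, freq_hz)
            + 10 * exponent * math.log10(distance_m / ref_m))


if __name__ == "__main__":
    channel_hz = 5.18e9  # assumed 5 GHz channel, for illustration only
    for n in (2.0, 3.0):  # 2 = free space; 3 = an assumed cluttered indoor value
        losses = [log_distance_path_loss_db(d, channel_hz, n) for d in (5, 10, 20)]
        print(f"n={n}: " + ", ".join(f"{loss:.1f} dB" for loss in losses))
```

Each doubling of range costs 10·n·log10(2): about 6 dB at n = 2 and about 9 dB at n = 3.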

-

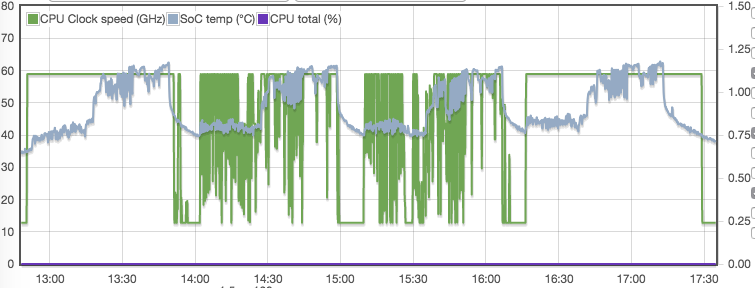

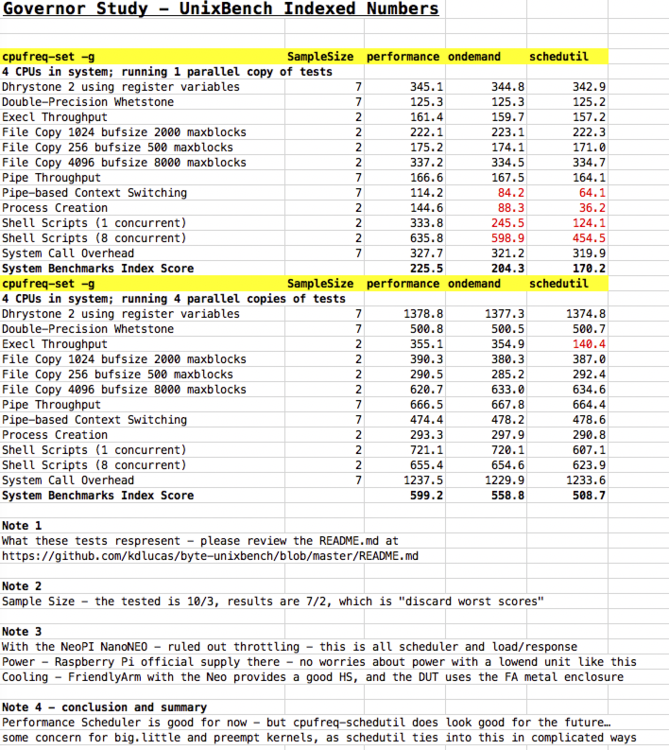

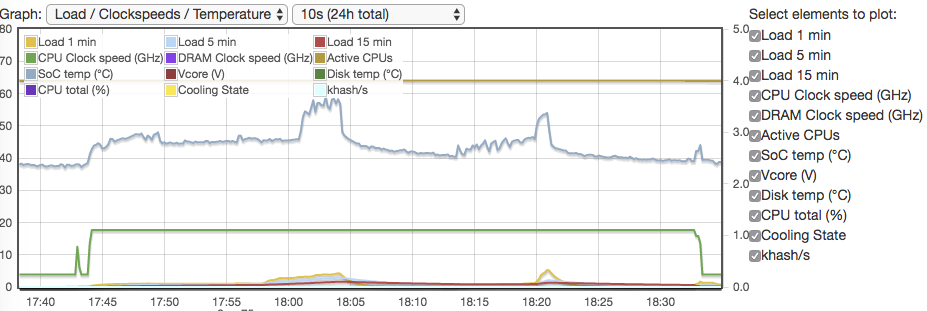

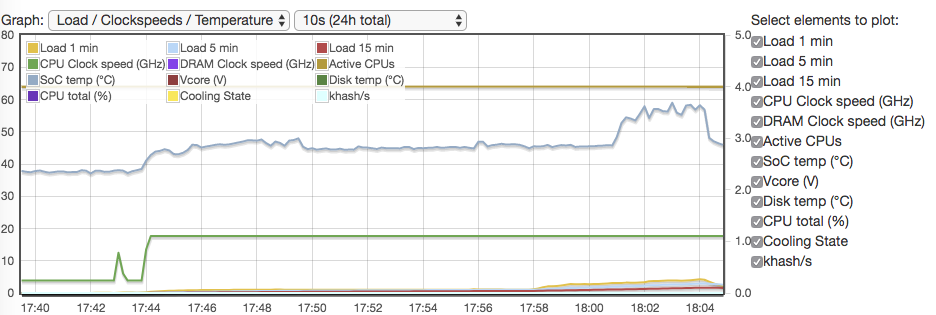

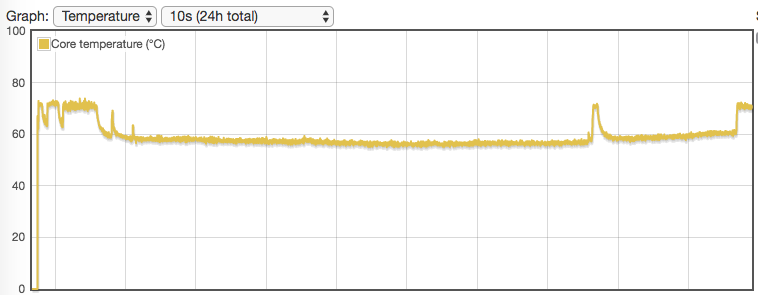

So you're suggesting your benchmark script is meaningless? I don't think so; it's very useful... and it can be more useful for A/B testing: rather than setting params, report them, and let the tester review and test as needed.

The rk3288-tinker was on Armbian stock clocks, no tweaks there... It's just hopelessly impaired in a multi-threaded environment: it gets heat-soaked, with no chance to recover on stock Armbian, and the numbers show that. The whole rk3288-tinker problem is really driving me to figure out how to sort it, using what's on hand and with no code impact to Armbian, so I'm going down the road of how to make the best use of the resources available... The challenge at the moment with the Tinker is that Armbian sets the lower clock limit, and I'm still sorting out whether the DT updates on mainline have been pulled in, as 4.14 has a lot of changes on mainline.

A hint on today's testing: I chose the Allwinner H3 device from FriendlyARM, the NEO, as it's a well-tested device (as you know), and just played around with CPU frequency governors... If you want the worksheet with the backup for the testing, reach out to me directly...

Yes, I know UnixBench is kind of relative and useless across different machines/platforms, but in relative testing, with the same board/kernel/compiler, it's very useful. As you can see from the time to complete the actual test, each sequence is almost an hour under test, so there's a fair chance that thermals would be the biggest problem...

The NEO H3 doesn't throttle, but it's a fast race to minimum across performance/ondemand/schedutil... Single thread shows some regression, but four threads bear out interesting stuff when doing some fun with UnixBench... As you can see on the timeline, there was more than enough time for the H3 to get throttled, but it never does... I ran performance as a parity/sanity check, and the sample sizes across the benchmarks do suggest high confidence in the results.

For the last run, I pushed the H3 into powersave to bring the clocks down; otherwise it would have been 4 cores running at max. With 4 cores burning on performance, I suppose temps would eventually have come down. I let it run a bit, then did a stint in powersave mode to reach a good entry point of 40C for ondemand and schedutil; they would always jump down to min clock there. The performance governor wants to be fast in single thread, but these days... and with big.LITTLE, woe to the kthread on the wrong core...

NOTE: I've highlighted the performance regressions across things... and they deserve further study. With cpufreq schedutil, things might improve...

Looking forward: getting to a point where benchmarks can be useful, automated, and reproducible... ARM's LISA looks really good for the CI workflow, and it opens up the black boxes that most benchmarks are... https://github.com/ARM-software/lisa

All told, upcoming items like affordable big.LITTLE boards, PREEMPT_RT, and cpufreq coming into play are going to complicate things... It's the upcoming perfect storm for the team, so get ahead of it...

I'm here to help, not trying to get into conflict. I've got more than a fair amount of time doing embedded stuff on ARM (QCOM, Marvell, Freescale, and TI; Android and embedded), and I'm not crazy like the stuff over on XDA, where things go strange. I'd rather work with you than against you; against you, I'll lose, that's true, but the community might also lose out on the investment of my time and effort to contribute.
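On the point about sample sizes and confidence: a quick way to check whether an A/B difference between governors is bigger than run-to-run noise is to repeat each configuration a few times and compare 95% confidence intervals. This sketch uses Python's statistics module and a small hard-coded Student's t table; it is a generic illustration, not something sbc-bench or UnixBench does, and the scores in it are hypothetical placeholders.

```python
import statistics

# Two-sided 95% Student's t critical values, keyed by degrees of freedom (runs - 1).
T_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
        6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def summarize_runs(scores):
    """Return (mean, sample stdev, (low, high) 95% CI) for 2 to 11 repeated runs."""
    n = len(scores)
    if n - 1 not in T_95:
        raise ValueError("this sketch only tabulates t for 2 to 11 runs")
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    half_width = T_95[n - 1] * sd / n ** 0.5
    return mean, sd, (mean - half_width, mean + half_width)


def intervals_overlap(a, b):
    """True if two (low, high) intervals overlap."""
    return a[0] <= b[1] and b[0] <= a[1]


if __name__ == "__main__":
    # Hypothetical placeholder scores, NOT measured results.
    governor_a = [612.4, 608.9, 615.1, 610.7]
    governor_b = [598.2, 603.5, 600.1, 596.8]
    _, _, ci_a = summarize_runs(governor_a)
    _, _, ci_b = summarize_runs(governor_b)
    print("CI A:", ci_a, "CI B:", ci_b, "overlap:", intervals_overlap(ci_a, ci_b))
```

Non-overlapping intervals are a conservative sign of a real difference; overlapping ones don't prove there is none. None of this helps if the thermal starting point differs between runs, which is why starting each run from the same temperature matters.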

-

Same configs as the Tinker, testing the NanoPi NEO with stock Armbian clocks on mainline. My NEO isn't likely to throttle, as it has good power and very good thermals with the heatsink and case it's in... Stock clocks have it a bit underclocked as it is, for power reasons (see the other Armbian docs on this board for IoT, etc.)...

gov/sched/swappiness:

perf - noop - 100: http://ix.io/1oac

schedutil - CFQ - 10: http://ix.io/1oai (this one throttled just a bit... but that's one sample)
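The gov/sched/swappiness triple used to label these runs can be read straight from the kernel instead of being set, which fits the "report, don't change" idea. A minimal sketch, assuming the usual sysfs/procfs locations and an SD card at mmcblk0 (the device name is an assumption; adjust it for eMMC or USB boot):

```python
from pathlib import Path


def active_io_scheduler(scheduler_text: str) -> str:
    """The kernel lists schedulers as e.g. 'noop deadline [cfq]'; the bracketed one is active."""
    for token in scheduler_text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    return scheduler_text.strip()  # no brackets: fall back to the raw text


def format_report(governor_text: str, scheduler_text: str, swappiness_text: str) -> str:
    """Build a 'governor - scheduler - swappiness' label like the ones used above."""
    return (f"{governor_text.strip()} - {active_io_scheduler(scheduler_text)}"
            f" - {int(swappiness_text)}")


def report_tuning(block_device: str = "mmcblk0", cpu: int = 0) -> str:
    """Read the live settings from the standard Linux sysfs/procfs files."""
    governor = Path(f"/sys/devices/system/cpu/cpu{cpu}/cpufreq/scaling_governor").read_text()
    scheduler = Path(f"/sys/block/{block_device}/queue/scheduler").read_text()
    swappiness = Path("/proc/sys/vm/swappiness").read_text()
    return format_report(governor, scheduler, swappiness)
```

On a board, `print(report_tuning())` prints a label in the same shape as above (for example `schedutil - cfq - 10`), ready to paste next to an ix.io link.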

-

@tkaiser - I had a chance to do a deeper review of the following... https://github.com/armbian/build/blob/master/packages/bsp/common/usr/lib/armbian/armbian-zram-config

A couple of questions... I realize that this is your script, and you've put a fair amount of effort into it, perhaps across multiple platforms and kernels.

1) Why so complicated?

2) Why mount the zram partition as ext4-journaled? Is this for instrumentation perhaps?

`mkfs.ext4 -O ^has_journal -s 1024 -L log2ram /dev/zram0`

This adds a bit of overhead and isn't really needed... swap is swap...

It's good in any event, and my script might not be as sexy or complicated as yours. Actually, with your script, the swappiness testing variables are buried in overhead there, if I read your script correctly. I realize you might get excited a bit about a challenge, but take another look; less code is better sometimes. I'm not here to say you're wrong; I'm here to suggest there's perhaps another path. I know my script isn't perfect, but like yours, it works...
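For comparison, a swap-only zram setup really can be short. This is a minimal sketch, not Armbian's script and not the script mentioned above: it assumes the zram module is already loaded (so /dev/zram0 exists) and that lz4 is available as a compressor, and it uses half of RAM as a hypothetical default size. `zram_swap_plan` only builds the plan; `apply_plan` performs it and must run as root. The quoted `mkfs.ext4` line creates an ext4 filesystem labelled `log2ram`, a separate use of zram that this sketch does not cover.

```python
import subprocess


def mem_total_kib(meminfo_text: str) -> int:
    """Parse MemTotal (reported in kB) from /proc/meminfo contents."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1])
    raise ValueError("MemTotal not found")


def zram_swap_plan(meminfo_text: str, fraction: float = 0.5, algorithm: str = "lz4",
                   device: str = "zram0", priority: int = 5):
    """Return (sysfs writes, commands) for one zram swap device without touching the system."""
    disksize_bytes = int(mem_total_kib(meminfo_text) * 1024 * fraction)
    sysfs_writes = [
        # The compressor must be chosen before disksize initialises the device.
        (f"/sys/block/{device}/comp_algorithm", algorithm),
        (f"/sys/block/{device}/disksize", str(disksize_bytes)),
    ]
    commands = [
        ["mkswap", f"/dev/{device}"],
        ["swapon", "-p", str(priority), f"/dev/{device}"],
    ]
    return sysfs_writes, commands


def apply_plan(sysfs_writes, commands) -> None:
    """Perform the plan (root only): sysfs writes first, then mkswap and swapon."""
    for path, value in sysfs_writes:
        with open(path, "w") as f:
            f.write(value)
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

On a board this would be `apply_plan(*zram_swap_plan(open("/proc/meminfo").read()))`. Swappiness stays a separate knob in /proc/sys/vm/swappiness, which keeps it easy to vary for testing.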

-

Opened up an issue on the git: don't change the governor; report what is in use, so folks can A/B changes...

Anyway, the RK3288-Tinker, which can be (and often is) thermally challenged: I had to hack the sbc-bench script to not set the CPU governor to performance...

Throttling statistics (time spent on each cpufreq OPP), by gov/sched/swappiness:

| cpufreq OPP | perf - noop - 100 (http://ix.io/1o7Y) | schedutil - CFQ - 10 (http://ix.io/1o9Y) |
|---|---|---|
| 1800 MHz | 86.82 sec | 350.94 sec |
| 1704 MHz | 83.66 sec | 121.37 sec |
| 1608 MHz | 114.67 sec | 73.64 sec |
| 1512 MHz | 155.11 sec | 92.46 sec |
| 1416 MHz | 205.71 sec | 104.79 sec |
| 1200 MHz | 169.03 sec | 92.22 sec |
| 1008 MHz | 109.07 sec | 66.33 sec |
| 816 MHz | 144.17 sec | 149.59 sec |
| 600 MHz | 106.14 sec | 132.10 sec |

Interesting numbers... feel free to walk through the rest... Without getting into U-Boot and the DT to reset the lower limit, it would be nice to see if the RK can sprint to get fast, fall back a bit more to recover, and sprint again... The RK3288 can do the limbo as low as 126MHz; right now with Armbian we're capped at the bottom at 600MHz, so the dynamic range between idle and maxed out is 10C, as the RK3288 with the provided heatsink idles at 60C in the current build. That, and going with PREEMPT vs. a normal kernel there... the PREEMPT stuff does weird things with IO and drivers, so it would be nice to have a regular kernel within Armbian to bounce against.
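The throttling statistics above are time spent per OPP, which the kernel exposes in /sys/devices/system/cpu/cpu0/cpufreq/stats/time_in_state as one "<frequency in kHz> <time in 10 ms units>" line per OPP, counted since boot. A small sketch for turning that into MHz/seconds and for diffing snapshots taken before and after a benchmark; it assumes the kernel has cpufreq stats enabled (CONFIG_CPU_FREQ_STAT).

```python
def parse_time_in_state(text: str) -> dict:
    """Parse cpufreq stats/time_in_state ('<kHz> <10 ms ticks>' per line) into {MHz: seconds}."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 2:
            continue  # skip blank or unexpected lines
        khz, ticks = int(fields[0]), int(fields[1])
        stats[khz // 1000] = ticks / 100  # one tick is 10 ms
    return stats


def time_between(before: dict, after: dict) -> dict:
    """Seconds spent at each OPP between two snapshots, e.g. before and after a benchmark."""
    return {mhz: after[mhz] - before.get(mhz, 0.0) for mhz in after}


def time_share(stats: dict) -> dict:
    """Fraction of the total time spent at each OPP."""
    total = sum(stats.values())
    return {mhz: secs / total for mhz, secs in stats.items()} if total else {}


if __name__ == "__main__":
    # Two rows of the perf - noop - 100 run above, re-expressed in time_in_state units.
    sample = "1800000 8682\n600000 10614\n"
    for mhz, secs in sorted(parse_time_in_state(sample).items(), reverse=True):
        print(f"{mhz} MHz: {secs:.2f} sec")
```

Because time_in_state accumulates from boot, diffing two snapshots with `time_between` is what isolates a single benchmark run.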

-

Hehe... just wait for USB-C connectors on SBCs... that's going to be a mess with all the interesting options (perhaps the reason why the folks over in Cupertino decided, with their recent HW releases, to keep their proprietary connector).
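Even the simplest of those options, plain current advertisement without PD (the "Dumb Mode" case in the USB-C thread), is a little protocol of its own: the source pulls the CC line up through Rp, the sink pulls it down through Rd = 5.1 kΩ, and the sink reads the divider voltage. A minimal sketch; the Rp values are the USB Type-C specification's values for a 5 V pull-up, and the 0.2 / 0.66 / 1.23 V decision points are the commonly cited sink-side thresholds, so treat the exact numbers as something to check against the spec.

```python
# Sketch of non-PD USB Type-C current advertisement as seen by a sink.
# Assumed values: Rd = 5.1 kOhm on the sink; Rp pulled up to 5 V on the source.
RD_OHMS = 5_100

RP_TO_5V_OHMS = {
    "Default USB power": 56_000,
    "1.5 A": 22_000,
    "3.0 A": 10_000,
}


def cc_voltage(rp_ohms: float, pullup_v: float = 5.0, rd_ohms: float = RD_OHMS) -> float:
    """Voltage the sink measures on CC: a plain Rp/Rd divider from the pull-up rail."""
    return pullup_v * rd_ohms / (rp_ohms + rd_ohms)


def advertised_current(v_cc: float) -> str:
    """Classify a CC voltage with the commonly cited sink thresholds (0.2 / 0.66 / 1.23 V)."""
    if v_cc < 0.2:
        return "no source attached"
    if v_cc < 0.66:
        return "Default USB power"
    if v_cc < 1.23:
        return "1.5 A"
    return "3.0 A"


if __name__ == "__main__":
    for label, rp in RP_TO_5V_OHMS.items():
        v = cc_voltage(rp)
        print(f"Rp {rp} ohm ({label}): CC = {v:.2f} V -> {advertised_current(v)}")
```

This is also why a USB-A to USB-C cable is supposed to carry the 56 kΩ Rp: the device then sees only "Default USB power" and should not try to pull 3 A through a legacy port.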

-

@tkaiser - a little quirk with sbc-bench on the git... Once the tests are done, it looks like the cores are left on their own, cooking... Recent test, and after... dropping the clocks came only after a shutdown, pulling power, and a startup... The example here is a NanoPi NEO on the current Armbian Bionic image... http://ix.io/1nY2 Oddly enough, a couple of weeks back, the Tinker got into a bad place there where it did a hard shutdown...
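One way to avoid leaving the cores cooking after a run is for the benchmark wrapper to save each CPU's governor and restore it in a finally block, so it comes back even if a test aborts. This is a hypothetical Python sketch of the idea, not how sbc-bench (a shell script) is written; the sysfs root is a parameter only so it can be pointed at a scratch directory, and writing the real files needs root.

```python
from contextlib import contextmanager
from pathlib import Path


def governor_paths(root="/sys/devices/system/cpu"):
    """All cpuN/cpufreq/scaling_governor files ('cpu[0-9]*' skips cpufreq/ and cpuidle/)."""
    return sorted(Path(root).glob("cpu[0-9]*/cpufreq/scaling_governor"))


@contextmanager
def temporary_governor(governor, root="/sys/devices/system/cpu"):
    """Switch every CPU to `governor`, then restore each original one on exit, even on error."""
    saved = {path: path.read_text().strip() for path in governor_paths(root)}
    try:
        for path in saved:
            path.write_text(governor)
        yield saved
    finally:
        for path, original in saved.items():
            path.write_text(original)
```

Usage would look like `with temporary_governor("performance"): run_benchmark()`, where `run_benchmark` is a hypothetical stand-in for whatever the tests are.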

-

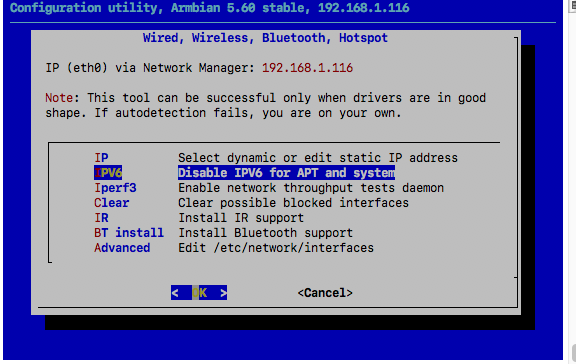

Allwinner H3 NanoPiNEO - hi temps on mainline vs. vendor build

sfx2000 replied to sfx2000's topic in Allwinner sunxi

Ok... so some work here... A minor change was moving the default CPU governor from performance to ondemand; it pulls temps down a bit, but perf is still within range... http://ix.io/1nY2 Fun stuff... bits in the crucible...

-

Allwinner H3 NanoPiNEO - hi temps on mainline vs. vendor build

sfx2000 replied to sfx2000's topic in Allwinner sunxi

Ok, a bit of work, as my stash of recent SD cards turned out to be a rather bad batch (I order them in bulk, they're branded and from a trusted reseller, and I still get a bad batch from time to time...)

-

NanoPi NEO on current Bionic as of 9/30/18: http://ix.io/1nXL This is the v1.31 board, so some of the temps might be lower than on the 1.0/1.1 boards; it does nicely for an H3.

The RK3288-Tinker is still a hot mess, pardon the term... http://ix.io/1nXQ But this is expected: passive cooling with the Asus-provided heatsink, and we're powering it over micro-USB with an official 2.5A Raspberry Pi power supply... It might be interesting to see what happens if the Tinker is allowed to clock down to 126MHz, as I'm thinking that right now it's getting heat-soaked, so it spends a huge amount of time at 600MHz. The current setup suggests that the upper temperature limit is 70C, so when it gets there, it pulls back, and it doesn't have far to pull back: my Tinker idles at 60C with the current Armbian Bionic build. That last little stint at 70C is the Tinker running sbc-bench from the current git...
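How far the Tinker has to pull back is easy to watch from the thermal sysfs interface, which reports temperatures in millidegrees Celsius. A minimal sketch; it assumes thermal_zone0 is the SoC sensor and trip_point_0 is the throttling (passive) trip, both of which vary by board and device tree, so check the zone's `type` and `trip_point_N_type` files first.

```python
import time
from pathlib import Path


def millicelsius_to_c(text: str) -> float:
    """Thermal sysfs values are integers in millidegrees Celsius, e.g. '60000' -> 60.0."""
    return int(text) / 1000


def headroom_c(temp_text: str, trip_text: str) -> float:
    """Degrees left between the current temperature and a trip point."""
    return millicelsius_to_c(trip_text) - millicelsius_to_c(temp_text)


def log_headroom(zone: str = "/sys/class/thermal/thermal_zone0", trip: int = 0,
                 interval_s: float = 5.0, samples: int = 12) -> None:
    """Print temperature and headroom to the chosen trip point every interval_s seconds."""
    zone_path = Path(zone)
    for _ in range(samples):
        temp_text = (zone_path / "temp").read_text()
        trip_text = (zone_path / f"trip_point_{trip}_temp").read_text()
        print(f"{millicelsius_to_c(temp_text):.1f} C, "
              f"{headroom_c(temp_text, trip_text):.1f} C to trip point {trip}")
        time.sleep(interval_s)
```

With the figures in the post (idle at 60C, pulling back at 70C), `headroom_c("60000", "70000")` comes out to 10.0, the same 10C of dynamic range mentioned for the RK3288.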

-

The 64-bit Rockchips are showing very good memory performance. I've got a friend working on bringing up a Renegade board on Arch, and the memory performance he's observed is consistent with the findings here...