usual user

Everything posted by usual user

-

Please provide the output of ir-keytable, and of ir-keytable -t while pressing your mapped IR buttons.

-

Since you haven't provided any details about what keycodes you've mapped and what desktop features are assigned to them, no one can tell if what you're seeing is what to expect.

-

UMS has not interested me much so far. However, the mention still inspired me to bring forward my rebase, which was planned for after the v2023.10 GA release. Now my serial console boots up with a nice little boot menu:

    *** U-Boot Boot Menu ***

    Standard Boot
    NVME USB Mass Storage
    eMMC USB Mass Storage
    microSD USB Mass Storage
    usb 0:3
    nvme 0:1
    nvme 0:3
    nvme 0:4
    mmc 1:2
    U-Boot console

    Press UP/DOWN to move, ENTER to select, ESC to quit

Now I don't even need to physically move the SD card back and forth between the card reader and the M1 when I want to perform a firmware update from my host build system, because the M1 can now act as a card reader itself. This even works for NVMe, SATA and eMMC storage. I rarely need this because I usually run updates directly from the M1, but it's still nice to have. However, this only becomes really interesting when HDMI video is also available and the EXPO menu can also be used.

I guess the day is approaching when I'll finally run this on the serial console:

    run mmc-fw-to-sf

It will transfer my self-contained firmware image build into the SPI flash. I will then lose the possibility to use outdated legacy OS forks and can only use modern operating systems with current bootflows, but if I understand correctly, I have never used anything so unmaintained 😉 But the need to press the RCY button at system startup is starting to get annoying.

-

Bring up for Odroid N2 (Meson G12B)

usual user replied to Brad's topic in Advanced users - Development

There are several tools to write SPI flash. Choose whatever you're familiar with, e.g.:

    dd if=u-boot-meson.bin of=/dev/mtd0

The tricky part is to wire the SPI flash up in the devicetree. Hardkernel seems to have declared this information to be Top Secret. I extracted this information from Petitboot, but I only got very unstable functionality. It took me several tries before the data was written without errors, so I'm hesitant to publish the values I used. Maybe there is someone out there who knows binding values that work more reliably and can contribute them.

-

With the release of U-Boot v2023.10, everyone will be able to create their own firmware on a stable basis. The only difference from other firmware builds is running make odroid-m1-rk3568_defconfig to set up a suitable configuration for the ODROID-M1. If you want to build beforehand, you need to dig through mailing lists and patchwork for fixes and improvements that have not landed yet and pick them up. As you can see here this has already happened. They use distributions that are not in any way designed specifically for the ODROID-M1 and where Petitboot is not able to support the bootflow used. And this is possible because the rk3568 SOC has sufficient mainline kernel support.

-

Judging by your following command excerpts, this time you did everything as required. You should now have my provided firmware build in use and it is working as expected. I.e. it is using distro-boot and should be able to boot various operating systems from USB, SD-card or eMMC as long as either a suitable legacy- (boot.scr), extlinux- (extlinux.conf) or EFI-bootflow is in place. And since it doesn't carry any OS artefacts on the firmware device and uses unmodified OS images for a single drive, OS updates should work much more reliably. In order to determine the cause and possibly find a solution, the serial console logs are mandatory. If you can't provide serial console logs, you're pretty much on your own. If it breaks for you, you have to keep the parts.

-

Judging by your following command excerpts, you did not do this. Since I don't know if the SD card to be prepared was really in a card reader listed as /dev/sda when you ran the command "root@odroidc4:/# dd if=/dev/zero of=/dev/sda1", I can't really tell if you chose the right device. In any case, you didn't target the entire device with the dd commands as instructed, but only the first partition (/dev/sda1). The correct target here would have been "/dev/sda". Serial console logs are taken via the 4-pin UART connector for the system console and are essential for proper debugging. Because you wrote my firmware to the first partition, and not to the location where the MASKROM code expects it, I can't tell what exactly is going on without appropriate console logs. But you have apparently corrupted the contents of the first partition enough that the existing firmware also uses the bootflow on the USB storage. However, the original goal is to have an SD card that contains only the firmware and no other system artifacts.
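A guard against exactly this mix-up could look roughly like the sketch below. It relies on the fact that the kernel exposes a "partition" attribute in sysfs for partitions but not for whole disks. The function name is_partition and its second argument (an alternative sysfs root, only there so the check can be tried without real hardware) are made up for this illustration.

```shell
#!/bin/sh
# Sketch only: tell a partition (/dev/sda1) from a whole device (/dev/sda).
# is_partition and the optional sysfs-root argument are hypothetical helpers.
is_partition() {
    sysroot="${2:-/sys/class/block}"
    # Partitions have a "partition" attribute in sysfs, whole disks do not.
    [ -e "$sysroot/$(basename "$1")/partition" ]
}

target="${1:-/dev/sda1}"
if is_partition "$target"; then
    echo "$target is a partition, do not write the firmware there"
else
    # Note: a device node that does not exist at all also ends up here.
    echo "$target is not a partition (or not present)"
fi
```

Called with the device you are about to hand to dd, it gives a last sanity check before anything is overwritten.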

-

Out of curiosity, are you interested in a little experiment? If so,

- prepare an entirely cleared SD card (dd if=/dev/zero of=/dev/${entire-SD-card})
- dd the unpacked firmware (tar -xzf uboot-meson.tgz) that is uploaded here in place with: dd bs=512 seek=1 conv=notrunc,fsync if=u-boot-meson.bin of=/dev/${entire-SD-card}
- dd the unmodified Armbian_23.02.2_Odroidc4_jammy_current_6.1.11 image onto your USB 3.0 storage
- plug the prepared SD card and your USB 3.0 storage into your odroid c4
- boot the odroid c4 and post the serial console log
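If you want to see what the dd options in the second step do before touching a real card, the write can be rehearsed on a scratch image file. This is only a sketch: the image file, its 4 MiB size and the dummy 0xFF payload are stand-ins invented for the illustration, and only the dd options are taken from the steps above.

```shell
#!/bin/sh
# Sketch only: rehearse the firmware write on a scratch image, not a real card.
set -eu

workdir=$(mktemp -d)
card="$workdir/card.img"         # stands in for /dev/${entire-SD-card}
fw="$workdir/u-boot-meson.bin"   # stands in for the unpacked firmware

# A fully cleared "card": 4 MiB of zeros.
dd if=/dev/zero of="$card" bs=1M count=4 2>/dev/null

# Dummy firmware payload: 1024 bytes of 0xFF.
dd if=/dev/zero bs=512 count=2 2>/dev/null | tr '\000' '\377' > "$fw"

# Same options as in the step above: seek=1 skips the first 512-byte sector,
# conv=notrunc leaves the rest of the target untouched.
dd bs=512 seek=1 conv=notrunc,fsync if="$fw" of="$card" 2>/dev/null

# Sector 0 should still be all zeros ...
sector0=$(dd if="$card" bs=512 count=1 2>/dev/null | od -An -v -tx1 | tr -d ' \n')
# ... and the payload should sit at byte offset 512.
if dd if="$card" bs=512 skip=1 count=2 2>/dev/null | cmp -s - "$fw"; then
    payload=same
else
    payload=differs
fi
size=$(wc -c < "$card")

echo "payload at offset 512: $payload, image size: $size bytes"
```

The point of seek=1 is that the first sector, where a partition table would live, is left alone, and conv=notrunc keeps dd from cutting the target short after the firmware.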

-

Software that lies about true hardware specifications is nothing new. Even in this forum there are many repetitive posts about this topic.

-

You ignored the organization: a byte is 8 bits, hence 512Mx4 x 4 chips = 8 Gbit = 1 gigabyte.
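Spelled out as shell arithmetic, under the assumption that "512Mx4" means 512 Mi locations of 4 bits each per chip (the variable names are just for illustration):

```shell
#!/bin/sh
# Assumption: "512Mx4" = 512 Mi locations per chip, each 4 bits wide.
depth_mi=512      # locations per chip, in Mi
width_bits=4      # bits per location
chips=4

total_mbit=$(( depth_mi * width_bits * chips ))   # capacity in Mibit
total_mbyte=$(( total_mbit / 8 ))                 # 8 bits per byte
total_gbyte=$(( total_mbyte / 1024 ))

echo "${total_mbit} Mibit = ${total_mbyte} MiB = ${total_gbyte} GiB"
```

This prints "8192 Mibit = 1024 MiB = 1 GiB".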

-

The ole power-off button using gpio question - AML-S905X-CC

usual user replied to pezmaker's topic in Beginners

The necessity of the "-B" parameter with the observed gpio behavior is probably due to how the gpio is wired up in the DT and how its pin control is set up there. Since I didn't know the exact use case, I only presented the basic command and left it to the user's imagination how it would ultimately be used. It's general shell usage, and if I understand the use case correctly, I'd have e.g. used something like this in a script that loads on startup:

    gpiomon --num-events=1 -B pull-down -f gpiochip0 9 && wall power off initiated && sleep 5 && halt

You're welcome.

-

The ole power-off button using gpio question - AML-S905X-CC

usual user replied to pezmaker's topic in Beginners

gpiomon is your friend, e.g.:

    sudo gpiomon --num-events=1 gpiochip1 97 && poweroff

-

To get multimedia acceleration, you'll need an OS with up-to-date mainline software releases and a DE based on Wayland. For applications based on the ffmpeg framework, you will need patched mainline ffmpeg because request-api support is not yet included in mainline out-of-the-box. In order to have HEVC decoder support for the rk3399, you still need the kernel patches, as there is still more development work to be done. For the OPI 4 LTS support you need a suitable DTB that obeys mainline bindings and a device specific firmware to boot the OS. As far as I can see, Armbian offers these two components. So all the necessary information is available to create an appropriate OS.

-

Can't read /etc/armbianmonitor/datasources/soctemp

usual user replied to Peter Andersson's topic in Rockchip

I asked you, as a first step, to upload the rk3399-rock-4c-plus.dtb, which is currently provided by the Armbian OS, to be able to check if the thermal zone is really not wired-up. There's no point in putting effort into fixing something that isn't broken.

-

Can't read /etc/armbianmonitor/datasources/soctemp

usual user replied to Peter Andersson's topic in Rockchip

Exactly, either there is no thermal zone wired-up in the DT or the kernel build configuration does not have the necessary drivers enabled. To rule out the DTB, you can upload the one you use in Armbian and I can investigate accordingly.

-

Can't read /etc/armbianmonitor/datasources/soctemp

usual user replied to Peter Andersson's topic in Rockchip

What does tmon tell you about your thermal subsystem?

-

I didn't claim to use Armbian. I'm using an OS that is compiled for an entire architecture (aarch64) and not for a specific single device. It works for any SOC, provided that a kernel can be built that uses mainline APIs. I can use the same storage device that contains the OS with all my devices (NanoPC-T4, ODROID-N2+, ODROID-M1, HoneyComb, ...). OK, I have some media copies, as swapping back and forth when using multiple devices at the same time is very annoying and impractical. The only requirement for this to be possible is the presence of a firmware that can boot the OS. And here's where my dilemma begins. The OS is 100% FREE & OPEN SOURCE, i.e. it does not provide firmware based on binary blobs. Firmware with mainline U-Boot as payload fulfills all the necessary requirements to boot my OS from any storage media, and I have learned over time how to build it on my own. Armbian has always been a great help here, whether with knowledge from their forum or with legacy firmware builds as a workaround for my device bring-up until I was able to switch to mainline firmware. And since I've shared my firmware builds here and in other places, I also have confirmation that the builds work for devices I don't own (ODROID-C4, ODROID-HC4, ODROID-N2, Radxa ROCK Pi 4B, Radxa ROCK Pi 4C, ...). I would suggest you choose the OS you are most familiar with, or the one that best suits your needs, as long as it uses recent mainline software. IMHO Debian-based distributions are way too stable ... outdated. Or you can find someone to backport all mainline improvements and deploy them in the appropriate distribution.

-

It's a good thing that my distribution doesn't know about this rumor, otherwise it would probably have to stop working immediately. There are several Wayland implementations, e.g. the plasma-desktop or Gnome with Wayland backend. User space is not really device-specific and therefore no specific solution for a specific device is necessary. As long as there is sufficient mainline kernel support for the components of a device, this even works out-of-the-box, provided you choose suitable software programs. The operating system I'm using works unchanged and comparably on all my devices, to the extent that the kernel supports them. The only area that is not yet satisfactory is hardware-accelerated video decoding, as development for mainline support is still in full swing. But with a suitable SOC selection, there are solutions that leave almost nothing to be desired for comprehensive desktop operation. E.g. my rk3399-based device is feature complete for generic desktop usage. My rk3568-based device only lacks support for the rkvdec2 video decoder IP; the hantro support is already available. The situation is worst for the S922X, because there is currently no working video hardware acceleration in mainline. But with its CPU processing power, video playback at moderate resolutions is still usable.

-

Using mainline kernel hardware video acceleration in applications requires, in the first place, basic support for V4L2-M2M hardware-accelerated video decode in the application itself. E.g. firefox has just landed initial support for the h.264 decoder. The next showstopper would be the lack of V4L2-M2M support in the ffmpeg framework. There are some hack patches in the wild, but no out-of-the-box solution. The next showstopper would be the lack of kernel support for the SOC video decoder IP. The lack of support in the application is a general problem for all devices that provide their decoders via V4L2-M2M. The lack of support in the ffmpeg framework is a problem for all applications that are based on it. Gstreamer-based applications, on the other hand, offer out-of-the-box solutions, as the necessary support is already integrated into the mainline gstreamer framework. When it comes to choosing a desktop environment, Xwindow is a bad choice. It was developed for x86 architecture and therefore, due to its design, cannot deal efficiently with V4L2-M2M requirements. Its developers at that time also recognized this and therefore developed Wayland from scratch. So when it comes to efficiently using a video pipeline in combination with a display pipeline, a desktop environment with Wayland backend is the first choice.

-

Since I haven't gotten any feedback on my build so far, I don't see much sense in it. In my experience, Armbian users tend to stick to legacy methods and my extensions only affect the upcoming U-Boot Standard Boot. In addition, the adoption of reviewed-by patches from the patchwork to the mainline tree seems to have stalled. So it may take some time until the general support is available out-of-the-box. And these are valuable improvements to the Rockchip platform, which also benefit ODROID-M1 support. Since Armbian already has a working boot method, I don't see any reason for an underpaid Armbian developer to invest his valuable time in an unfinished solution that anyone can have for free when everything finally lands. For me, it's a different story because my preferred distro has different prerequisites. I had made my build available so that others could save themselves the trouble of self-building, but there doesn't seem to be any particular interest.

-

It is not a missing package; in the first place it is the lack of basic support for V4L2-M2M hardware-accelerated video decode in the application. The next showstopper would be the lack of V4L2-M2M support in the ffmpeg framework. There are some hack patches in the wild, but no out-of-the-box solution. The next showstopper would be the lack of kernel support for the SOC video decoder IP. The lack of support in the application is a general problem for all devices that provide their decoders via V4L2-M2M. The lack of support in the ffmpeg framework is a problem for all applications that are based on it. Gstreamer-based applications, on the other hand, offer out-of-the-box solutions, as the necessary support is already integrated into the mainline gstreamer framework.

-

    dpkg -l | grep libgbm
    bash: dpkg: command not found...

I'm currently running mesa 23.0.3 and since libgbm is an integral part of mesa, the version is of course identical. However, the exact version is not really important, as the necessary API support is already very mature. It is only important that it is built with the current headers of its BuildRequires to represent the status quo. The API between gbm and mpv does not change, but the one between the kernel and gbm (sun4i-drm_dri) possibly does when kernel patches are applied, so it is probably more appropriate to rebuild mesa with the updated kernel headers. BTW, to get retroarch I would do:

    dnf install retroarch

-

IMHO, you're using a software stack that's way too outdated. You are missing features and improvements that have already landed. I'm not an expert in analyzing compliance logs, but if you compare your log to the one provided by @robertoj above, you'll see that your kernel at least lacks features that his provides:

    Format ioctls:
    @robertoj test VIDIOC_ENUM_FMT/FRAMESIZES/FRAMEINTERVALS: OK
    @mrfusion fail: v4l2-test-formats.cpp(263): fmtdesc.description mismatch: was 'Sunxi Tiled NV12 Format', expected 'Y/CbCr 4:2:0 (32x32 Linear)'
    @mrfusion test VIDIOC_ENUM_FMT/FRAMESIZES/FRAMEINTERVALS: FAIL
    @robertoj test VIDIOC_G_FMT: OK
    @robertoj test VIDIOC_TRY_FMT: OK
    @robertoj test VIDIOC_S_FMT: OK
    @mrfusion fail: v4l2-test-formats.cpp(460): pixelformat 32315453 (ST12) for buftype 1 not reported by ENUM_FMT
    @mrfusion test VIDIOC_G_FMT: FAIL
    @mrfusion fail: v4l2-test-formats.cpp(460): pixelformat 32315453 (ST12) for buftype 1 not reported by ENUM_FMT
    @mrfusion test VIDIOC_TRY_FMT: FAIL
    @mrfusion fail: v4l2-test-formats.cpp(460): pixelformat 32315453 (ST12) for buftype 1 not reported by ENUM_FMT
    @mrfusion test VIDIOC_S_FMT: FAIL

-

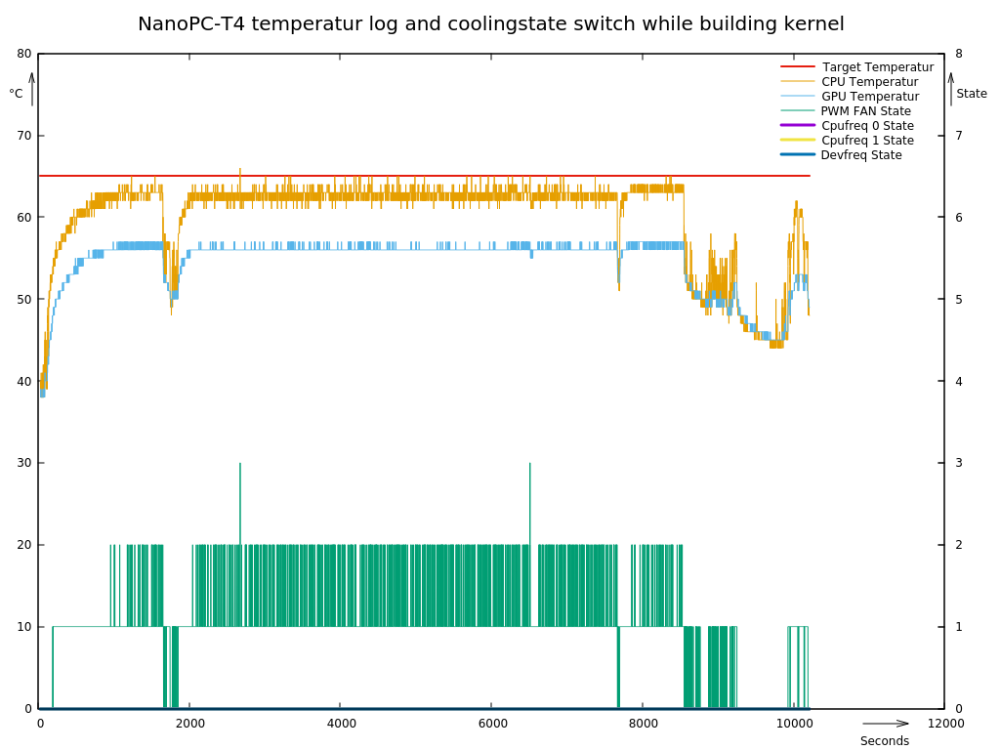

How does the NanoPC-T4 Armbian system use the onboard PWM fan interface?

usual user replied to laning's topic in Rockchip

The mainline DTB has a proper thermal zone configuration. The kernel can handle temperature management on its own; there is no need for an error-prone userspace component to be involved. As long as the kernel binary is built with all the necessary drivers, it works out-of-the-box.
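Whether the running kernel has actually picked up a thermal zone can be checked from sysfs. A minimal sketch, assuming the usual /sys/class/thermal layout; the function name list_thermal_zones and its optional argument (a different sysfs root, only there to try it without hardware) are made up for this illustration:

```shell
#!/bin/sh
# Sketch: list the thermal zones the running kernel exposes.
# list_thermal_zones and its optional root argument are hypothetical helpers.
list_thermal_zones() {
    root="${1:-/sys/class/thermal}"
    found=0
    for zone in "$root"/thermal_zone*; do
        # Skip the unexpanded glob pattern when no zone exists.
        [ -r "$zone/type" ] || continue
        found=1
        type=$(cat "$zone/type")
        # temp is reported in millidegrees Celsius
        temp=$(cat "$zone/temp" 2>/dev/null || echo "n/a")
        echo "$(basename "$zone") $type $temp"
    done
    if [ "$found" -eq 0 ]; then
        echo "no thermal zone found"
    fi
}

list_thermal_zones
```

If this reports no thermal zone, either the DTB does not wire one up or the kernel lacks the necessary drivers.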